Classifying image content with the Vision framework

Learn how to use the Vision framework to classify images in a SwiftUI application.

Classifying an image based on its content is one of the image processing operations you can perform with the Vision framework. This reference article shows how to use `ClassifyImageRequest` to identify what an image contains.

You need Xcode 16.0 and a device running iOS 18. The Simulator cannot run this request; if you try, you will encounter the following error:

```
Error Domain=NSOSStatusErrorDomain Code=-1 "Failed to create espresso context." UserInfo={NSLocalizedDescription=Failed to create espresso context.}
```

`ClassifyImageRequest` is a request that returns information describing an image as a collection of `ClassificationObservation` objects. To use it, we need to import the Vision framework.

```swift
import Vision
```

Create an asynchronous function that classifies the content of the image.

```swift
import UIKit   // UIImage and the CIImage(image:) initializer
import Vision

func classify(_ image: UIImage) async throws -> [ClassificationObservation]? {
    // Image to be classified
    guard let image = CIImage(image: image) else {
        return nil
    }

    do {
        // Set up the classification request
        let request = ClassifyImageRequest()

        // Perform the classification request on the image and get back results
        let results = try await request.perform(on: image)

        return results
    } catch {
        print("Encountered an error when performing the request: \(error.localizedDescription)")
    }

    return nil
}
```

The function `classify(_:)` works as follows:

- Stores the image as a `CIImage` object;
- Creates an instance of `ClassifyImageRequest`;
- Performs the request on the stored image using the method `perform(on:orientation:)` (the `orientation` argument is omitted here).

The request returns an array of `ClassificationObservation`, a type that represents classification information produced by the image-analysis request.
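Because the request fails in the Simulator with the espresso-context error shown earlier, one option is to detect the Simulator at compile time and report a clear error before calling `classify(_:)`. The sketch below relies on Swift's `targetEnvironment(simulator)` build condition; the error type and the helper name are hypothetical and not part of Vision.

```swift
// Hypothetical error type reporting that classification can't run in this environment.
enum ClassificationAvailabilityError: Error {
    case unsupportedOnSimulator
}

/// Hypothetical helper: throws when the app is built for the Simulator,
/// where ClassifyImageRequest fails with "Failed to create espresso context."
/// On a device build the body is empty and the call simply returns.
func ensureClassificationIsSupported() throws {
    #if targetEnvironment(simulator)
    throw ClassificationAvailabilityError.unsupportedOnSimulator
    #endif
}

// Possible usage (assumption), inside an async throwing context:
// try ensureClassificationIsSupported()
// let observations = try await classify(image)
```

Checking at compile time means the Simulator build fails fast with a descriptive error instead of reaching the Vision request at all.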

Access the classifications

Among its properties, each `ClassificationObservation` stores `confidence` and `identifier`. The first is the level of confidence in the observation's accuracy, a `Float` in the range 0.0 to 1.0 where 1.0 means highest confidence; the second is the label of the observation.
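To show how these two properties can be read, the sketch below picks the most confident label and turns its 0.0 to 1.0 confidence into a percentage. So that it runs without Vision or a device, it uses a hypothetical `LabelScore` struct that mirrors only `identifier` and `confidence`; the sample values are made up for illustration, not real classifier output.

```swift
// Hypothetical stand-in mirroring the two ClassificationObservation
// properties discussed above, so the logic can run without Vision.
struct LabelScore {
    let identifier: String
    let confidence: Float   // 0.0 to 1.0
}

/// Returns the most confident label formatted as "label (NN%)",
/// or nil when there are no scores.
func bestLabel(in scores: [LabelScore]) -> String? {
    guard let best = scores.max(by: { $0.confidence < $1.confidence }) else {
        return nil
    }
    // Convert the 0.0 to 1.0 confidence into a rounded whole percentage.
    let percent = Int((best.confidence * 100).rounded())
    return "\(best.identifier) (\(percent)%)"
}

// Made-up sample values for illustration only.
let sample = [
    LabelScore(identifier: "food", confidence: 0.62),
    LabelScore(identifier: "tomato", confidence: 0.87),
    LabelScore(identifier: "vegetable", confidence: 0.05)
]
print(bestLabel(in: sample) ?? "none")  // prints "tomato (87%)"
```

On a device, the input array could be built with `observations.map { LabelScore(identifier: $0.identifier, confidence: $0.confidence) }`.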

Because the collection contains an observation for every supported identifier, whatever its confidence, we need to filter it down to the observations within a relevant confidence range.
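A fixed threshold is one way to narrow the results; another is to rank by confidence and keep only the top few. The sketch below combines both, again using a hypothetical `LabelScore` stand-in for `ClassificationObservation` so it can run without a device. The `threshold` and `limit` defaults are illustrative choices, not values recommended by Vision.

```swift
// Hypothetical stand-in for ClassificationObservation's identifier/confidence pair.
struct LabelScore {
    let identifier: String
    let confidence: Float
}

/// Keeps labels whose confidence is above `threshold`, ordered from most
/// to least confident, and returns at most `limit` of them (`limit` must be >= 0).
func relevantLabels(from scores: [LabelScore],
                    threshold: Float = 0.1,
                    limit: Int = 5) -> [String] {
    scores
        .filter { $0.confidence > threshold }      // drop low-confidence labels
        .sorted { $0.confidence > $1.confidence }  // most confident first
        .prefix(limit)                             // keep at most `limit` entries
        .map(\.identifier)
}

// Made-up sample values for illustration only.
let scores = [
    LabelScore(identifier: "vegetable", confidence: 0.45),
    LabelScore(identifier: "tomato", confidence: 0.91),
    LabelScore(identifier: "car", confidence: 0.01),
    LabelScore(identifier: "food", confidence: 0.78)
]
print(relevantLabels(from: scores, limit: 2))  // prints ["tomato", "food"]
```

Sorting before capping keeps the list short and puts the most likely labels first, which suits a results list in a UI.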

The following function filters the results, keeping only the identifiers whose confidence is greater than 0.1.

```swift
private func filterIdentifiers(from observations: [ClassificationObservation]) -> [String] {
    var filteredIdentifiers = [String]()

    // Loop through the results to keep those with a confidence above 0.1
    for observation in observations {
        if observation.confidence > 0.1 {
            filteredIdentifiers.append(observation.identifier)
        }
    }

    return filteredIdentifiers
}
```

Integration in a SwiftUI view

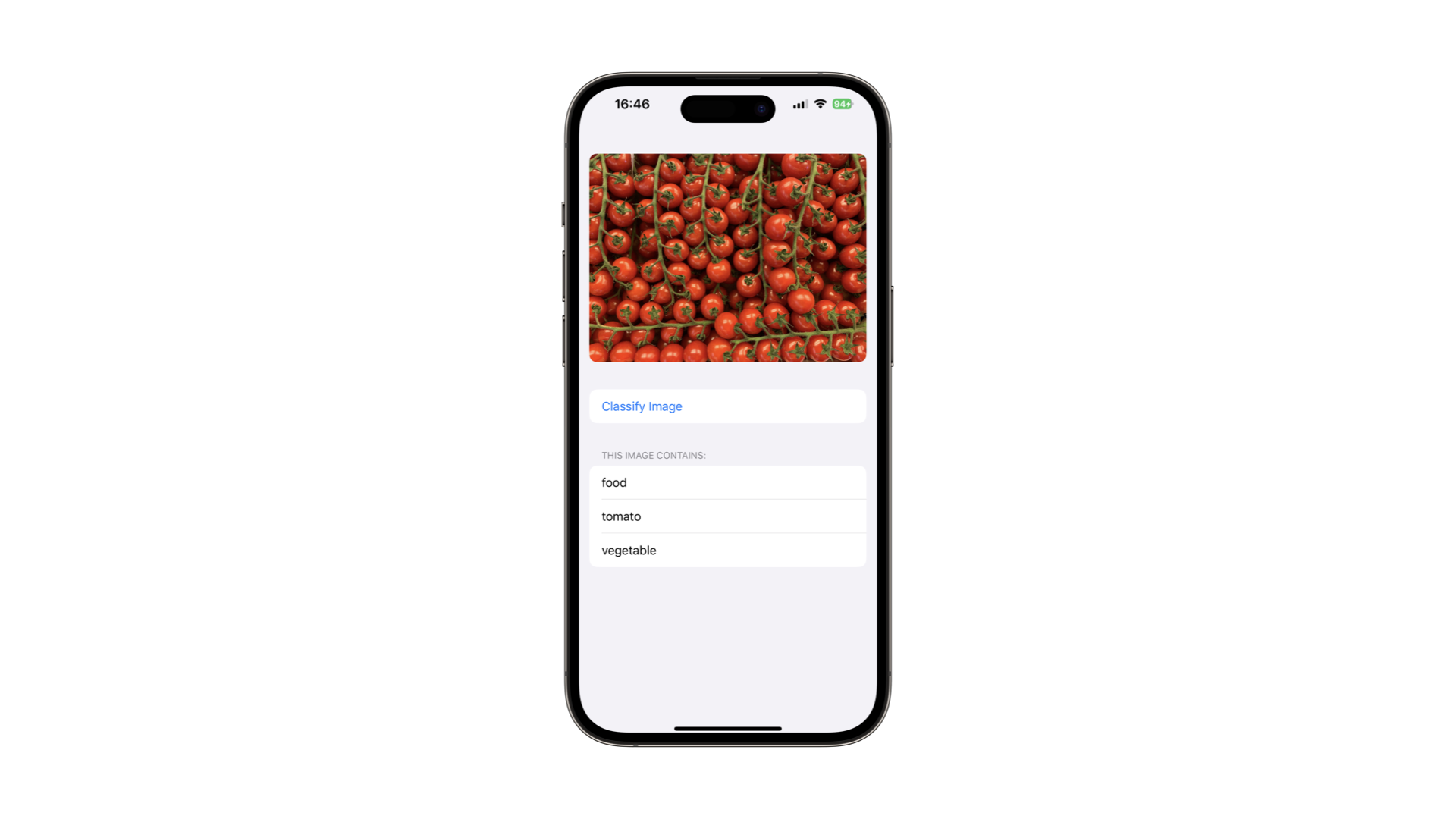

Here is a simple SwiftUI view showcasing the usage of the functions above.

```swift
import SwiftUI
import Vision

struct ClassifyImageView: View {

    @State private var classifications = [String]()

    var body: some View {
        Form {
            Section {
                Image("tomatoes")
                    .resizable()
                    .aspectRatio(contentMode: .fit)
                    .listRowInsets(EdgeInsets(top: 0, leading: 0, bottom: 0, trailing: 0))
            }

            Button {
                self.classifyImage()
            } label: {
                Text("Classify Image")
            }

            if classifications.isEmpty {
                Section {
                    Text("Image not classified yet")
                        .foregroundStyle(.secondary)
                }
            } else {
                Section {
                    // One row per label inside the Form section
                    ForEach(classifications, id: \.self) { result in
                        Text(result)
                    }
                } header: {
                    Text("This image contains:")
                }
            }
        }
    }

    private func classifyImage() {
        Task {
            // Load the same asset displayed in the view
            guard let image = UIImage(named: "tomatoes") else { return }

            let observations = try await classify(image)

            if let observations = observations {
                classifications = filterIdentifiers(from: observations)
            }
        }
    }

    private func classify(_ image: UIImage) async throws -> [ClassificationObservation]? {
        // Implementation shown earlier
        ...
    }

    private func filterIdentifiers(from observations: [ClassificationObservation]) -> [String] {
        // Implementation shown earlier
        ...
    }
}
```

It has a button that, when pressed, classifies the example image and updates the `classifications` state variable with the filtered labels from the results. The labels are then displayed in a list.