Designing for Apple Intelligence: Extending your app features to the system

Understand how bringing your app features to the system can improve your users' experience.

App development has changed profoundly in just a few years with the arrival of generative AI and complex machine learning models. Chatbots powered by generative AI have redefined how users interact with machines, letting them communicate in natural language instead of relying on traditional input methods. This shift drastically reduces friction by turning complex processes into simple, easy-to-understand commands.

As this type of interaction becomes the standard, users now expect to interact with their devices similarly, using their voice and natural language commands. Technologies like Apple Intelligence and the evolving Siri play a key role in facilitating this voice-first interaction with our devices.

For developers, adapting to this shift is essential to keep their apps compatible with these new technologies. The App Intents framework is central to this process: it exposes app functionality to system services such as Siri. In previous articles, we’ve already explored why implementing intents matters for integrating both app actions and data into the system.

To start this process, designers are responsible for identifying the most important actions and data to share with the system, so that they can be mapped to voice interactions that Siri can easily process.

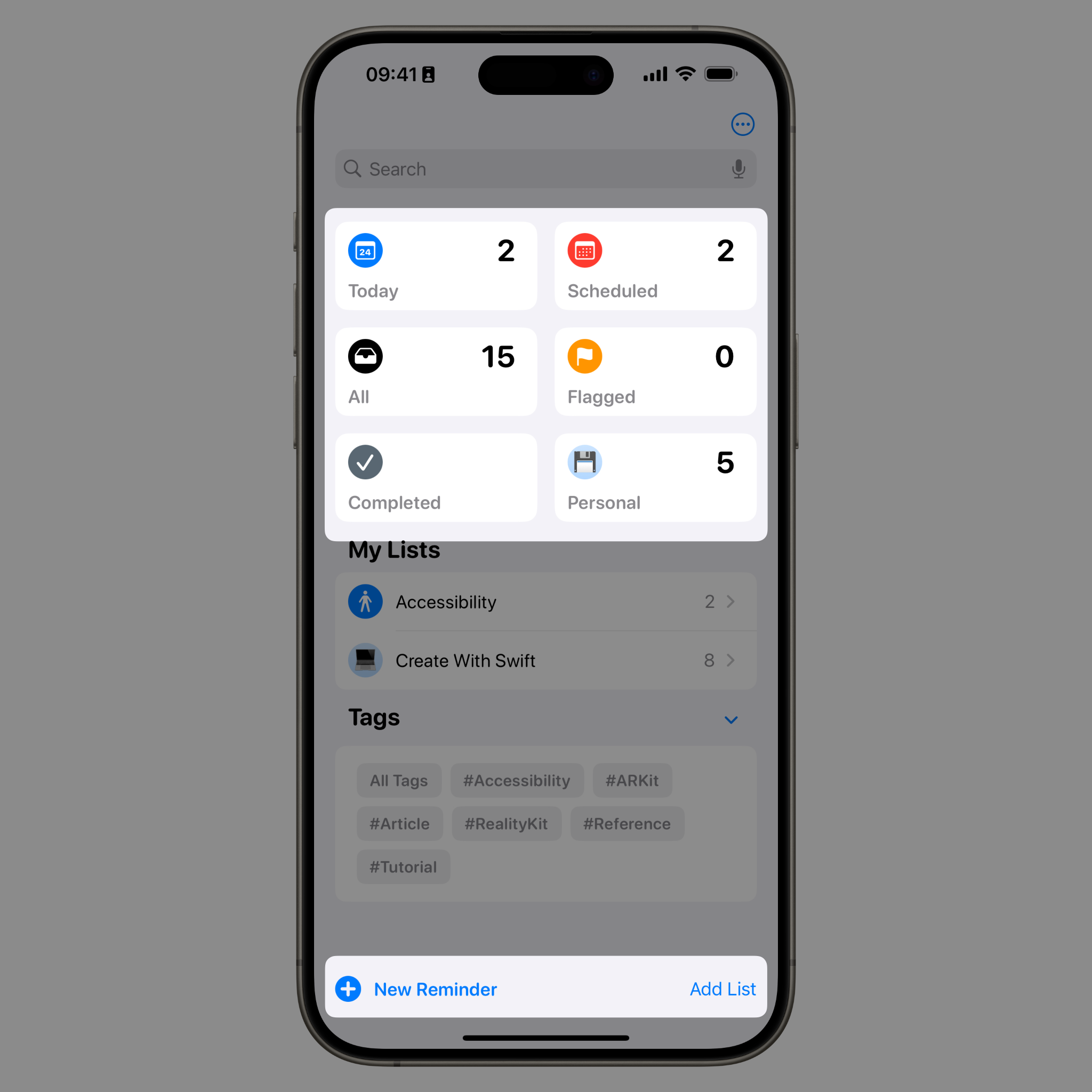

The Reminders app is a good example of how this process works. When users first open it, they are presented with the information stored in the app and two core actions:

- New Reminder: The user can create a new reminder in one of their existing lists, including details like title, notes, dates, tags, and more.

- New List: The user can create a new list by selecting a name, symbol, and color.

These two actions are perfect candidates for voice interaction and can easily be mapped to separate intents that users can trigger via voice commands.
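As a rough illustration, the two core actions could each be declared as an `AppIntent`. This is a minimal sketch, not how Apple implements Reminders: `ReminderStore`, `CreateReminderIntent`, and `CreateListIntent` are hypothetical names, the store is an in-memory stand-in for real persistence, and the list's symbol and color are omitted for brevity.

```swift
import AppIntents

// Hypothetical in-memory store standing in for the app's real persistence layer.
@MainActor
final class ReminderStore {
    static let shared = ReminderStore()
    private(set) var lists: [String: [String]] = ["Reminders": []]

    // Creates an empty list unless one with that name already exists.
    func addList(named name: String) {
        if lists[name] == nil { lists[name] = [] }
    }

    // Appends a reminder, creating the list on the fly if needed.
    func addReminder(_ title: String, to list: String) {
        lists[list, default: []].append(title)
    }
}

// "New Reminder" exposed as an intent that Siri and Shortcuts can run.
struct CreateReminderIntent: AppIntent {
    static let title: LocalizedStringResource = "New Reminder"

    @Parameter(title: "Title")
    var reminderTitle: String

    @Parameter(title: "List", default: "Reminders")
    var listName: String

    @MainActor
    func perform() async throws -> some IntentResult & ProvidesDialog {
        ReminderStore.shared.addReminder(reminderTitle, to: listName)
        return .result(dialog: "Added \(reminderTitle) to \(listName).")
    }
}

// "New List" as a separate intent (hypothetical; symbol and color omitted).
struct CreateListIntent: AppIntent {
    static let title: LocalizedStringResource = "New List"

    @Parameter(title: "Name")
    var name: String

    @MainActor
    func perform() async throws -> some IntentResult {
        ReminderStore.shared.addList(named: name)
        return .result()
    }
}
```

Keeping each action in its own intent gives Siri a narrow, unambiguous target for each spoken request, and the system asks for any missing parameter values on its own.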

When interacting with a voice-enabled system, users expect consistency and predictability. If users discover that they can add an item using voice commands, they naturally expect to be able to delete or modify it the same way. This expectation comes from a simple, intuitive logic: actions should follow a symmetrical pattern. Consequently, if users can add items to a list by voice, they should also be able to edit or delete those same items by voice.
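The symmetry principle translates naturally into code: every "add" intent gets matching "edit" and "delete" counterparts. The sketch below assumes a hypothetical `ReminderStore` that identifies reminders by title only to stay short; a real app would more likely model reminders as an `AppEntity` so Siri can disambiguate between them.

```swift
import AppIntents

// Hypothetical store: reminders identified by title for brevity.
@MainActor
final class ReminderStore {
    static let shared = ReminderStore()
    private(set) var titles: [String] = []

    func add(_ title: String) { titles.append(title) }

    // Returns false when no reminder matched, so the intent can say so.
    func delete(_ title: String) -> Bool {
        guard let index = titles.firstIndex(of: title) else { return false }
        titles.remove(at: index)
        return true
    }

    func rename(_ old: String, to new: String) -> Bool {
        guard let index = titles.firstIndex(of: old) else { return false }
        titles[index] = new
        return true
    }
}

// Counterpart to "add": removing a reminder by voice.
struct DeleteReminderIntent: AppIntent {
    static let title: LocalizedStringResource = "Delete Reminder"

    @Parameter(title: "Reminder")
    var reminderTitle: String

    @MainActor
    func perform() async throws -> some IntentResult & ProvidesDialog {
        let removed = ReminderStore.shared.delete(reminderTitle)
        let dialog: IntentDialog = removed
            ? "Deleted \(reminderTitle)."
            : "I couldn't find \(reminderTitle)."
        return .result(dialog: dialog)
    }
}

// Counterpart to "add": editing an existing reminder by voice.
struct RenameReminderIntent: AppIntent {
    static let title: LocalizedStringResource = "Rename Reminder"

    @Parameter(title: "Reminder")
    var currentTitle: String

    @Parameter(title: "New Title")
    var newTitle: String

    @MainActor
    func perform() async throws -> some IntentResult & ProvidesDialog {
        let renamed = ReminderStore.shared.rename(currentTitle, to: newTitle)
        let dialog: IntentDialog = renamed
            ? "Renamed \(currentTitle) to \(newTitle)."
            : "I couldn't find \(currentTitle)."
        return .result(dialog: dialog)
    }
}
```

Answering with a spoken "couldn't find" instead of failing silently keeps the voice flow predictable when the user names an item that doesn't exist.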

Another interaction users commonly expect is information retrieval. For instance, how often do we ask Siri about the weather? Users expect quick, concise answers. However, requests can also become more complex. A user might ask, "What's the weather this weekend?"

This introduces an additional layer of complexity, as the system must not only understand the question but also process contextual elements such as timeframes and specific locations.

An effective voice interface must handle such variations and give responses that are both accurate and natural. The same applies to the Reminders app: users should be able to request a simple list of reminders or more specific information, such as upcoming reminders or the details of a particular task.
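A retrieval intent can return both a spoken answer and a value that other shortcuts can reuse, and a parameter can capture the timeframe. This is a sketch under stated assumptions: `Reminder`, `ReminderStore`, `GetUpcomingRemindersIntent`, and the `daysAhead` parameter are all hypothetical.

```swift
import AppIntents
import Foundation

// Hypothetical model: a reminder with an optional due date.
struct Reminder {
    let title: String
    let dueDate: Date?
}

@MainActor
final class ReminderStore {
    static let shared = ReminderStore()
    var reminders: [Reminder] = []

    // Reminders due between `start` and `end`, soonest first; undated ones are skipped.
    func upcoming(from start: Date, to end: Date) -> [Reminder] {
        reminders
            .filter { r in r.dueDate.map { $0 >= start && $0 <= end } ?? false }
            .sorted { ($0.dueDate ?? .distantFuture) < ($1.dueDate ?? .distantFuture) }
    }
}

struct GetUpcomingRemindersIntent: AppIntent {
    static let title: LocalizedStringResource = "Get Upcoming Reminders"

    // Lets the user narrow the timeframe, e.g. "in the next 3 days".
    @Parameter(title: "Days Ahead", default: 7)
    var daysAhead: Int

    @MainActor
    func perform() async throws -> some IntentResult & ReturnsValue<[String]> & ProvidesDialog {
        let now = Date()
        let end = Calendar.current.date(byAdding: .day, value: daysAhead, to: now) ?? now
        let titles = ReminderStore.shared.upcoming(from: now, to: end).map(\.title)
        let dialog: IntentDialog = titles.isEmpty
            ? "You have no reminders due in the next \(daysAhead) days."
            : "You have \(titles.count) upcoming reminders: \(titles.joined(separator: ", "))."
        // The dialog is what Siri speaks; the value can feed other shortcut actions.
        return .result(value: titles, dialog: dialog)
    }
}
```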

Another critical aspect of voice interaction is enabling users to navigate an app using only their voice. This creates a more accessible and efficient experience, particularly for those who need hands-free interaction. A well-designed voice navigation system should be intuitive and capable of understanding natural language variations. For instance, in the Reminders app, users should be able to say, “Show all my reminders” or “Open my work tasks” to navigate between lists or view specific reminders.
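For voice navigation, one option is an intent that sets `openAppWhenRun` so the system brings the app to the foreground, then updates the app's navigation state. In the sketch below, `SmartList`, `Navigator`, and `OpenSmartListIntent` are hypothetical; in a SwiftUI app, `Navigator` would typically be observable so the root view reacts to the change.

```swift
import AppIntents

// Built-in smart lists a user might ask to open by voice (hypothetical mapping).
enum SmartList: String, AppEnum {
    case today, scheduled, all

    static let typeDisplayRepresentation: TypeDisplayRepresentation = "List"
    static let caseDisplayRepresentations: [SmartList: DisplayRepresentation] = [
        .today: "Today",
        .scheduled: "Scheduled",
        .all: "All"
    ]
}

// Hypothetical navigation state that the app's root view reads.
@MainActor
final class Navigator {
    static let shared = Navigator()
    var selectedList: SmartList? = nil
}

struct OpenSmartListIntent: AppIntent {
    static let title: LocalizedStringResource = "Open List"
    // Brings the app to the foreground when the intent runs.
    static let openAppWhenRun: Bool = true

    @Parameter(title: "List")
    var list: SmartList

    @MainActor
    func perform() async throws -> some IntentResult {
        Navigator.shared.selectedList = list
        return .result()
    }
}
```

Using an `AppEnum` for the parameter gives Siri a closed set of values to match against, which helps it map variations such as "Show all my reminders" onto the same destination.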

However, voice interaction is not always the preferred option, especially in public or quiet shared spaces where speaking aloud could feel intrusive. In such cases, a text-based approach offers a more discreet alternative. By integrating your app’s functions with the system through App Intents, you make those functions available directly in Spotlight. In the Reminders app, for example, Spotlight offers quick access to the “Today”, “Scheduled”, and “All” lists, providing a faster way to find and use the app’s core features.
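One way to surface an intent in Spotlight is an `AppShortcutsProvider`: App Shortcuts declared there become available without any setup by the user, and the same phrases also work with Siri. The sketch assumes a hypothetical `ShowTodayRemindersIntent`; note that every phrase must include the `.applicationName` token.

```swift
import AppIntents

// Hypothetical intent that opens the app on the "Today" list.
struct ShowTodayRemindersIntent: AppIntent {
    static let title: LocalizedStringResource = "Show Today's Reminders"
    static let openAppWhenRun: Bool = true

    func perform() async throws -> some IntentResult {
        // A real app would update its navigation state here.
        return .result()
    }
}

// Declares App Shortcuts that appear in Spotlight and the Shortcuts app,
// and that Siri can trigger with the phrases below.
struct ReminderShortcuts: AppShortcutsProvider {
    static var appShortcuts: [AppShortcut] {
        AppShortcut(
            intent: ShowTodayRemindersIntent(),
            phrases: [
                "Show today's reminders in \(.applicationName)",
                "What's due today in \(.applicationName)"
            ],
            shortTitle: "Today",
            systemImageName: "calendar"
        )
    }
}
```

Further entries, for example for "Scheduled" and "All", would be added as additional `AppShortcut` values inside the same builder.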

Additionally, actions like marking a task as complete can be streamlined further by providing a self-contained experience through widgets. Widgets let users perform actions directly from the Home Screen or Notification Center without opening the app. This removes unnecessary navigation, reduces friction, and makes the overall experience smoother.
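Interactive widgets (iOS 17 and later) can run an App Intent straight from a button, so the same intent serves Siri, Shortcuts, and the widget. In this sketch, `ReminderStore`, `CompleteReminderIntent`, and `ReminderRow` are hypothetical; a real widget extension would also need to share its data with the app, for instance through an App Group.

```swift
import AppIntents
import SwiftUI

// Hypothetical store tracking which reminders are done.
@MainActor
final class ReminderStore {
    static let shared = ReminderStore()
    private(set) var completedIDs: Set<String> = []
    func markComplete(id: String) { completedIDs.insert(id) }
}

struct CompleteReminderIntent: AppIntent {
    static let title: LocalizedStringResource = "Complete Reminder"

    @Parameter(title: "Reminder ID")
    var reminderID: String

    // AppIntent requires an empty initializer; the second one is for the widget button.
    init() {}
    init(reminderID: String) { self.reminderID = reminderID }

    @MainActor
    func perform() async throws -> some IntentResult {
        ReminderStore.shared.markComplete(id: reminderID)
        return .result()
    }
}

// A row inside a widget view. Tapping the button runs the intent without
// opening the app; afterwards the system reloads the widget's timeline.
struct ReminderRow: View {
    let id: String
    let title: String
    let isComplete: Bool

    var body: some View {
        HStack {
            Button(intent: CompleteReminderIntent(reminderID: id)) {
                Image(systemName: isComplete ? "checkmark.circle.fill" : "circle")
            }
            .buttonStyle(.plain)
            Text(title)
        }
    }
}
```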

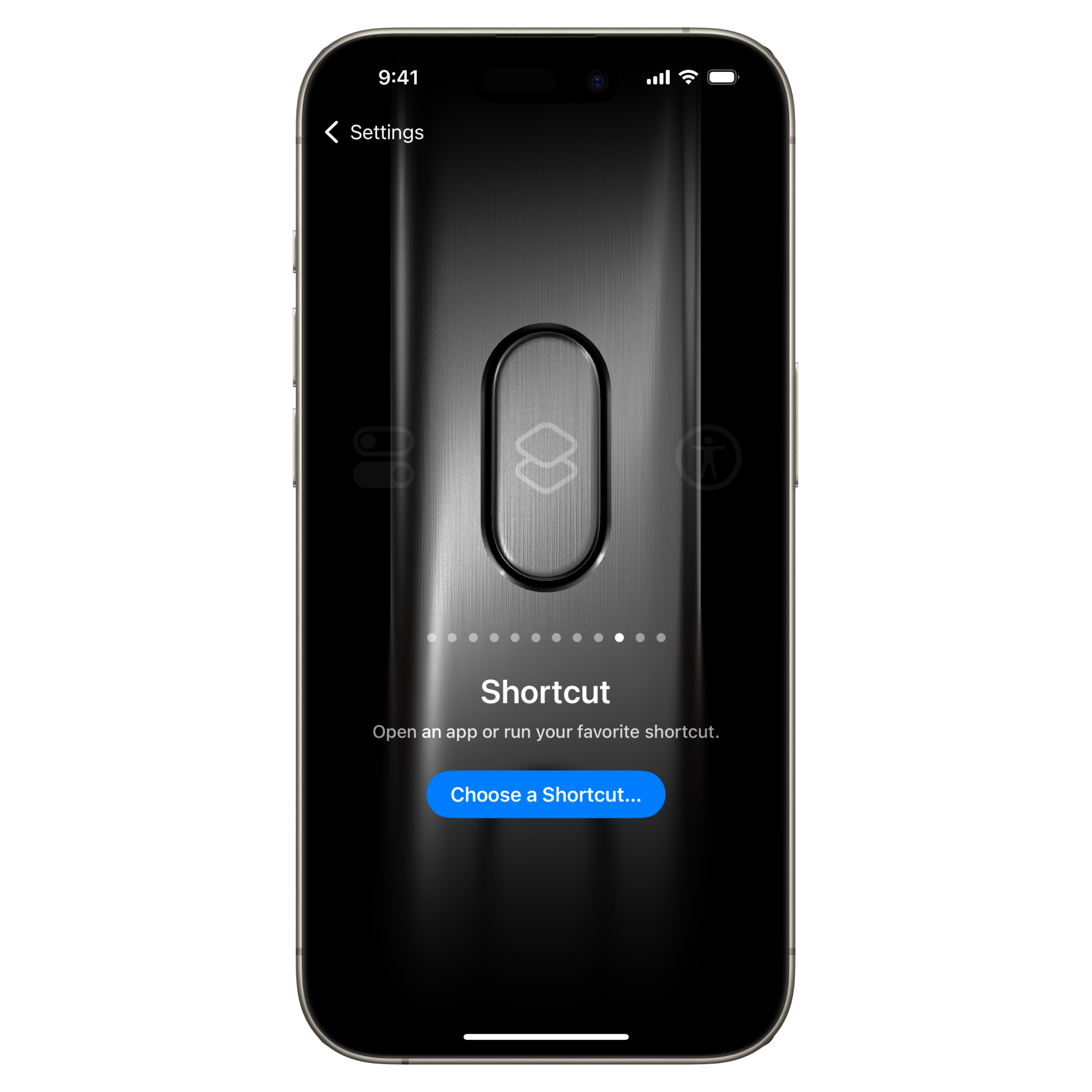

Apple is combining its powerful hardware and software to create a more intuitive interaction model. With hardware features like the Action Button, Apple is enabling users to perform specific actions without needing to navigate through menus or open apps. This hardware and software synergy provides users with faster, more direct access to the functions they need, improving overall efficiency and user satisfaction.

In this transition toward voice-first interfaces, developers must adapt to create seamless, intuitive experiences that meet these new expectations. By leveraging App Intents and designing thoughtful voice interactions, developers can keep their apps ahead in this rapidly changing landscape.

In large projects, designers play a crucial role in prioritizing which features should be exposed to the system first. Over time, this process should extend to all of the app’s features and data, following Apple’s guidelines. To integrate well with the system, and with other apps through new features powered by machine learning, every app component should be designed as an intent.