Detecting hand pose with the Vision framework

Learn how to use the camera to detect hand pose within a SwiftUI app.

The Vision framework is equipped with a variety of powerful tools for visual data analysis, and one of its key areas is hand pose detection. By integrating Vision with a camera feed, developers can create apps that recognize hand poses in real time, which is useful for gesture-based control and other user interactions.

Vision has a built-in request that makes hand pose detection straightforward. It uses machine learning to recognize key hand landmarks, which your app can then map to gestures or actions. By the end of this article, you will understand how to implement this feature in a SwiftUI application.

Hand anatomy

Our hands are incredibly complex, with twenty-seven bones and multiple joints that allow for fine motor control. These articulations, together with the palm, let us perform simple manual tasks such as waving as well as very complex movements such as typing or playing a musical instrument. The Vision framework's hand pose detection reflects this structure by focusing on key points such as the fingertips, the knuckles, and the base of the palm (the wrist). By detecting the coordinates of these points, it becomes possible to estimate even complicated hand poses, which makes it a powerful tool for applications ranging from gesture recognition to user interaction.

Detecting a hand pose programmatically comes down to accurately locating a set of landmarks that together define the position of the hand and what it is likely doing. These landmarks are based on the following anatomy:

- Bones and Joints: The hand contains five digits (the thumb and four fingers), each made up of several joints. Vision’s hand pose detection focuses on key points like the tips and intermediate joints of the digits, which allow for articulation and movement.

- Fingers: Each of the four fingers has three segments called phalanges. The joints are:

  - Distal Interphalangeal Joint (DIP): The joint closest to the fingertip.

  - Proximal Interphalangeal Joint (PIP): The middle joint of each finger.

  - Metacarpophalangeal Joint (MCP): The knuckle joint, connecting the finger to the hand.

- Thumb: The thumb is different, having only two phalanges. Its joints are:

  - Interphalangeal Joint (IP): The joint between the thumb tip and the middle of the thumb.

  - Metacarpophalangeal Joint (MP): The knuckle of the thumb, which appears as thumbMP in the code later in this article.

  - Carpometacarpal Joint (CMC): The base of the thumb, located near the wrist, which is very important for moving the thumb away from the palm as well as for grabbing something.

Example based on what was shown in Detect Body and Hand Pose with Vision (WWDC20)

W: Wrist; CMC: Carpometacarpal Joint; MCP: Metacarpophalangeal Joint; IP: Interphalangeal Joint; PIP: Proximal Interphalangeal Joint; DIP: Distal Interphalangeal Joint; TIP: Tip of Finger

These joints are essential for movement and form the core of what the Vision framework detects. Apple's Vision framework can identify a total of 21 points in the hand: four points for each of the four fingers, four for the thumb, and one for the wrist.

By using machine learning models, the Vision framework can recognize these points and track their movement in real time. This means you can detect everything from finger bends to the orientation of the entire hand, which is essential for gesture-based interfaces or AR applications.
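To make the count of 21 concrete, here is a minimal sketch that lists the landmarks as plain strings, grouped per digit. The strings mirror the joint names used in the Vision code later in this article, but the digits and allLandmarks values are illustrative names chosen for this example and are not part of Vision.

```swift
// Landmark names grouped per digit. The strings mirror the joint names
// used later in the article; the grouping itself is only illustrative.
let digits: [(name: String, joints: [String])] = [
    ("thumb",  ["thumbCMC", "thumbMP", "thumbIP", "thumbTip"]),
    ("index",  ["indexMCP", "indexPIP", "indexDIP", "indexTip"]),
    ("middle", ["middleMCP", "middlePIP", "middleDIP", "middleTip"]),
    ("ring",   ["ringMCP", "ringPIP", "ringDIP", "ringTip"]),
    ("little", ["littleMCP", "littlePIP", "littleDIP", "littleTip"])
]

// The wrist comes first, followed by each digit from base to tip.
let allLandmarks = ["wrist"] + digits.flatMap { $0.joints }

print(allLandmarks.count) // prints 21 (1 wrist + 5 digits × 4 points)
```

Keeping the wrist at index 0 and four points per digit after it is the same ordering the drawing code in this article relies on.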

Hand Pose in a SwiftUI app

Hand pose detection in a SwiftUI project

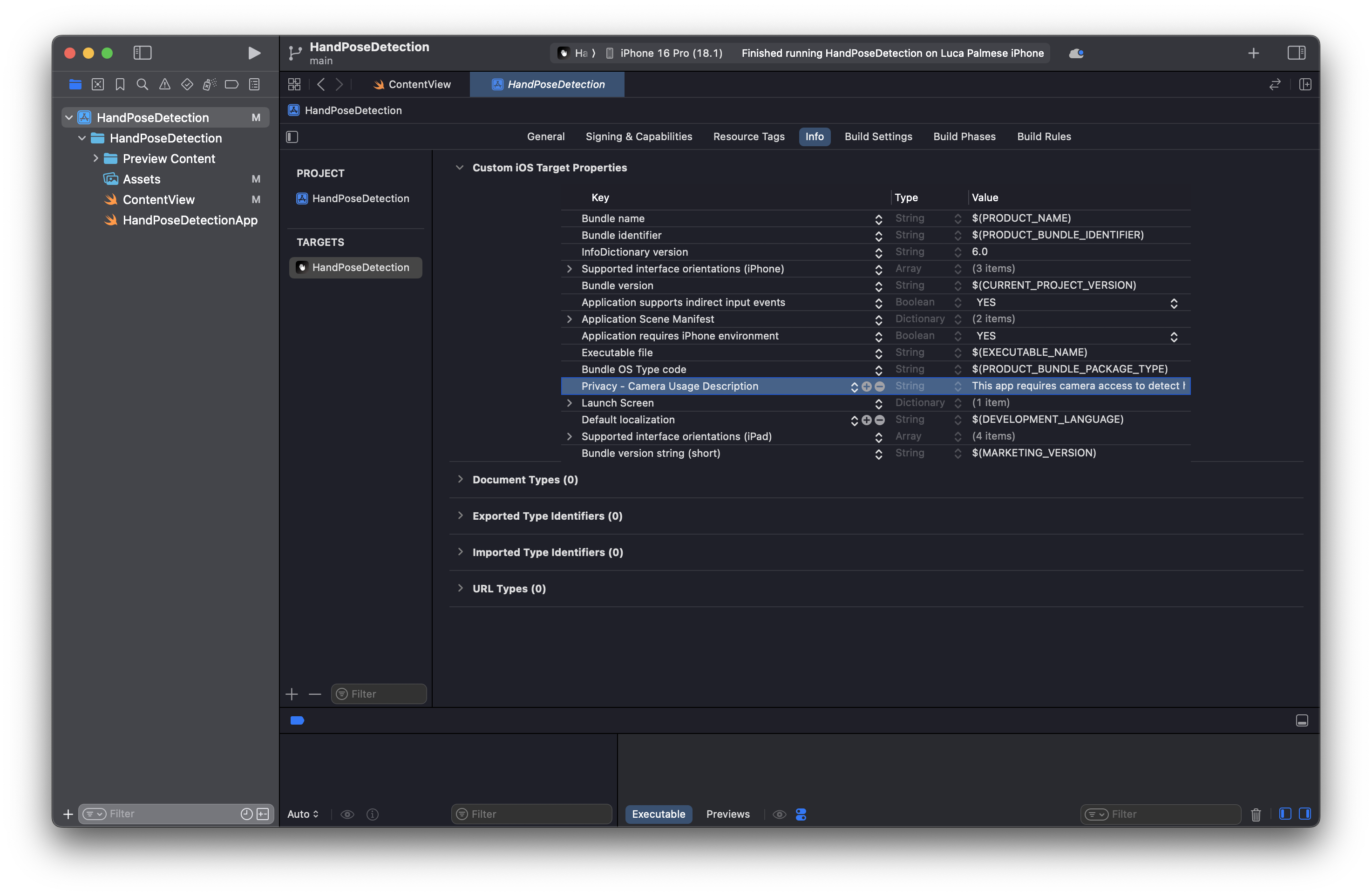

When creating a new SwiftUI project in Xcode that accesses the device’s camera, always remember to configure the app permissions so that the app can request user authorization for it.

In the app target, navigate to Info → Custom iOS Target Properties and add the key Privacy - Camera Usage Description (NSCameraUsageDescription) with a value explaining why your app needs camera access (e.g., "This app requires camera access to detect hand poses.").

Main Interface

With a clear understanding of how hand anatomy corresponds to what Vision detects, let’s break down the code that captures and processes hand poses.

In order to replicate what we achieved in the video above, implement the ContentView as follows:

    import SwiftUI
    import AVFoundation
    import Vision

    // 1. Application main interface
    struct ContentView: View {
        @State private var handPoseInfo: String = "Detecting hand poses..."
        @State private var handPoints: [CGPoint] = []

        var body: some View {
            ZStack(alignment: .bottom) {
                ScannerView(handPoseInfo: $handPoseInfo, handPoints: $handPoints)

                // Draw lines between finger joints and the wrist.
                // These indices assume handPoints contains all 21 joints in order.
                // When a low-confidence joint is dropped, later points shift and
                // lines may connect the wrong joints; the bounds checks below only
                // prevent out-of-range access.
                Path { path in
                    let fingerJoints = [
                        [1, 2, 3, 4],     // Thumb joints (thumbCMC -> thumbMP -> thumbIP -> thumbTip)
                        [5, 6, 7, 8],     // Index finger joints
                        [9, 10, 11, 12],  // Middle finger joints
                        [13, 14, 15, 16], // Ring finger joints
                        [17, 18, 19, 20]  // Little finger joints
                    ]

                    // The wrist is expected to be the first point in the array
                    if let wristPoint = handPoints.first {
                        for joints in fingerJoints {
                            guard joints.count > 1 else { continue }

                            // Connect wrist to the first joint of each finger
                            if joints[0] < handPoints.count {
                                path.move(to: wristPoint)
                                path.addLine(to: handPoints[joints[0]])
                            }

                            // Connect the joints within each finger
                            for i in 0..<(joints.count - 1) {
                                if joints[i] < handPoints.count && joints[i + 1] < handPoints.count {
                                    path.move(to: handPoints[joints[i]])
                                    path.addLine(to: handPoints[joints[i + 1]])
                                }
                            }
                        }
                    }
                }
                .stroke(Color.blue, lineWidth: 3)

                // Draw circles for the hand points, including the wrist
                ForEach(handPoints, id: \.self) { point in
                    Circle()
                        .fill(.red)
                        .frame(width: 15)
                        .position(x: point.x, y: point.y)
                }

                Text(handPoseInfo)
                    .padding()
                    .background(.ultraThinMaterial)
                    .clipShape(RoundedRectangle(cornerRadius: 10))
                    .padding(.bottom, 50)
            }
            .ignoresSafeArea()
        }
    }

- @State properties: There are two @State variables. handPoseInfo holds a message indicating whether a hand pose has been detected, while handPoints is an array that stores the joint points of the hand, which we’ll use to draw on the screen.

- ZStack: The ZStack layers the views. First, we have the ScannerView, which is responsible for the camera feed and hand detection. Above that, we draw lines between the wrist and the finger joints using SwiftUI’s Path. This gives a visual representation of the hand's skeleton. Vision can detect several hands at the same time, but we chose to visualize only one for simplicity.

- ForEach & Circles: This loop creates a red circle at each detected hand joint, providing visual feedback for every point found.

- Text: A small text message shows the status of the hand detection, such as how many points were found.
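The skeleton-drawing logic described above can also be isolated in a pure function, which makes it possible to check without a camera. The following is a minimal sketch under one assumption that differs from ContentView: the joints are stored as an array of optional points in the fixed order wrist, thumb, index, middle, ring, little, with nil for any landmark that was not recognized. The names fingerChains and skeletonSegments are hypothetical and exist only for this illustration.

```swift
import Foundation

/// Index chains into a 21-element landmark array: element 0 is the wrist,
/// followed by four points per digit from base to tip.
let fingerChains: [[Int]] = [
    [1, 2, 3, 4],     // thumb
    [5, 6, 7, 8],     // index
    [9, 10, 11, 12],  // middle
    [13, 14, 15, 16], // ring
    [17, 18, 19, 20]  // little
]

/// Returns the line segments of the hand skeleton.
/// A segment is skipped when either of its end points is missing (nil).
func skeletonSegments(for points: [CGPoint?]) -> [(start: CGPoint, end: CGPoint)] {
    var segments: [(start: CGPoint, end: CGPoint)] = []

    // Safe lookup: out-of-range indices behave like missing landmarks.
    func point(at index: Int) -> CGPoint? {
        points.indices.contains(index) ? points[index] : nil
    }

    for chain in fingerChains {
        // Wrist -> base of the digit, then each joint -> the next joint.
        let path = [0] + chain
        for (from, to) in zip(path, path.dropFirst()) {
            if let start = point(at: from), let end = point(at: to) {
                segments.append((start: start, end: end))
            }
        }
    }
    return segments
}
```

Inside a SwiftUI Path closure, each returned segment would translate to a move(to:) followed by an addLine(to:). Because a missing joint stays in its slot as nil, the remaining indices do not shift, so a dropped landmark removes only the lines that touch it.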

Implementing the Scanner View

Since we need to capture the frames from the camera feed to detect the hand poses, we decided to implement a ScannerView as follows:

    import SwiftUI
    import AVFoundation
    import Vision

    // 1. Application main interface
    struct ContentView: View { ... }

    // 2. Implementing the view responsible for detecting the hand pose
    struct ScannerView: UIViewControllerRepresentable {
        @Binding var handPoseInfo: String
        @Binding var handPoints: [CGPoint]

        let captureSession = AVCaptureSession()

        func makeUIViewController(context: Context) -> UIViewController {
            let viewController = UIViewController()

            // Use the default camera as the video input
            guard let videoCaptureDevice = AVCaptureDevice.default(for: .video),
                  let videoInput = try? AVCaptureDeviceInput(device: videoCaptureDevice),
                  captureSession.canAddInput(videoInput) else {
                return viewController
            }
            captureSession.addInput(videoInput)

            // Deliver each frame to the coordinator on a background queue
            let videoOutput = AVCaptureVideoDataOutput()
            if captureSession.canAddOutput(videoOutput) {
                videoOutput.setSampleBufferDelegate(context.coordinator, queue: DispatchQueue(label: "videoQueue"))
                captureSession.addOutput(videoOutput)
            }

            // Show the live camera feed behind the SwiftUI overlay
            let previewLayer = AVCaptureVideoPreviewLayer(session: captureSession)
            previewLayer.frame = viewController.view.bounds
            previewLayer.videoGravity = .resizeAspectFill
            viewController.view.layer.addSublayer(previewLayer)

            // startRunning() blocks until the session starts, so call it off the main thread
            DispatchQueue.global(qos: .userInitiated).async { [captureSession] in
                captureSession.startRunning()
            }

            return viewController
        }

        func updateUIViewController(_ uiViewController: UIViewController, context: Context) {}

        func makeCoordinator() -> Coordinator {
            Coordinator(self)
        }

        // The body of the Coordinator, including its initializer, is implemented in the next section
        class Coordinator: NSObject, AVCaptureVideoDataOutputSampleBufferDelegate {}
    }

The ScannerView handles the live video feed from the device’s camera. Here’s a breakdown:

- UIViewControllerRepresentable: This is a protocol used to integrate UIKit view controllers into SwiftUI. Here, the view controller hosts the AVFoundation capture pipeline built around AVCaptureSession.

- Capture Session: An AVCaptureSession is created to manage the flow of data from the camera. It connects the video input (the camera) to the video output (which delivers the frames for processing).

- Preview Layer: This layer shows the live video feed on the screen, making it easier to visualize what’s being captured.

Implementing the Coordinator Class

Finally, we extended the Coordinator class in the ScannerView to handle hand pose detection using Vision. Here is how we did it:

    import SwiftUI
    import AVFoundation
    import Vision

    // 1. Application main interface
    struct ContentView: View { ... }

    // 2. Implementing the view responsible for detecting the hand pose
    struct ScannerView: UIViewControllerRepresentable {
        ...

        // 3. Implementing the Coordinator class
        class Coordinator: NSObject, AVCaptureVideoDataOutputSampleBufferDelegate {
            var parent: ScannerView

            init(_ parent: ScannerView) {
                self.parent = parent
            }

            // Called for every frame delivered by the video output
            func captureOutput(_ output: AVCaptureOutput, didOutput sampleBuffer: CMSampleBuffer, from connection: AVCaptureConnection) {
                guard let pixelBuffer = CMSampleBufferGetImageBuffer(sampleBuffer) else {
                    return
                }
                self.detectHandPose(in: pixelBuffer)
            }

            func detectHandPose(in pixelBuffer: CVPixelBuffer) {
                let request = VNDetectHumanHandPoseRequest { (request, error) in
                    guard let observations = request.results as? [VNHumanHandPoseObservation],
                          let observation = observations.first else {
                        DispatchQueue.main.async {
                            self.parent.handPoseInfo = "No hand detected"
                            self.parent.handPoints = []
                        }
                        return
                    }

                    var points: [CGPoint] = []

                    // Loop through all recognized points for each finger, including wrist
                    let handJoints: [VNHumanHandPoseObservation.JointName] = [
                        .wrist,                                          // Wrist joint
                        .thumbCMC, .thumbMP, .thumbIP, .thumbTip,        // Thumb joints
                        .indexMCP, .indexPIP, .indexDIP, .indexTip,      // Index finger joints
                        .middleMCP, .middlePIP, .middleDIP, .middleTip,  // Middle finger joints
                        .ringMCP, .ringPIP, .ringDIP, .ringTip,          // Ring finger joints
                        .littleMCP, .littlePIP, .littleDIP, .littleTip   // Little finger joints
                    ]

                    // Keep only the joints recognized with sufficient confidence
                    for joint in handJoints {
                        if let recognizedPoint = try? observation.recognizedPoint(joint), recognizedPoint.confidence > 0.5 {
                            points.append(recognizedPoint.location)
                        }
                    }

                    // This handler runs on the video queue, so convert the normalized
                    // Vision points to screen coordinates and update the UI state on the main thread
                    let detectedPoints = points
                    DispatchQueue.main.async {
                        self.parent.handPoints = detectedPoints.map { self.convertVisionPoint($0) }
                        self.parent.handPoseInfo = "Hand detected with \(detectedPoints.count) points"
                    }
                }

                request.maximumHandCount = 1

                let handler = VNImageRequestHandler(cvPixelBuffer: pixelBuffer, orientation: .up, options: [:])
                do {
                    try handler.perform([request])
                } catch {
                    print("Hand pose detection failed: \(error)")
                }
            }

            // Convert Vision's normalized coordinates to screen coordinates
            func convertVisionPoint(_ point: CGPoint) -> CGPoint {
                let screenSize = UIScreen.main.bounds.size
                let y = point.x * screenSize.height
                let x = point.y * screenSize.width
                return CGPoint(x: x, y: y)
            }
        }
    }

The Coordinator processes each frame captured by the camera. It’s responsible for:

- Hand Pose Detection: VNDetectHumanHandPoseRequest is a Vision request that detects hand poses. Once a hand is detected, it locates the individual joints. For simplicity, we set request.maximumHandCount to 1, but it is possible to detect multiple hands in the same frame.

- Recognized Points: It processes the results, keeping the coordinates of the joints with a confidence above 0.5 (50%). The recognized points are then mapped to the screen’s coordinate system using the convertVisionPoint(_ point:) function. This function takes the normalized coordinates (ranging from 0 to 1, where 0.5 is the midpoint) from the Vision framework and scales them to match the screen's dimensions in SwiftUI. The x- and y-coordinates are swapped in this transformation: the normalized x coordinate is mapped to the screen's vertical height, and the normalized y coordinate is mapped to the screen's horizontal width. The swap works in this setup because the camera frames are passed to Vision in their native landscape orientation while the app displays them in portrait.

  Schema:

  - Normalized Vision Coordinates (0 to 1), as they land on screen after the swap: (x: 0, y: 0) → Top-Left, (x: 1, y: 1) → Bottom-Right

  - SwiftUI Coordinates (Width, Height): x of Vision maps to y on screen (vertical scaling), y of Vision maps to x on screen (horizontal scaling)

- Updating the UI: The detected points and status messages are sent back to the SwiftUI view, thus updating the display in real time.
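The coordinate mapping described above can be written as a pure function that receives the target size as a parameter instead of reading the screen bounds, which makes it easy to check against known values. This is a sketch under the same assumptions as the article's code (a portrait layout with frames passed to Vision using the .up orientation); the size parameter and the free-function form are illustrative changes, not what the Coordinator above uses.

```swift
import Foundation

/// Maps a normalized Vision point (0...1 on both axes) to view coordinates
/// by swapping the axes and scaling, as in the article's convertVisionPoint.
func convertVisionPoint(_ point: CGPoint, in size: CGSize) -> CGPoint {
    let x = point.y * size.width   // normalized y -> horizontal position
    let y = point.x * size.height  // normalized x -> vertical position
    return CGPoint(x: x, y: y)
}

// Hypothetical view size used only for this example.
let viewSize = CGSize(width: 400, height: 800)
let center = convertVisionPoint(CGPoint(x: 0.5, y: 0.5), in: viewSize)
print(center.x, center.y) // 200.0 400.0
```

Passing the size explicitly also means the same function could be fed the size of the view that actually hosts the overlay, for example one obtained from a GeometryReader, rather than the full screen.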

Running the Application

Once the SwiftUI application is running and a hand is detected, its key points are drawn over the camera feed, and the handPoseInfo message in ContentView shows how many points were found.

Knowing the structure of the hand, and in particular the joints and bones it depends on, makes it easier to understand how hand pose detection works. Every joint plays a pivotal role both in the natural motion of the hand and in tracking that motion with the Vision framework.

The framework’s ability to detect these specific landmarks is what makes it powerful for apps that involve natural user interfaces or gesture-based controls (visionOS can be a good practical example).

With this guide, you now know how to integrate Vision’s built-in hand pose detection into your SwiftUI application, and you can use it as a foundation to build out this functionality with additional features.
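As one possible next step, a simple gesture can be derived from the detected points by measuring the distance between two landmarks. The sketch below checks for a pinch between the thumb tip and the index fingertip. The isPinching function and its default threshold are assumptions made for this example; they are not part of Vision, and a suitable threshold depends on the coordinate space and on how far the hand is from the camera.

```swift
import Foundation

/// Returns true when the thumb tip and index tip are closer than `threshold`.
/// Both points are expected in the same coordinate space (for example,
/// screen points); the default threshold is an arbitrary starting value.
func isPinching(thumbTip: CGPoint, indexTip: CGPoint, threshold: CGFloat = 40) -> Bool {
    let dx = thumbTip.x - indexTip.x
    let dy = thumbTip.y - indexTip.y
    return (dx * dx + dy * dy).squareRoot() < threshold
}

// Tips roughly 22 points apart: treated as a pinch.
print(isPinching(thumbTip: CGPoint(x: 100, y: 100), indexTip: CGPoint(x: 110, y: 120))) // true

// Tips far apart: not a pinch.
print(isPinching(thumbTip: CGPoint(x: 100, y: 100), indexTip: CGPoint(x: 200, y: 300))) // false
```

To use this with the code in this article, the two tip positions would need to be looked up by joint name rather than by array index, since low-confidence joints are dropped from handPoints.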

Hand pose detection is just one of many powerful features offered by the Vision framework.

If you're interested in other uses, also check these other articles:

- Recognizing text with the Vision framework

- Classifying image content with the Vision framework

- Detecting the contour of the edges of an image with the Vision framework

- Removing image background using the Vision framework

- Scoring the aesthetics of an image with the Vision framework

- Reading QR codes and barcodes with the Vision framework