Identifying attention areas in images with Vision Framework

Learn how to use the Vision framework to pinpoint areas in an image that draw more attention.

One of the many features of the Vision framework is saliency analysis. It analyzes an image and generates a heat map that highlights the areas most likely to capture a viewer's attention.

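There are two types of saliency analysis ready to use in the Vision framework:

- Attention-based saliency (GenerateAttentionBasedSaliencyImageRequest): highlights the parts of an image most likely to draw a viewer's attention.
- Objectness-based saliency (GenerateObjectnessBasedSaliencyImageRequest): highlights the parts of an image most likely to contain foreground objects.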

To perform an attention-based analysis, create a GenerateAttentionBasedSaliencyImageRequest and run it on an image with one of the variants of its perform(on:orientation:) method.

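```swift
// These members live inside a SwiftUI view.
// Stores the result of the analysis so the view can react to it.
@State var saliencyObservation: SaliencyImageObservation?

private func detectSaliency(on image: UIImage) async throws {
    // Vision analyzes a CGImage, so extract it from the UIImage.
    guard let image = image.cgImage else {
        print("--- Could not load the image")
        return
    }

    // Create the attention-based saliency request and run it on the image.
    let request = GenerateAttentionBasedSaliencyImageRequest()
    saliencyObservation = try await request.perform(on: image)
}
```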

The request returns a SaliencyImageObservation, which contains a grayscale heat map of the areas of the analyzed image most likely to draw attention. Its two key properties are heatMap, which holds the heat map itself, and salientObjects, which holds the bounding boxes of the most salient regions.
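Vision typically reports bounding boxes, such as those of salientObjects, in normalized coordinates (0 to 1) with the origin in the lower-left corner. UIKit and SwiftUI place the origin at the top-left. The minimal plain-Swift sketch below shows the arithmetic for mapping such a box onto an image of a given pixel size, assuming that lower-left, normalized convention.

The NormalizedBox and PixelRect types and the toPixelRect(_:imageWidth:imageHeight:) function are hypothetical helpers written for illustration; they are not Vision API.

```swift
// Hypothetical helper types for illustration; these are not part of Vision.
struct NormalizedBox {
    // Normalized values in 0...1, origin at the lower-left corner (assumed Vision convention).
    var x: Double
    var y: Double
    var width: Double
    var height: Double
}

struct PixelRect: Equatable {
    // Pixel values, origin at the top-left corner (UIKit/SwiftUI convention).
    var x: Double
    var y: Double
    var width: Double
    var height: Double
}

/// Scales a normalized, lower-left-origin box to pixels and flips the y axis.
func toPixelRect(_ box: NormalizedBox, imageWidth: Double, imageHeight: Double) -> PixelRect {
    let width = box.width * imageWidth
    let height = box.height * imageHeight
    let x = box.x * imageWidth
    // The box's top edge sits at (y + height) in lower-left space,
    // which becomes 1 - (y + height) once the origin moves to the top.
    let y = (1 - box.y - box.height) * imageHeight
    return PixelRect(x: x, y: y, width: width, height: height)
}

// Example: a box covering the lower-left quarter of a 400 x 200 image.
let box = NormalizedBox(x: 0, y: 0, width: 0.5, height: 0.5)
let rect = toPixelRect(box, imageWidth: 400, imageHeight: 200)
print(rect.y) // 100.0
```

The flip matters when drawing the boxes over the image: without it, a region near the bottom of the photo would be drawn near the top.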

With the heat map, you can create an image overlay that visualizes the relevant areas. The following view converts the heat map into a CGImage and displays it:

```swift
import SwiftUI
import Vision

struct HeatMapView: View {
    var observation: SaliencyImageObservation

    @State private var heatMapImage: CGImage? = nil

    var body: some View {
        Group {
            if let heatMapImage {
                Image(uiImage: UIImage(cgImage: heatMapImage))
                    .resizable()
            } else {
                // Nothing to show until the heat map has been converted.
                Color.clear
            }
        }
        .task {
            processHeatMap(from: observation)
        }
    }

    /// Converts the observation's heat map into a CGImage that SwiftUI can display.
    private func processHeatMap(from observation: SaliencyImageObservation) {
        do {
            heatMapImage = try observation.heatMap.cgImage
        } catch {
            print("---- Could not generate heat map image: \(error.localizedDescription)")
        }
    }
}
```

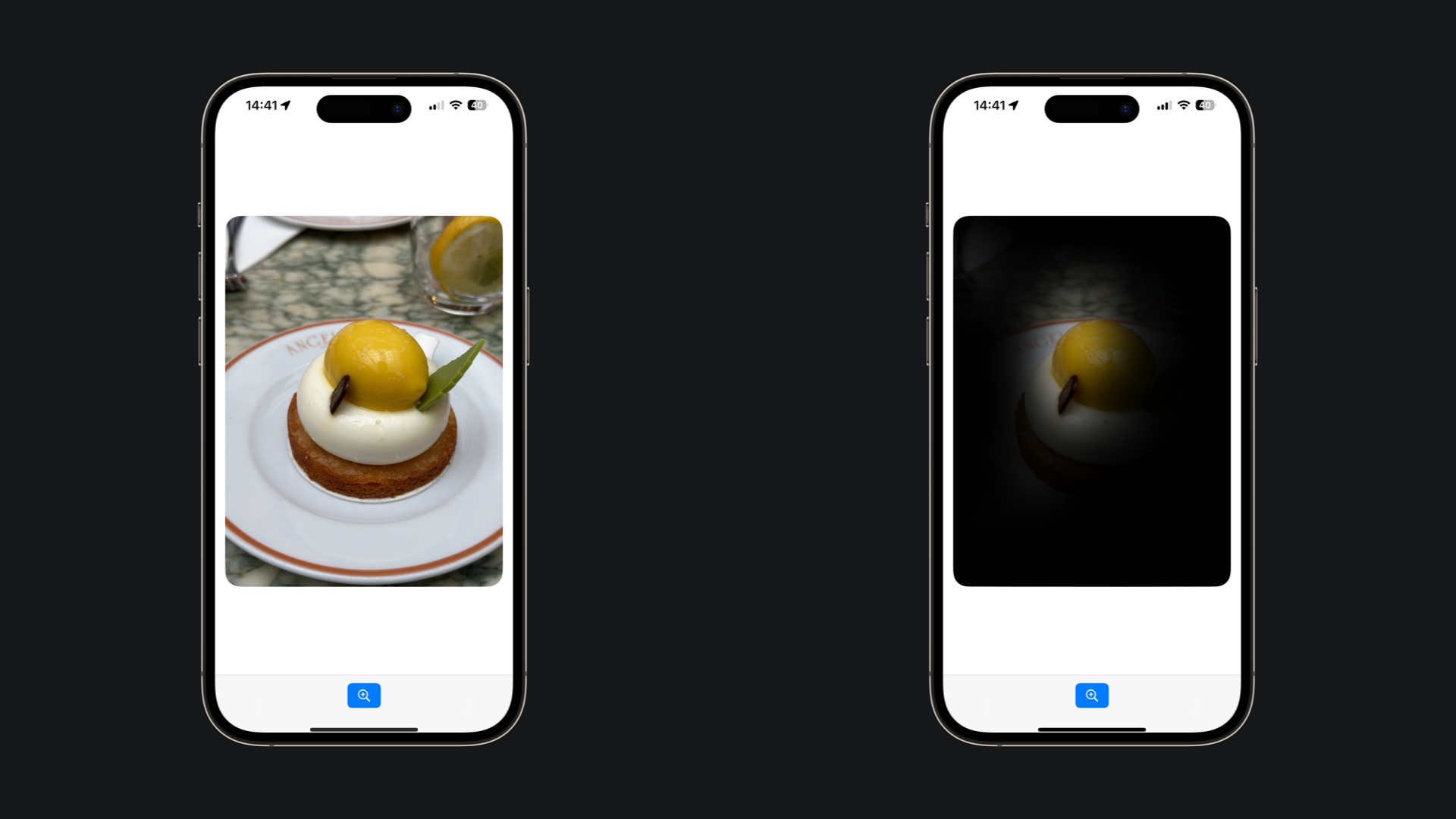
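Besides drawing the heat map, you can read its values directly, for example to find the most salient point or to measure how much of the image stands out. The sketch below works on a plain row-major array of Float intensities. It assumes normalized values where higher means more salient.

Extracting those values from the observation's pixel buffer is left out. The HeatMapGrid type, its methods, and the 0.5 threshold are hypothetical choices made for illustration.

```swift
// Hypothetical container for heat map values; not a Vision type.
struct HeatMapGrid {
    let width: Int
    let height: Int
    let values: [Float]  // row-major, values.count == width * height

    /// Returns the column and row of the highest value, or nil if the grid is empty.
    func peak() -> (column: Int, row: Int)? {
        guard let maxIndex = values.indices.max(by: { values[$0] < values[$1] }) else {
            return nil
        }
        return (column: maxIndex % width, row: maxIndex / width)
    }

    /// Fraction of cells whose value is at or above the threshold.
    func coverage(threshold: Float) -> Double {
        guard !values.isEmpty else { return 0 }
        let hot = values.filter { $0 >= threshold }.count
        return Double(hot) / Double(values.count)
    }
}

// A tiny 3 x 2 grid whose brightest cell is in the middle of the second row.
let grid = HeatMapGrid(width: 3, height: 2, values: [0.1, 0.2, 0.1,
                                                     0.3, 0.9, 0.6])
if let peak = grid.peak() {
    print("Peak at column \(peak.column), row \(peak.row)") // Peak at column 1, row 1
}
print(grid.coverage(threshold: 0.5))
```

Here two of the six cells reach the threshold, so the coverage is one third. A real heat map is much larger, but the same two passes over the values apply.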

The following SwiftUI view presents the results of a saliency analysis. It shows an image and a button that triggers the analysis. Once the analysis finishes, the view overlays the resulting heat map on the image using a multiply blend mode.

```swift
import SwiftUI
import Vision

struct ContentView: View {
    // Assumes an image named "sweet" in the app's asset catalog.
    @State var image: UIImage = UIImage(named: "sweet")!
    @State var saliencyObservation: SaliencyImageObservation?

    var body: some View {
        // The bottom-bar toolbar is only displayed inside a navigation container.
        NavigationStack {
            Image(uiImage: image)
                .resizable()
                .scaledToFit()
                // Overlaying the heat map over the analyzed image
                .overlay {
                    if let saliencyObservation {
                        HeatMapView(observation: saliencyObservation)
                            .scaledToFill()
                            .blendMode(.multiply)
                    }
                }
                // Aesthetic modifiers
                .clipShape(.rect(cornerRadius: 20))
                .padding()
                // Button to trigger the analysis
                .toolbar {
                    ToolbarItem(placement: .bottomBar) {
                        Button("Analyze image", systemImage: "sparkle.magnifyingglass", action: performAnalysis)
                            .buttonStyle(.borderedProminent)
                    }
                }
        }
    }

    private func performAnalysis() {
        Task {
            do {
                try await detectSaliency(on: image)
            } catch {
                print("--- Saliency analysis failed: \(error.localizedDescription)")
            }
        }
    }

    private func detectSaliency(on image: UIImage) async throws {
        // Image to be analyzed
        guard let image = image.cgImage else {
            print("--- Could not load the image")
            return
        }

        // Performing image analysis
        let request = GenerateAttentionBasedSaliencyImageRequest()
        saliencyObservation = try await request.perform(on: image)
    }
}
```
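The multiply blend mode used for the overlay combines the photo and the heat map by multiplying normalized 0 to 1 channel values. Assuming brighter heat map values mark more salient areas, white regions of the heat map leave the photo unchanged and darker regions dim it.

The plain-Swift sketch below reproduces that per-channel arithmetic for a single pixel. It ignores alpha and color-space handling. The RGB type and multiplyBlend(base:overlay:) function are hypothetical names, not SwiftUI API.

```swift
// Hypothetical RGB pixel with channels normalized to 0...1.
struct RGB: Equatable {
    var red: Double
    var green: Double
    var blue: Double
}

/// Multiply blend: each output channel is base * overlay.
/// A white overlay (1.0) keeps the base color; a black overlay (0.0) turns it black.
func multiplyBlend(base: RGB, overlay: RGB) -> RGB {
    RGB(red: base.red * overlay.red,
        green: base.green * overlay.green,
        blue: base.blue * overlay.blue)
}

let photoPixel = RGB(red: 0.8, green: 0.4, blue: 0.2)
let salient = RGB(red: 1, green: 1, blue: 1)                // bright heat map value
let background = RGB(red: 0.25, green: 0.25, blue: 0.25)    // dim heat map value

print(multiplyBlend(base: photoPixel, overlay: salient) == photoPixel) // true
print(multiplyBlend(base: photoPixel, overlay: background).red)        // 0.2
```

This is why the salient parts of the photo keep their original colors while the rest of the image is darkened in proportion to how little attention it is expected to draw.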