Pioneering Spatial Computing Education: From iOS to visionOS

Advancing developers from iOS to visionOS through an interdisciplinary lens beyond interface design and development.

Introduction

This article explores how education can flourish through an interdisciplinary lens, integrating insights from architecture, industrial design, neuroscience, and psychology. Beyond programming and interface design, this evolution necessitates understanding human-centered dynamics—how users perceive, navigate, and interact with their environments.

In the following sections, we will examine the challenges developers face in adapting to this paradigm shift, explore design perspectives such as Spatial Enablers, and introduce the Spatial Computing Education Matrix, a curriculum guide for fostering technical expertise, cognitive understanding, and creative adaptability. By embracing this multidimensional approach, we aim to prepare a new generation of professionals for a spatially integrated future.

One Year of Spatial Computing

A year has passed since Apple introduced visionOS, the operating system for Apple Vision Pro, marking the dawn of a new era in immersive technology. While the potential for transformative applications has captivated developers and users alike, this first year has also revealed significant challenges in designing meaningful and innovative experiences.

Unlike Extended Reality (XR), which prioritizes fully immersive environments, Spatial Computing focuses on seamlessly merging the digital and physical worlds. This approach reimagines everyday life, embedding dynamic, adaptive digital tools into our domestic and professional spaces. As we reflect on this initial year, we must consider the strides made and the hurdles still to be overcome in reshaping how we design and interact with technology.

Spatial Computing: Spatial computing is a broader concept that encompasses both AR and VR but goes beyond them. It refers to the use of technology to understand and interact with the physical world in a spatial context. Spatial computing combines the real and virtual worlds, allowing users to perceive and interact with digital content within their physical environment. It involves technologies like computer vision, sensors, tracking systems, and spatial mapping to seamlessly integrate digital and physical elements.

From Yenduri, Gokul & Reddy, Praveen & Maddikunta, Reddy & Gadekallu, Thippa & Jhaveri, Rutvij & Bandi, Ajay & Chen, Junxin & Wang, Wei & Shirawalmath, Adarsh & Ravishankar, Raghav & Wang, Weizheng. (2024). Spatial Computing: Concept, Applications, Challenges and Future Directions.

This shift in perspective requires a deeper understanding of how users perceive, interact with, and respond to digital content in their environment, not only with their eyes but also with their voices and hands. As technology advances, the educational system supporting it must evolve accordingly. After a year, how have educational systems evolved to meet the changing demands of Spatial Computing?

In education, Apple takes a multifaceted approach to promoting its technology and engaging developers worldwide. The strategy focuses on cultivating a robust developer ecosystem, providing educational resources, and creating opportunities for collaboration. These are some of Apple's touchpoints for developers:

The Apple Developer Academy. Apple offers dedicated training programs for aspiring developers in various locations worldwide.

Across the world, whether students are in Naples, Italy, or Jakarta, Indonesia, academy participants learn the fundamentals of coding as well as core professional competencies, design, and marketing, ensuring graduates have the full suite of skills needed to contribute to their local business communities.

[...] Apple offers two distinct training programs as part of the Apple Developer Academy: 30-day foundations courses that cover specific topic areas, including an introductory course for those considering app development as a career path, and a more intensive 10- to 12-month academy program that dives deeper into coding and professional skills.

From Coders, designers, and entrepreneurs thrive thanks to Apple Developer Academy, Apple.com

The Apple Developer Program. By joining this program, developers can access essential tools, resources, and documentation to create, test, and distribute apps for Apple platforms. This includes access to beta software, the Swift language, frameworks such as SwiftUI, and APIs for advanced technologies such as machine learning, augmented reality, and spatial computing.

The Worldwide Developers Conference (WWDC), held annually, is Apple’s flagship event for unveiling new software updates, development tools, and frameworks. The conference includes keynote presentations, hands-on labs, and developer sessions led by Apple engineers to help developers adopt the latest technologies.

Apple also provides online developer forums where developers can ask questions, share insights, and receive support from Apple engineers and the community. It supports developers through tech talks and workshops, where they interact directly with engineers and receive guidance on their projects. The App Store offers global distribution and promotional opportunities, highlighting innovative apps. Learning platforms such as Everyone Can Code and Swift Playgrounds encourage skill development for new and experienced developers.

By combining these efforts, Apple has spread awareness of its technologies and equipped developers with the knowledge and tools they need to innovate and contribute to the App Store ecosystem. Before the launch of Apple Vision Pro in February 2024, Apple employed a similar strategy across all these channels to promote the development of apps for visionOS.

The release of Apple Vision Pro immediately divided the enthusiast audience, sparking mixed reactions and debates about its potential and direction. Opinions conflict: some adore it, while others are indifferent. Among the 500,000 units sold, one case stands out for having changed a life forever: that of Daniele Dalledonne.

Sì. Vision Pro mi permette di vedere meglio dei miei occhi naturali. […] Se domani volessi salire in bicicletta (questa cosa fate finta che non ve l’abbia mai scritta) e volessi ridurre la mia soglia di rischio e aumentare la mia fiducia nel muovermi in mezzo al traffico, dovrei indossare il Vision Pro per essere più sicuro di quando ci vado senza.

Yes. Apple Vision Pro allows me to see better than my natural eyes. […] If I were to ride a bike tomorrow (let’s pretend I never mentioned this to you) and wished to lower my risk threshold while boosting my confidence in navigating through traffic, I would need to wear Apple Vision Pro to feel safer than I do without it.

From Quando Apple ti cambia sul serio la vita: “Con Vision Pro riesco a vedere meglio che con i miei occhi”

One interesting piece of feedback came from Zoe Thomas and Aaron Tilley of The Wall Street Journal. In a measured analysis on their podcast, they stated that Apple’s Vision Pro faces difficulties in gaining traction. Developers are reluctant to create apps for the mixed reality headset, citing technical challenges and a limited user base that hinder its potential despite its advanced features.

[…] And with the Apple Watch, even though the App Store wasn't essential for the product, Apple said it had 10,000 apps five minutes after the release of the watch. So, there is quite significant growth with these other devices. But an important caveat here is that developing for the Vision Pro is a lot more technically tricky than developing these different devices in some ways. Creating 3D content requires a lot more effort and attention and just more assets, and it requires a lot more work than a simple iPhone app or Apple Watch app.

From Why Apple’s Vision Pro Is Struggling to Attract App Developers, Tech News Briefing, Friday, October 18, 2024

This Wall Street Journal episode raises many questions, but one stands out: could it be that young developers’ enthusiasm is being held back simply by financial concerns, or is it perhaps the frustration of developers struggling to understand how to design meaningful, purpose-driven applications for this device?

Also, how can it be that while the tabloid press declares this product dead for a fistful of clicks, an entire community of surgeons is thrilled by its potential, and big tech players, including NVIDIA, have chosen to develop dedicated projects for it?

Identifying Possible Spatial Computing Challenges

Despite Apple’s groundbreaking efforts to position visionOS and Vision Pro as the next frontier of technology, several key challenges remain, particularly in equipping developers with the skills and perspective to unlock the platform’s full potential. These challenges stem not from technological limitations but from gaps in education, interdisciplinary collaboration, and conceptual understanding. What might these challenges be?

A Self-Referential Industry. Outside the Apple ecosystem, and before the launch of Apple Vision Pro, the Extended Reality (XR) industry operated as a tightly enclosed, self-referential bubble in which insiders intuitively navigate its complexities while outsiders often find it difficult to gain meaningful entry. This insularity is particularly evident in the learning curve, which is steep and imbalanced from both a cognitive and a technological standpoint: either you’re genuinely (or weakly) interested, or you give up trying to understand.

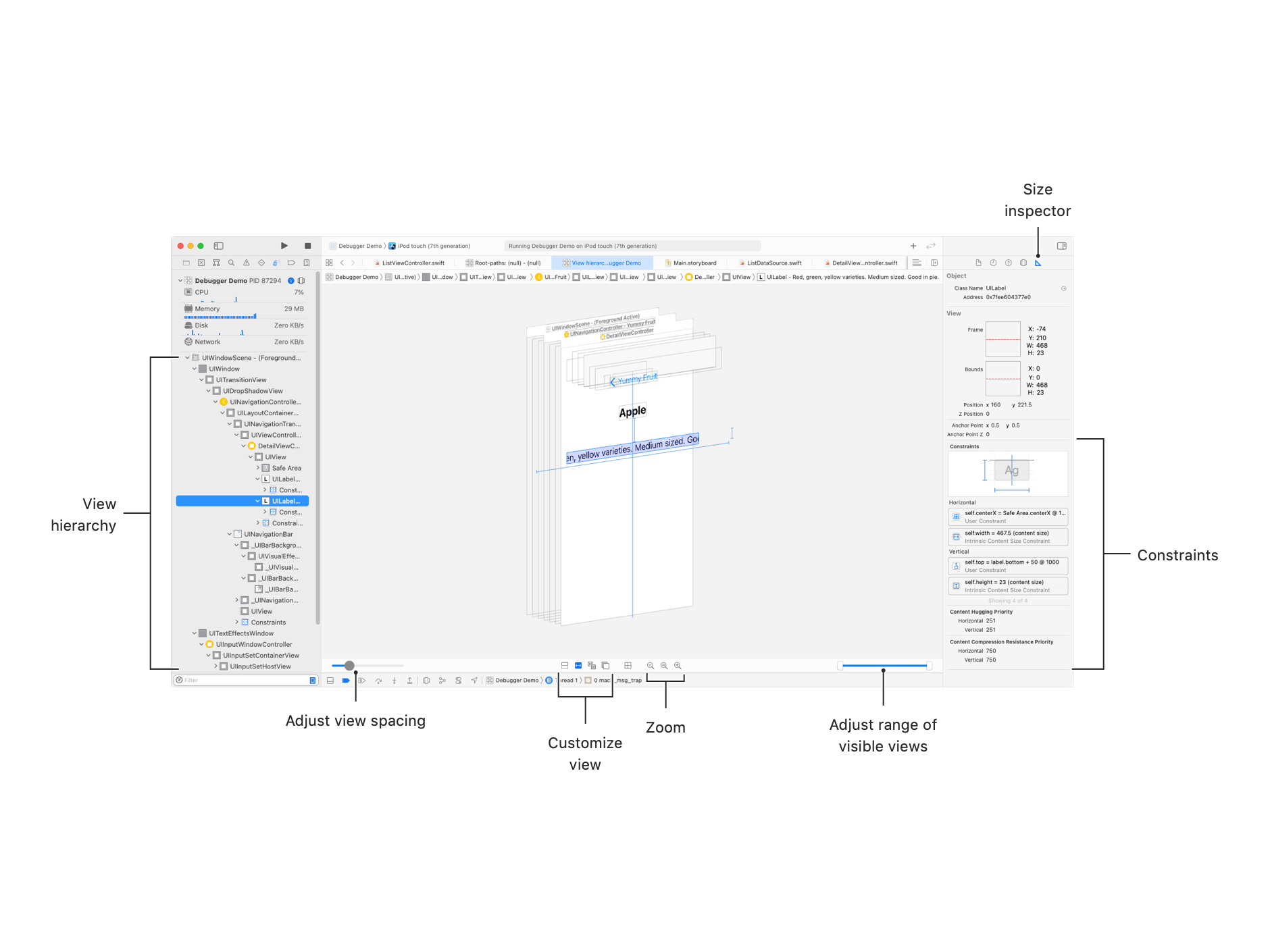

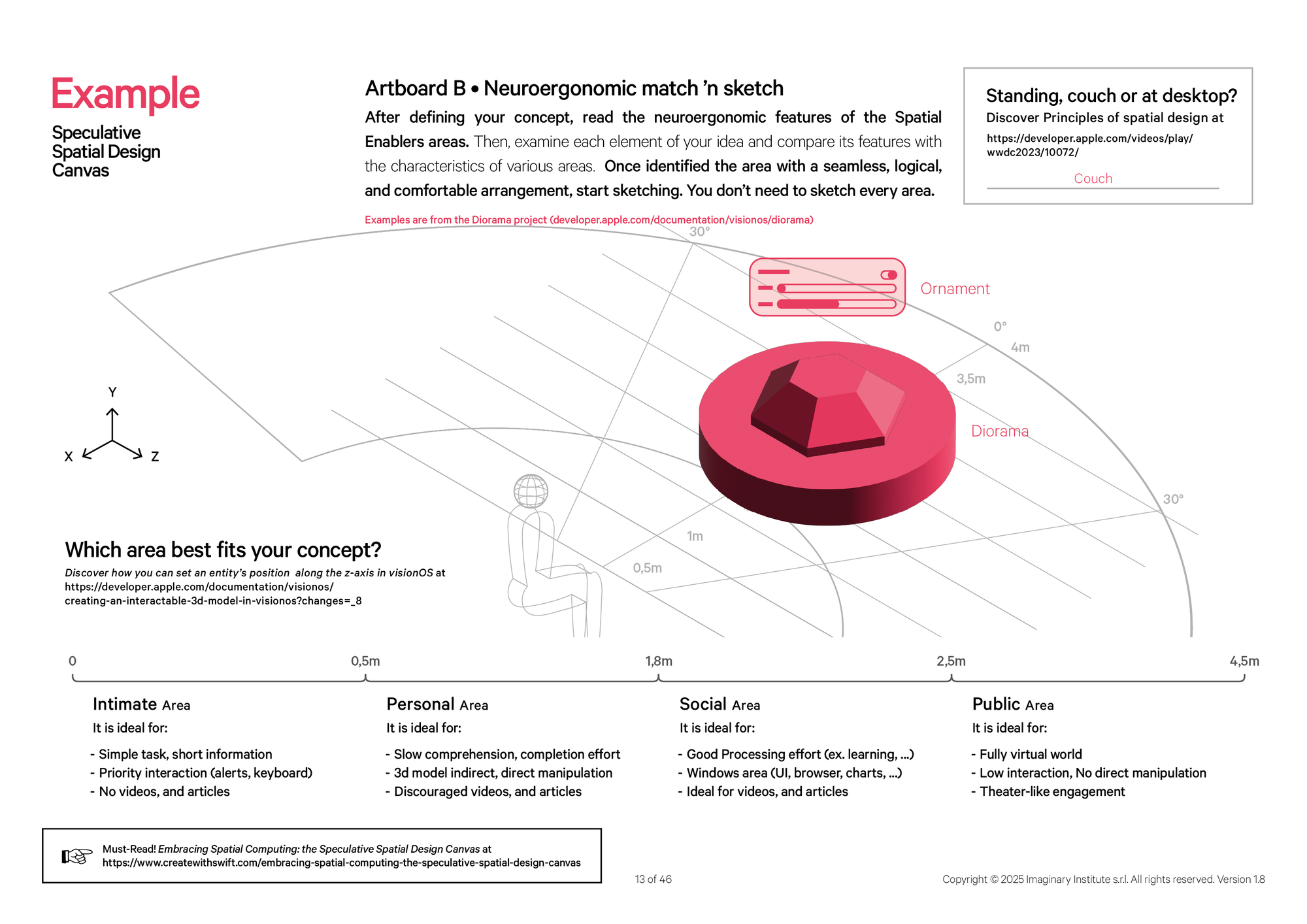

Developers’ Limited Interdisciplinary Skills. Transitioning from flat, screen-based app design to creating immersive spatial experiences requires more than just technical know-how. Developers must grasp three-dimensional design, spatial cognition, and neuroergonomics—skills often absent in traditional programming education. Without this foundation, even Apple’s Human Interface Guidelines fall short of addressing the unique complexities of Spatial Computing.

Something about design has been lost along the way. Apple’s historical focus on flat, 2D interfaces, dating back to the foundational work of Bruce Tognazzini, has created a legacy of user interface guidelines that fail to address the participatory and immersive nature of Spatial Computing. Designing for visionOS isn’t just about placing 3D objects in space; it’s about rethinking interactions and environments entirely.

In earlier versions of the Human Interface Guidelines (HIG), developers could engage with Basic Design principles rooted in the rich traditions of design schools such as the Bauhaus and the Ulm School and foundational thinkers like Munari and Anceschi. However, since the departure of Jony Ive from Apple, these principles have receded from prominence. Fortunately, remnants of this tradition can still be found on platforms like the Internet Archive’s Wayback Machine. While Apple’s HIG for visionOS has established a strong foundation, the HIG team appears to be prioritizing familiarity with the existing system and deferring the unique challenges of Basic Design (or Design Foundation) for spatial experiences.

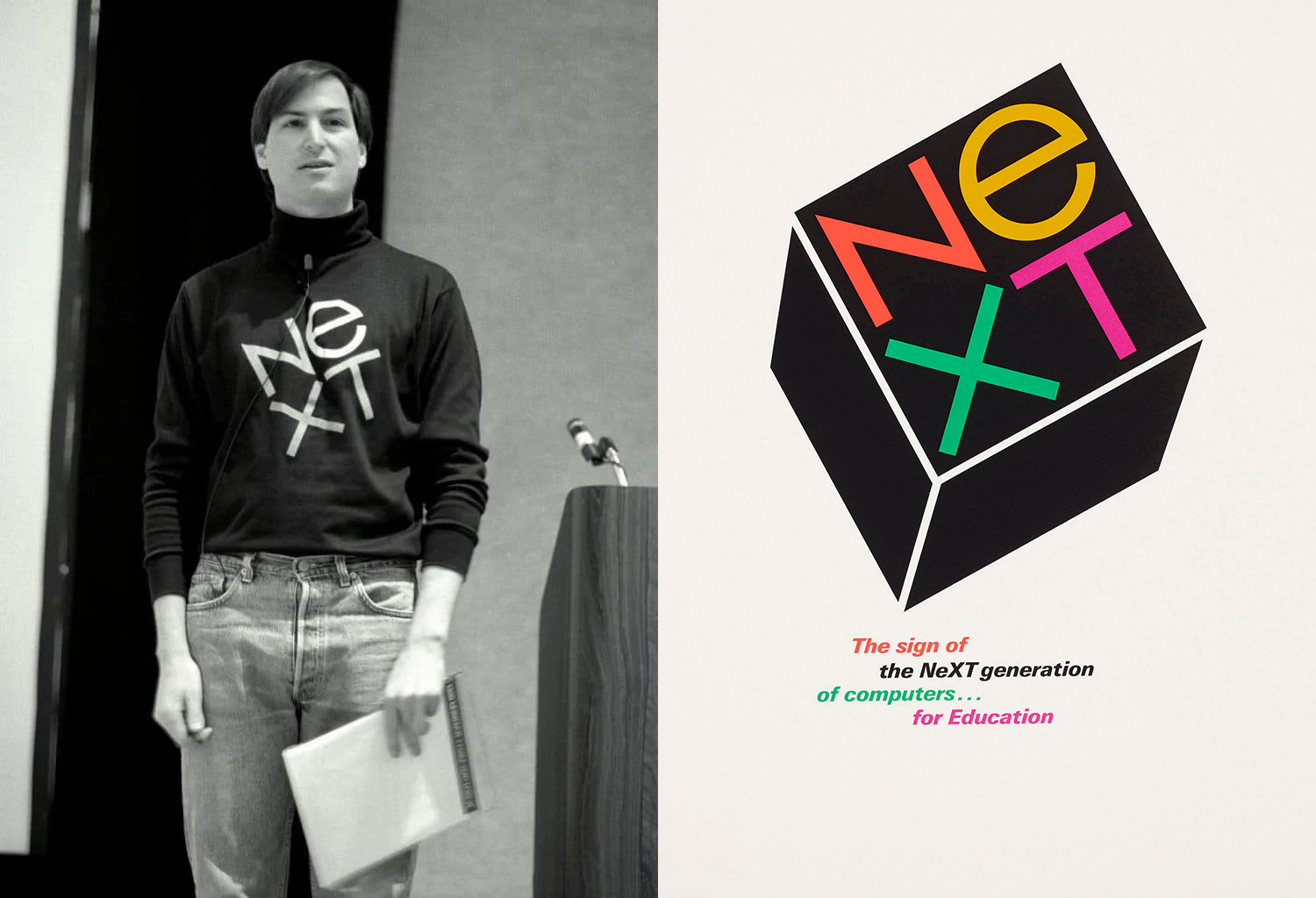

In the 1980s, Steve Jobs famously referred to the Macintosh as a bicycle for the mind, emphasizing its role in amplifying human potential and productivity. This vision framed the Macintosh as more than just a computer: a versatile tool designed to cater to specific user needs. Over the past four decades, its role has changed significantly, evolving from a work-centric device into a flexible platform for productivity, creativity, and entertainment. In the late 1990s and early 2000s, the rise of the internet and multimedia technologies positioned computers at the center of entertainment, enabling activities like gaming, music streaming, and video playback. Then, with the introduction of the iPhone in 2007, mobile devices expanded the entertainment landscape, pushing computers to concentrate on more advanced productivity applications. Each shift redefined the computer’s purpose, adapting it to the changing needs of its users and making computing central to both work and leisure.

But what’s the NeXT step?

Redefining Design Through Spatial Enablers

The leap from iOS to visionOS reflects a profound philosophical shift, redefining how we bridge the digital and physical realms.

Apple Vision Pro is a spatial computer that blends digital content and apps into your physical space, and lets you navigate using your eyes, hands, and voice.

Source: Apple Vision Pro User Guide

iOS introduced dematerialization—simplifying real-world processes like navigation, communication, and shopping into flat, intuitive interfaces. This abstraction removed physicality, prioritizing accessibility and convenience over spatial context. In contrast, visionOS acts as a materializer, reintroducing spatiality into digital interactions. By creating dynamic, 3D entities that coexist with our physical environments, visionOS enables a more intuitive and immersive connection to technology. These spatial objects are freed from weight, gravity, or collision constraints, yet they offer functionality and purpose that feel real and present.

| Aspect | iOS (Dematerializer) | visionOS (Materializer) |
|---|---|---|
| Design Philosophy | Prioritizes minimalism and efficiency; reduces physical complexity into flat, navigable systems. | Prioritizes embodiment and spatial awareness; creates objects that coexist cognitively with physical reality. |
| Examples | Contact list instead of a phonebook; app-based compass; online shopping carts. | Duplicated archaeological sites; virtual surgery tools; interactive exercise machines; 3D design prototypes. |
| Nature of Interaction | Flat, screen-based interaction; processes are contained within a 2D interface. | Immersive, spatial interaction; tools and processes occupy and respond to the surrounding 3D space. |
| Philosophical Approach | Translates physical processes into digital interfaces, simplifying and abstracting reality. | Transforms digital processes into spatial objects, blending the advantages of real-world tools with digital freedom. |
| Physical Constraints | Removes physicality entirely; tools lose their spatial and tactile context. | Retains functionality while freeing tools from weight, gravity, collision, and physical permanence. |
| User Experience | Abstract and symbolic; focuses on accessibility and convenience. | Concrete and spatial; focuses on immersion and enhanced interaction with the digital environment. |

Designing such spatial entities demands an architectural mindset.

Just as architects consider how spaces shape human experience, spatial app designers must think beyond isolated functions to create cohesive, responsive environments. This calls for a fusion of utility, aesthetics, and personalization, ensuring that spatial entities serve practical purposes and enhance our relationship with the surrounding world.

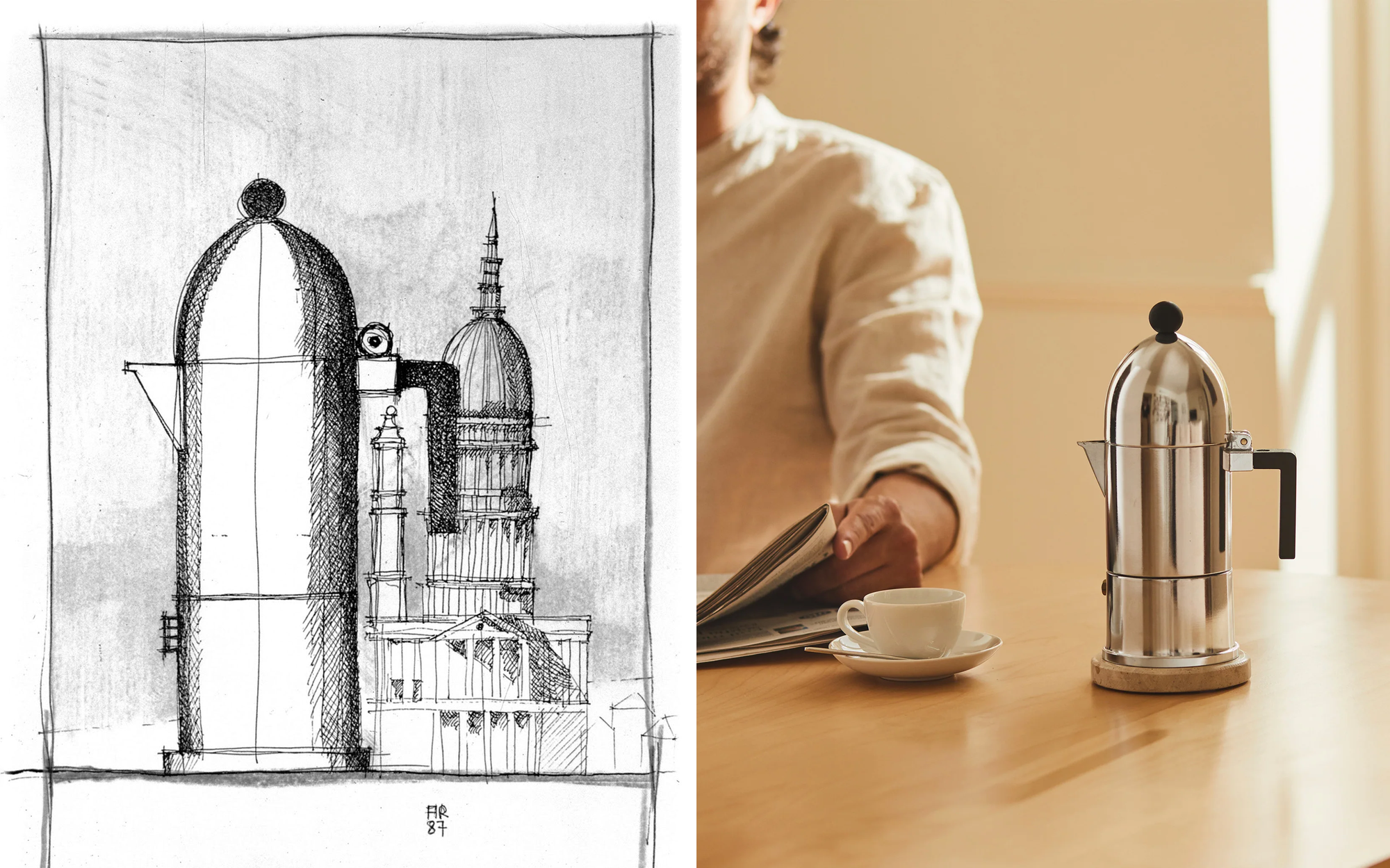

Moreover, the work of architects such as Pritzker Prize winner Aldo Rossi provides critical insights, thanks to his ability to blend formal rigor with a profound understanding of human experience. Rossi’s architectural theory, articulated in The Architecture of the City (1966), emphasizes the importance of collective memory and of creating spaces that respond to individuals’ social and cultural needs. This philosophy aligns with the principles required to teach Spatial Computing, where students must learn not only to design digital environments but also to grasp how these environments can dynamically interact with users’ cognitive and social contexts.

One of Rossi’s most significant architectural contributions was his Ideal City concept, a modular urban model that balanced rigidity and flexibility. This principle resonates strongly with Spatial Computing design. Just as Rossi envisioned a city where structures adapt to changing social needs, spatial applications must be designed not as static UI elements but as adaptive entities that respond to user behavior, environment, and interaction patterns. His approach teaches us that designing digital spaces is not merely about technical execution but also about creating environments that feel organic, intuitive, and deeply connected to human presence.

A tangible example of Rossi’s philosophy in practice is La Cupola, a domestic object inspired by the dome of San Gaudenzio in Novara, designed for Alessi. While it may seem distant from architectural theory, La Cupola exemplifies spatial reinterpretation—a classical form reimagined into a contemporary, functional object. Just as Rossi seamlessly merged tradition with innovation in his design of La Cupola, spatial computing education should foster a dynamic, adaptable mindset in developers, encouraging them to think beyond the conventional and embrace the fluidity of spatial design in both function and form. Materializing digital objects and processes in space allows us to interact with them more intuitively, fostering a closer connection between the digital and physical worlds.

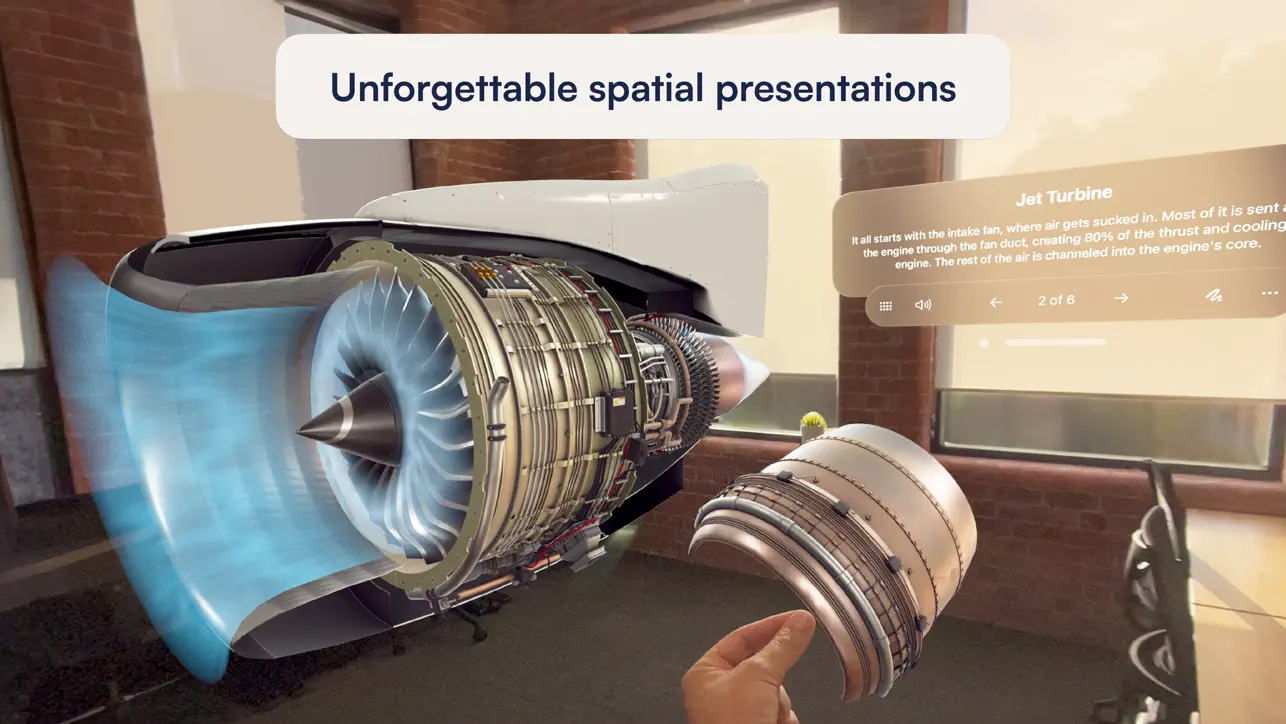

If iOS apps primarily engage users through buttons, sliders, switches, and typography, what do visionOS apps bring into the interaction landscape?

In traditional UX/UI design for apps, development teams focus on translating user-centered strategies into interfaces confined to a screen. Apps typically serve one of two purposes: they either provide a digital service, such as online shopping with Amazon, or function as tools, like Apple Pay. Both are bound by their two-dimensional, screen-based constraints.

In contrast, spatial apps redefine this paradigm by embedding entities directly into the user’s physical and cognitive space. Which domain usually explores how to design for space?

Spatial apps introduce entities into the user’s environment, so their design process cannot ignore the lens of the architect, the interior designer, and the urbanist. Just as an architect crafts living spaces or an interior designer curates furniture and objects to enhance daily life, spatial apps follow a similar principle.

They are designed not merely as tools but as adaptive, functional elements that enrich users’ interaction with their surroundings. These apps must be conceived as integral components of a cohesive spatial experience, blending utility, aesthetics, and personalization to improve the quality of the user’s digital and physical environment. This approach transforms spatial app design into a discipline that parallels the thoughtful creation of habitable, meaningful spaces. Traditional apps function independently of the user’s context, serving as isolated tools. Spatial apps, on the other hand, adapt dynamically to the user’s actions, adjusting to their environment and intuitively connecting with their awareness. This marks a shift from design focused on devices to interaction centered on humans.

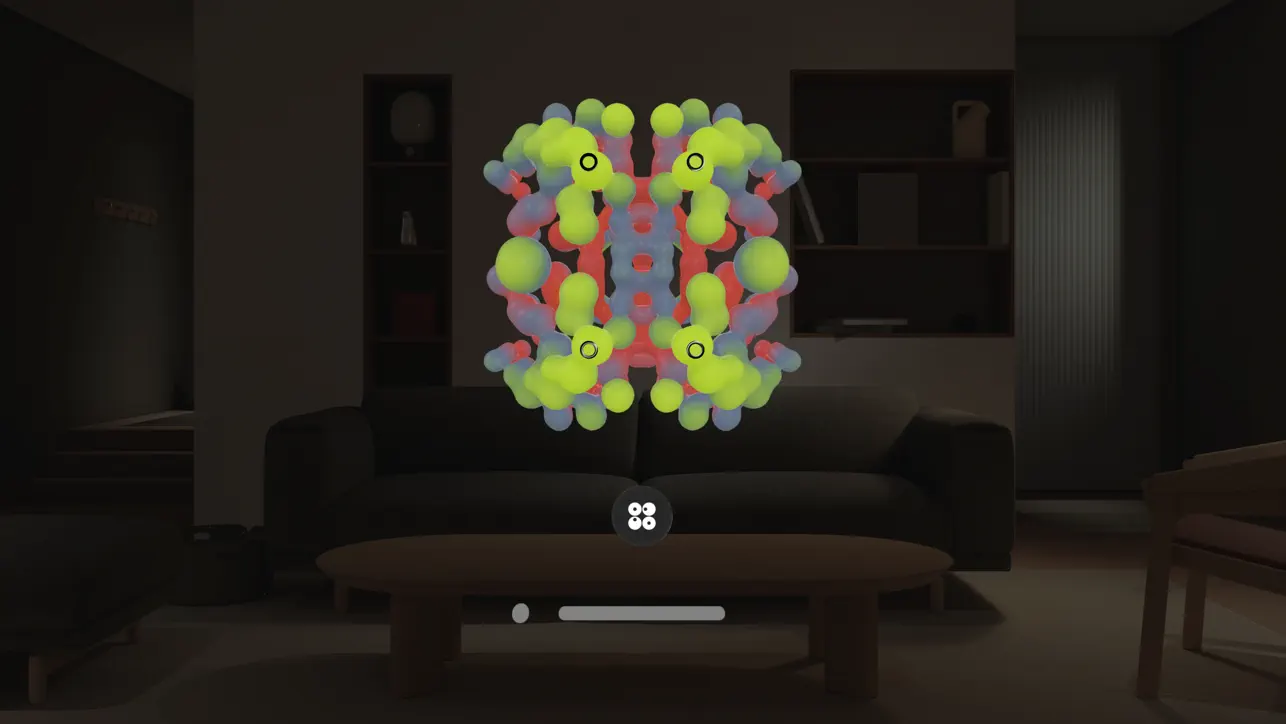

Over the past year of observing spatial apps, specific defining characteristics of spatial entities have become evident. In the Human Interface Guidelines, Apple presents the interactive content of visionOS in a particular way.

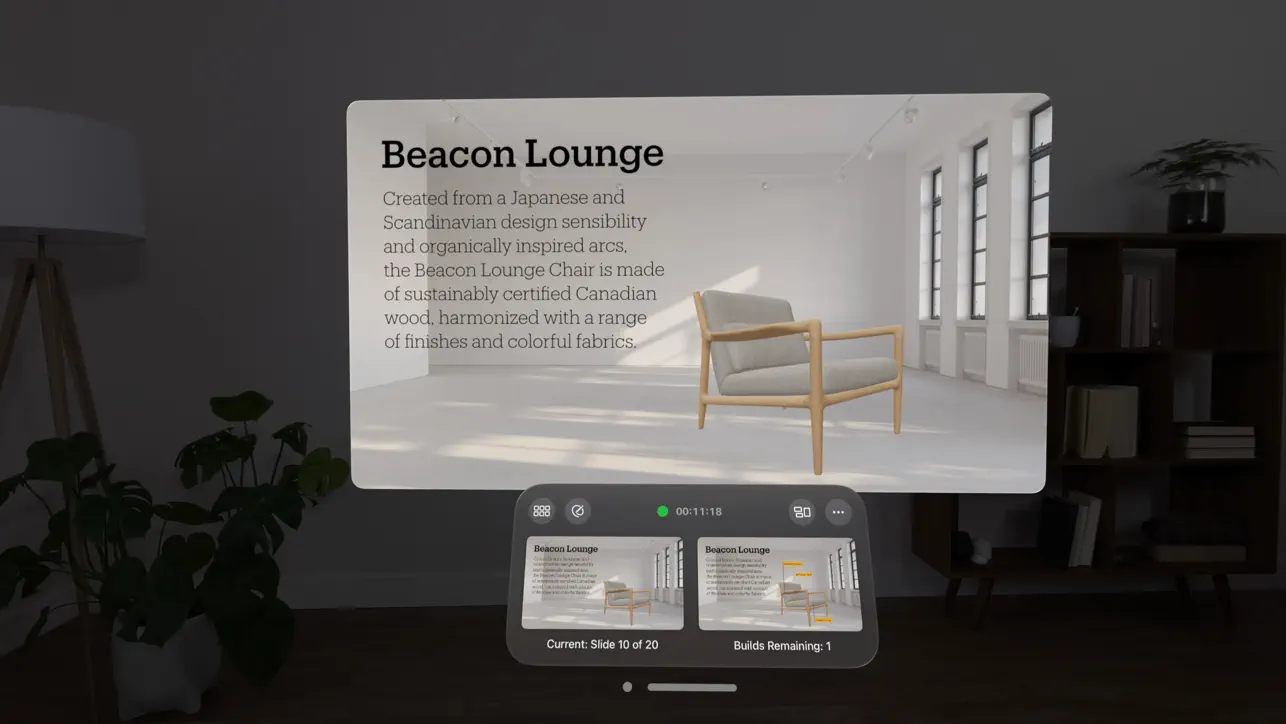

Apple Vision Pro offers a limitless canvas where people can view virtual content like windows, volumes, and 3D objects and choose to enter deeply immersive experiences that can transport them to different places.

Source: Human Interface Guidelines, Apple.com

But is this approach sufficient for a comprehensive understanding, or could we offer an alternative interpretation? Let’s analyze the most evident features of what we will call spatial entities.

Personal and Individual Relationship. Spatial entities exist within a private, personalized dimension, meaningful solely within the cognitive and visual space of the individual user. This intrinsic characteristic distinguishes them from shared objects, emphasizing a profoundly personal interaction.

Orbital Meaning and Dynamic Nature. These entities are inherently dynamic, orbiting the user in response to context and needs. Their movement and interaction mimic celestial bodies, symbolizing their adaptability to environmental, cognitive, and operational stimuli.

Functional and Symbolic Duality. Spatial entities are more than mere tools; they embody symbolic meanings, manifesting purpose and intention only through user engagement. This dual nature enhances their role in creating meaningful experiences.

Advanced Spatial Awareness. By leveraging AI and facial tracking, spatial objects interpret the user’s environment and intentions in real-time. This creates a highly adaptive and anticipatory interaction that aligns with the user’s needs.

Non-Intrusive Interaction. Spatial entities integrate effortlessly into the user’s cognitive space, maintaining a discreet yet impactful presence. They avoid overwhelming the user while delivering significant functionality.

Inter-Object Relationships. How spatial entities interact in multi-object environments—collaboratively or competitively—determines the user experience. Designing harmonious dynamics is essential to prevent cognitive overload.

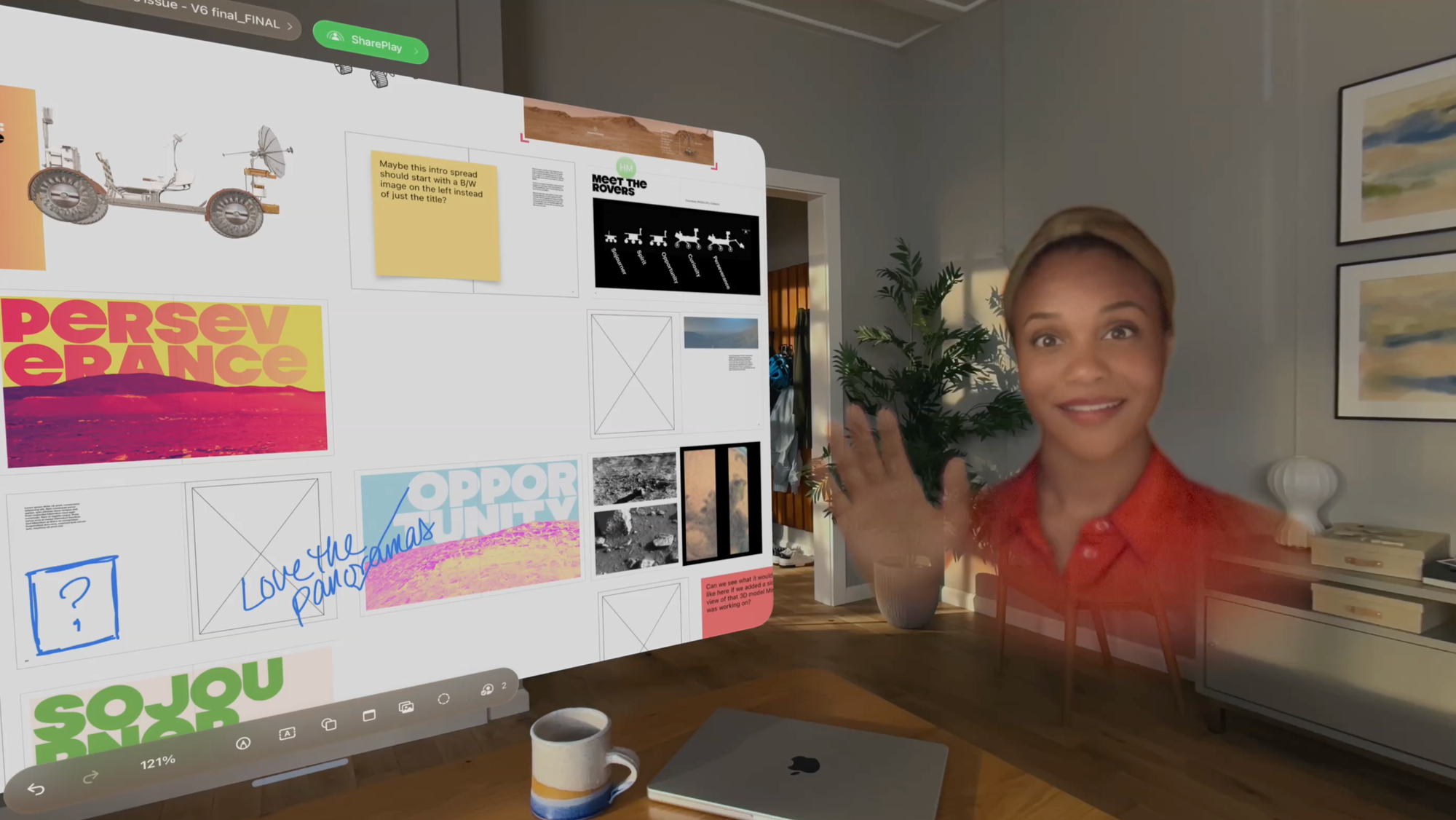

Social. Though inherently personal, spatial objects could carry broader social implications through shared experiences or connections, opening possibilities for collective engagement.

These virtual entities reside within the user’s cognitive and visual space, revolving around them like satellites. Unlike traditional apps confined to screens, spatial entities interact dynamically with users in a three-dimensional setting. They are intangible yet cognitively real, influencing the user’s mental models without needing physical touch. Unlike traditional tools or robots, spatial entities are collaborative partners by consent: they respond to user input, acting as extensions of the user’s intentions rather than independent agents.

A Spatial Object is a virtual, intangible entity that:

- Facilitates a spatial or cognitive experience otherwise inaccessible.

- Adapts its behavior to the user’s context, whether environmental or cognitive.

- Responds to commands without taking independent actions, respecting the user’s authority and control.

- Replaces physical limits by offering intangible representations that only exist in the user’s mind.

- Supports universal accessibility, offering personalized interaction modes for users with different physical and cognitive abilities.
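To make the definition above more concrete, here is a minimal sketch in plain Swift of the contract it implies: context may change how an object presents itself, but only an explicit user command may change whether it is present. The names `UserContext`, `UserCommand`, and `SpatialObject` are hypothetical and chosen for illustration; they are not types from visionOS, SwiftUI, or RealityKit, and a real app would derive context and commands from the system's input and scene APIs.

```swift
// Hypothetical types for illustration; none of them come from Apple's SDKs.

/// A deliberately tiny stand-in for the user's environmental or cognitive context.
struct UserContext {
    var isFocusedElsewhere: Bool
}

/// Explicit user intent. In a real app this could originate from gaze, hands, or voice.
enum UserCommand {
    case show
    case dismiss
}

struct SpatialObject {
    /// Changed only by `handle(_:)`, so the object never appears or disappears on its own.
    private(set) var isVisible = false
    /// Changed only by `adapt(to:)`, so context can soften the object but not summon it.
    private(set) var opacity = 1.0

    /// Adapts presentation to the user's context without taking an action of its own.
    mutating func adapt(to context: UserContext) {
        opacity = context.isFocusedElsewhere ? 0.3 : 1.0
    }

    /// Responds to a user command; this is the only path that changes visibility.
    mutating func handle(_ command: UserCommand) {
        switch command {
        case .show:
            isVisible = true
        case .dismiss:
            isVisible = false
        }
    }
}

var panel = SpatialObject()
panel.adapt(to: UserContext(isFocusedElsewhere: true))
print(panel.isVisible, panel.opacity)   // context alone did not make the panel appear
panel.handle(.show)
print(panel.isVisible, panel.opacity)   // the user's command did
```

Separating `adapt(to:)` from `handle(_:)` is one possible way to encode "responds to commands without taking independent actions": adaptation is limited to presentation, while presence stays under the user's authority.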

The concept of a spatial entity signifies a paradigm shift in the design of digital interactions.

Traditional iOS app design often centers on creating “UX/UI solutions to provide a specific service or tool (X) to a defined group of users (Y) on a flat display.” This approach positions applications as isolated utilities or tools.

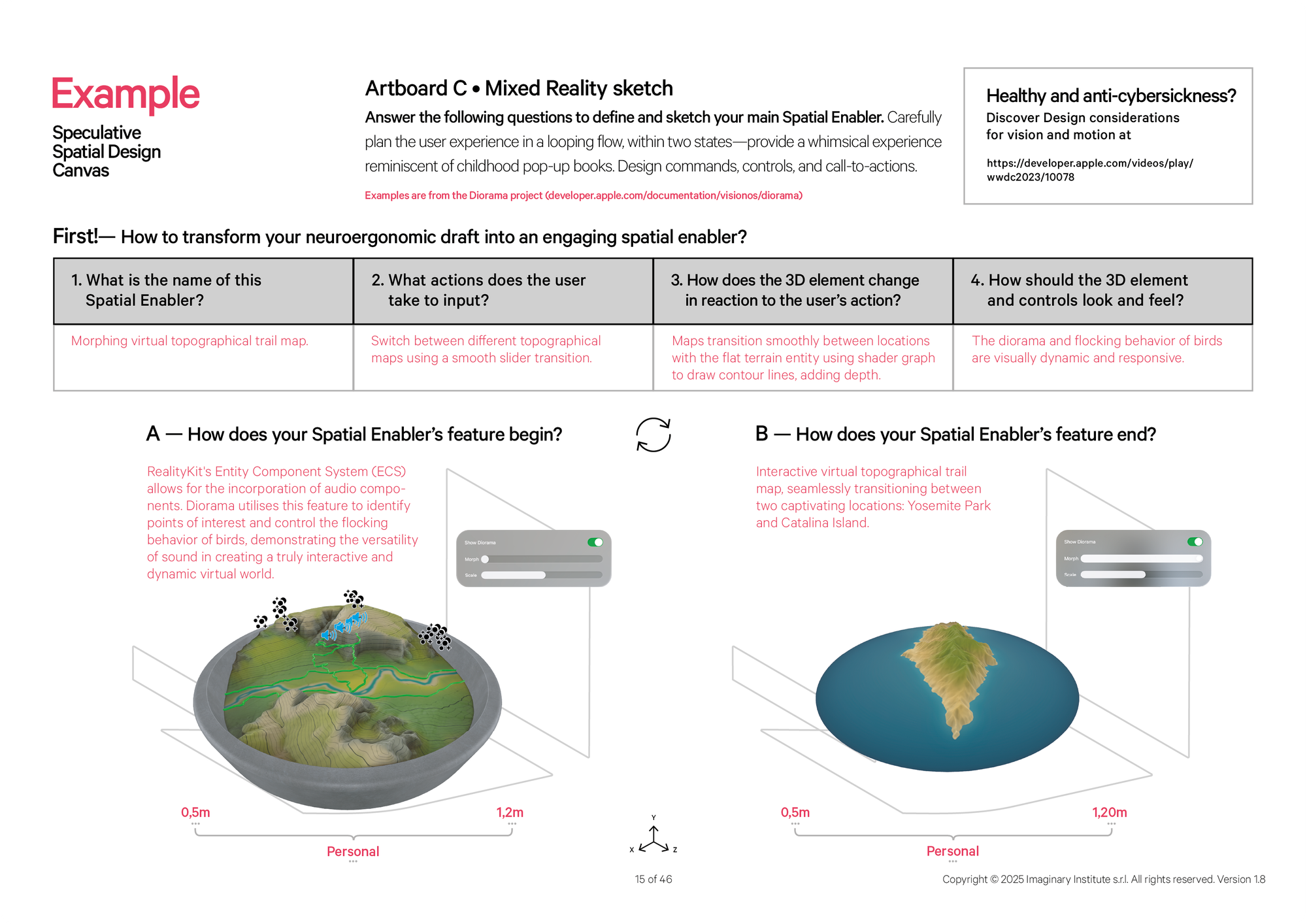

In contrast, visionOS shifts the design prompt to: “Create X Spatial Enablers that assist and support Y users in performing Z tasks within their physical environment.”

This reframing highlights the fluidity and adaptability of spatial experiences, where digital elements are embedded into the user’s surroundings and adjust to their unique context and needs.
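As a small illustration, the X/Y/Z prompt can be treated as a fill-in template that a team completes before any interface work begins. The sketch below is plain Swift; the `SpatialDesignBrief` type and the sample values are hypothetical, chosen only to show the three slots being filled.

```swift
/// Hypothetical helper that fills the visionOS design prompt quoted above.
struct SpatialDesignBrief {
    let enablers: String   // X: what kind of Spatial Enablers
    let users: String      // Y: who they assist
    let tasks: String      // Z: what those users are trying to do

    /// The completed prompt, worded as in the article.
    var prompt: String {
        "Create \(enablers) Spatial Enablers that assist and support \(users) users "
            + "in performing \(tasks) tasks within their physical environment."
    }
}

// Illustrative values only.
let brief = SpatialDesignBrief(
    enablers: "anatomy-overlay",
    users: "surgical",
    tasks: "pre-operative planning"
)
print(brief.prompt)
```

Keeping the task (Z) and the physical environment in the brief itself is the point of the reframing: neither appears in the iOS prompt, which names only a service or tool and a group of users.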

| Aspect | iOS Apps (Traditional) | Spatial Enablers |
|---|---|---|
| Design Approach | UX/UI solutions to provide a specific service or tool (X) to a defined group of users (Y) on a flat display | Create (X) Spatial Enablers that assist and support (Y) users in performing (Z) tasks within their physical environment |
| Interaction Medium | Buttons, sliders, switches, and touch gestures on a 2D screen | Gestures, gaze, voice, and dynamic spatial interaction in 3D space |
| Context Awareness | Limited; apps function in isolation, independent of the user's surroundings | High; adapts dynamically to the user’s physical and cognitive context |
| User Relationship | Tool-focused; user interacts with pre-defined interfaces | Collaborative; user co-creates with objects that respond and adapt |
| Environment Dependency | Confined to the screen of a device | Integrated into the user's physical and cognitive space |
| Functionality | Predetermined and static | Dynamic, personalized, and evolving over time |
| Visual Presence | Flat and screen-bound | Immersive and orbital, existing in the user’s spatial surroundings |
| Symbolic Meaning | Minimal; primarily utilitarian | Dual; serves both functional and symbolic purposes |
| Accessibility | Device-centric, requiring specific hardware interaction | User-centric, designed for inclusivity and universal adaptability |

The principles of architecture (understanding space, human interaction, context, and dynamic environments) are highly relevant to designing spatial experiences for Apple Vision Pro. Architects bring a comprehensive perspective to the table, enabling the creation of purposeful and functional immersive experiences.

We can now assert that if iOS apps primarily engage users through buttons, sliders, switches, and typography, then visionOS apps consist of Spatial Enablers that allow users to perform tasks. But what impact does this new reality have on users’ minds?

A Spatial Shift Driven by Cognitive Psychology and Neuroscience

Philosophically, the idea of spatial truth extends beyond mere perception. In contexts such as surgery, the virtual representation of a patient’s veins on a mixed-reality interface can influence a surgeon’s emotional state, impacting their confidence and decision-making. Although not grounded in physical reality, this virtual truth can affect a practitioner’s performance.

Just as a surgeon may feel more confident about their decisions due to these enhanced representations, similar psychological effects can emerge in any situation involving spatial interaction. The brain, accustomed to interpreting information from our physical environment, adapts its expectations and trust based on what it perceives as spatially accurate, even if that truth is not rooted in the physical world.

From a psychological and neuroscience viewpoint, traditional interfaces have been flat, two-dimensional, and mainly tactile, influenced by the prominence of the smartphone’s touch screen. In contrast, spatial computing directly integrates into the user’s physical space. With devices like headsets that are worn rather than held, the device becomes invisible, fundamentally changing the nature of the interaction. This shift introduces complexity to the user experience, as interactions must consider spatial awareness, three-dimensional engagement, and seamless integration into the user’s environment.

This challenge arises from several factors:

- Established Mental Models. Developers and designers are used to creating interfaces that work on a flat, touchscreen surface. Moving to 3D spaces, where interactions go beyond taps and swipes to include gestures, eye movements, and spatial positioning, requires new ways of thinking and designing.

- Context Ambiguity. Unlike smartphones, which are stand-alone devices in the user’s hands, headsets like the Apple Vision Pro interact with the user’s environment and body. This makes spatial apps part of a larger dynamic ecosystem that must adapt to changes in the user’s surroundings. Interactions are less immediate and more abstract without a physical, tactile interface. Designing for invisible inputs, such as eye tracking, voice commands, and gestures, presents significant challenges.

- Balancing Immersion with Cognitive Load. Spatial applications, particularly in fields like medicine, must find a middle ground between presenting complex data and not overwhelming the user. Integrating virtual elements seamlessly should provide clarity without adding to cognitive load.

The concept of presence in extended reality is rooted in the brain’s cognitive and perceptual mechanisms: indeed, the brain reacts to spatial stimuli as if they were physically present. This level of engagement brings a new dimension to user interaction, evoking emotional responses like unease, fear, or excitement from virtual entities. Immersive environments are ripe with psychological triggers that evoke specific responses. Unlike traditional 2D interfaces, where interactions are confined to screens, visionOS engages users’ entire sensory and cognitive systems.

The Eyes and Social Challenges. The gaze is a powerful social signal. Eye contact, or its absence, plays a fundamental role in human interaction. In his exploration of the language of madness, Sergio Piro discussed how the eyes reflect complex social dynamics, especially in contexts of disconnection. In visionOS, virtual avatars, objects, or environments that fail to simulate human-like eye behavior can provoke discomfort or alienation. For instance, an object in the user’s field of view that “stares” without context might trigger unease, as people are hardwired to interpret gaze direction as socially meaningful.

The Arrival of Masks: Piaget’s Child Psychology. Jean Piaget’s work on child development sheds light on how sudden, unexpected changes in familiar environments—such as the abrupt appearance of masks—can unsettle perception. A child encountering a masked figure often struggles to reconcile the familiar (a human face) with the unfamiliar (the mask). Similarly, in visionOS, when virtual objects or avatars appear without a natural progression, users may experience psychological dissonance. This disrupts the experience, breaking the cognitive flow required for immersive environments.

Anxiety and Existential Reactions. The immersive nature of visionOS can evoke existential anxieties tied to human instincts and perception. In this context, anxiety is not limited to fear but extends to anticipating threats or disruptions in the user’s spatial or social environment.

Survival Instinct. The instinct to avoid predation is deeply embedded in human psychology. A stationary spider, for instance, might provoke a mild sense of unease, but a spider in motion often triggers a heightened sense of alertness or fear. In visionOS, similar triggers can emerge when objects in the user’s space move unexpectedly. An avatar or virtual entity that appears to “pursue” the user can unintentionally evoke primal responses, disrupting the intended interaction flow.

Being Watched. The sensation of being watched is another potent psychological trigger. A face that looks directly at the user without context can evoke discomfort, a phenomenon deeply rooted in social psychology. This sensation is amplified in immersive environments, where users feel as though they are physically present. Designers of visionOS applications must account for this, ensuring virtual entities engage with users naturally and non-intrusively.

Understanding the psychological dimensions of visionOS underscores the need for a comprehensive educational approach.

The complexity of crafting immersive and human-centered experiences goes beyond technical proficiency; it demands a deep understanding of human perception, cognitive responses, and instinctual triggers. This intersection of technology and psychology highlights the necessity for a new framework that equips developers with the tools to design functional and meaningful spatial applications. The Spatial Computing Education Matrix addresses this need, offering a roadmap for integrating technical skills, human factors, and platform-specific innovations into a cohesive learning process. By fostering interdisciplinary expertise, the matrix empowers developers to navigate the nuanced challenges and push the boundaries of what visionOS can achieve.

The Spatial Computing Education Matrix

As we reflect on the transformative potential of visionOS and Spatial Computing, four pivotal challenges must be addressed to advance educational outreach and empower the next generation of developers. These challenges are not isolated hurdles but interconnected opportunities that define the evolution of this paradigm.

- Bridging the Gap in Flat Thinking Among Developers.

Developers must transition from traditional, two-dimensional design thinking to embracing spatial cognition and immersive environments. This leap requires a reimagining of core design and development principles.

- Advancing Beyond Interfaces: Crafting Spatial Apps.

Spatial apps demand a departure from static interfaces to dynamic, adaptive systems that respond fluidly to users and their environments. This requires creativity, technical depth, and an understanding of user-centric design.

- Acquiring Skills in System Architecture and Production.

To support the complexity of Spatial Computing, developers need proficiency in large-scale system design, real-time rendering, and interdisciplinary production workflows. These skills are essential for delivering seamless and impactful spatial experiences.

- Pursuing Ongoing Personal Development and Intellectual Growth.

Professional andragogy is essential to spatial computing education. It nurtures curiosity, open-mindedness, and engagement with diverse perspectives. It also cultivates focus and insight, transforming ideas into actionable, user-centric solutions.

The matrix provides actionable pathways for each challenge, emphasizing exploration through tools like cognitive psychology, architectural principles, and Apple frameworks. Converging these insights into practical applications equips learners to craft meaningful, user-centered spatial experiences. This framework represents more than a technical guide—it’s a call to embrace the interconnected disciplines shaping Spatial Computing. As we integrate technical, cognitive, and creative expertise, we move closer to realizing the full potential of visionOS.

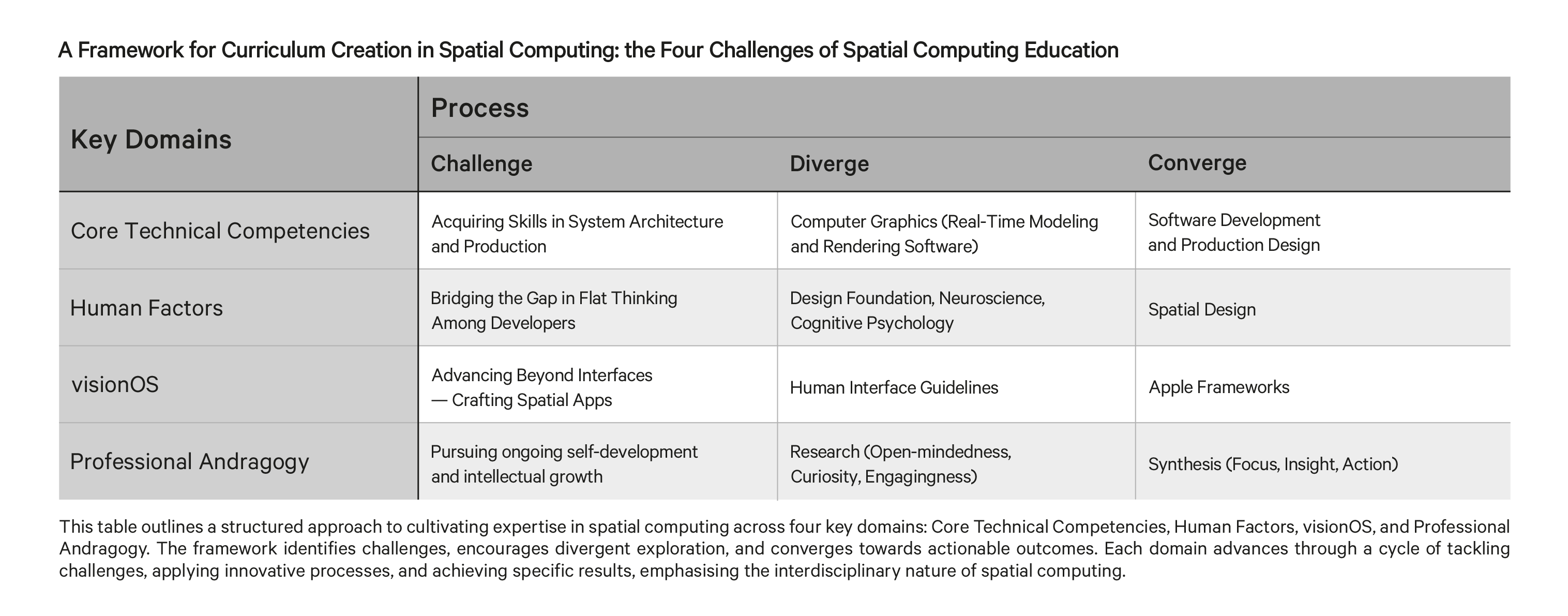

The Spatial Computing Education Matrix: A Blueprint for the Future provides a structured framework for addressing the educational hurdles in this field. It organizes them into four key domains: Core Technical Competencies, Human Factors, visionOS, and Personal Growth. Each domain identifies a specific challenge, explores a divergence strategy for broad exploration, and concludes with a convergence solution aimed at practical application.

The first domain, Core Technical Competencies, highlights the need to acquire foundational skills in system architecture and production, which are crucial for spatial computing. We encourage using computer graphics, real-time modeling, and rendering software to tackle this challenge. These exploratory tools are then translated into actionable outcomes in software development and production design, enabling a deeper technical understanding of spatial systems.

Human Factors focuses on the gap in traditional, two-dimensional thinking that many developers bring to spatial computing. To overcome this, the framework emphasizes integrating insights from design foundations, neuroscience, and cognitive psychology. These disciplines provide a richer understanding of how spatial interactions impact user experience. The ultimate goal is to transform this knowledge into practical spatial design solutions that enhance user engagement and interaction within immersive environments.

The visionOS domain shifts attention toward advancing beyond conventional interfaces. Here, the focus is on designing spatial apps that are immersive, context-aware, and aligned with the capabilities of visionOS. This process begins by applying human interface guidelines to explore innovative app design. It culminates in leveraging Apple’s frameworks, including Apple Intelligence, to effectively bring these applications into the ecosystem.

The Personal Growth domain concerns the essential role of Professional Andragogy in spatial computing development. Traditional app developers, particularly those working on front-end solutions like user interfaces, often operate with only a surface-level understanding of the industry they serve, whether medical, engineering, or any other sector. This is because other professionals, such as product owners or domain experts, are responsible for bridging the gap between technical execution and contextual relevance. Developers focus on their craft, leaving the heavy lifting of domain-specific knowledge to others. However, this approach is no longer sufficient in visionOS, since developers must create experiences that integrate seamlessly into the user’s environment and resonate deeply with the application’s context. To achieve this, they must immerse themselves in their domain, adopt an interdisciplinary mindset, and be willing to step outside their comfort zone. As highlighted in Hollnagel’s exploration of Decision-Centered Design, modern systems must prioritize human cognitive and perceptual activities to support decision-making in dynamic environments (Erik Hollnagel. Handbook of Cognitive Task Design. (2003). United States: CRC Press. p. 388). This resonates with the demands of Spatial Computing, which requires systems to adapt seamlessly to users’ cognitive needs. Collaborating with domain experts or taking courses in relevant fields is necessary to create a purposeful learning experience.

For instance, a developer working on surgical applications for visionOS cannot rely solely on input from medical professionals. They must, in part, become students of medicine: learning about surgical workflows, understanding the cognitive load of a surgeon, and appreciating the nuances of medical environments. This goes beyond technical competence; it requires intellectual curiosity and a readiness to tackle challenging, unfamiliar subjects.

Lastly, Apple’s educational initiatives have long embraced Challenge-Based Learning (CBL), a structured approach that guides learners through challenges with clearly defined objectives. When considering the unique demands of spatial computing, it’s worth reflecting on how this method can address the needs of learners engaging in such a complex and interdisciplinary field. CBL’s strengths lie in its ability to frame learning around real-world challenges, fostering problem-solving and collaboration. Spatial Computing is open-ended: solutions are not easily defined, and the field itself is still evolving. CBL suits this as well, because its open-ended framing of challenges accommodates ambiguity and creative exploration.

The balance between guided objectives and opportunities for self-directed inquiry becomes crucial for learners, who often bring diverse backgrounds and professional experiences. Spatial Computing’s reliance on fields as varied as architecture, cognitive psychology, and design suggests that a flexible and exploratory approach is required. While many educational frameworks are available, it is important to understand how well they meet the demands of such a dynamic domain. As universities and institutions navigate the complexities of Spatial Computing in their curricula, they should explore how adjustments to existing learning methodologies can help strike a balance between structure and freedom.

While no definitive answers exist, this reflection underscores the need to continually adapt teaching strategies to align with pedagogical principles and emerging technology requirements.

Conclusions

Spatial Computing, epitomized by visionOS and the Apple Vision Pro, is more than a technological milestone—it’s a redefinition of how we interact with and design for the digital world. It challenges traditional paradigms of flat interfaces, introducing dynamic, spatially aware entities that adapt to and enhance human environments.

However, realizing this vision requires more than innovative tools; it demands a revolution in education. Developers must master not only technical skills but also the cognitive and creative disciplines underpinning meaningful spatial design. Given its inherent complexity and interdisciplinary demands, education in this field requires maturity and professional experience that extend beyond traditional learning paradigms. This field does not merely challenge students; it fundamentally redefines the role of educators and their institutions, demanding that they evolve to meet the needs of this transformative technology and adapt the learning experience in schools, universities, and research innovation hubs.

It is not students but educators who must first grasp and master this new paradigm. Without adequately preparing instructors to teach the principles, any attempt to educate students is destined for chaos. Just as Extended Reality transcends conventional app development by immersing itself in the specific domains it serves, educators must also immerse themselves in this emerging field before effectively guiding their students.

We must equip teachers with the tools, knowledge, and confidence to navigate this paradigm shift successfully. Educators must unite, share their knowledge, and build a foundation for personal and professional growth. This involves teaching new skills and cultivating a culture of interdisciplinary learning and mutual empowerment.

To echo the wisdom of Seneca: Docendo discimus (by teaching, we learn).