The Touch Evolution: How Gestures Shape Our Digital Worlds

Explore how gestures have changed the way people interact with the digital world.

Gestures have become an integral part of our daily routines, enabling us to interact with digital environments more intuitively and naturally. This shift from traditional button-based inputs to fluid gesture-driven interfaces has significantly enhanced the user experience, simplifying complex commands and streamlining navigation.

This transformation of Human-Computer Interaction (HCI) is reshaping how users engage with digital systems and aligns with the HCI goal of creating more human-centered interfaces.

As a leader in HCI innovation, Apple is a useful case study for understanding how gestures work and how to apply them well, as it continues to expand gesture-based interaction across its product range. Each device offers unique gestural interactions that optimize functionality and user engagement.

This kind of integration by developers and companies highlights a commitment to making technology more accessible and user-friendly, in line with the broader goal of creating interfaces that fit seamlessly into daily life.

Gestures in everyday life

The concept of gestures is deeply rooted in human communication, serving as one of the earliest and most natural means of expressing thoughts and emotions. From the spontaneous gestures of a newborn reaching out to its parents to the fully developed sign languages used for everyday conversation, gestures have always been a fundamental part of human interaction.

With technology's rapid evolution over the last three decades, gestural communication has undergone a significant transformation. It has progressed from being only a form of human-to-human communication to also encompassing interactions between humans and digital devices.

An early and widely cited formal definition of gestures was given by Kurtenbach and Hulteen in "Gestures in Human-Computer Communication" (1990):

"A gesture is a motion of the body that contains information. Waving goodbye is a gesture. Pressing a key on a keyboard is not a gesture because the motion of a finger on its way to hitting a key is neither observed nor significant. All that matters is which key was pressed."

Gestural communication between humans and devices is rooted in human-to-human communication. By closely examining how people use gestures in everyday interactions, we can gain valuable insight into how this mode of communication has shaped and influenced human-machine interaction. Looking at how this way of communicating with digital devices has developed over time, we can identify some essential milestones, such as the transition from touch to multi-touch and the more recent arrival of air gestures.

From touch to multi-touch

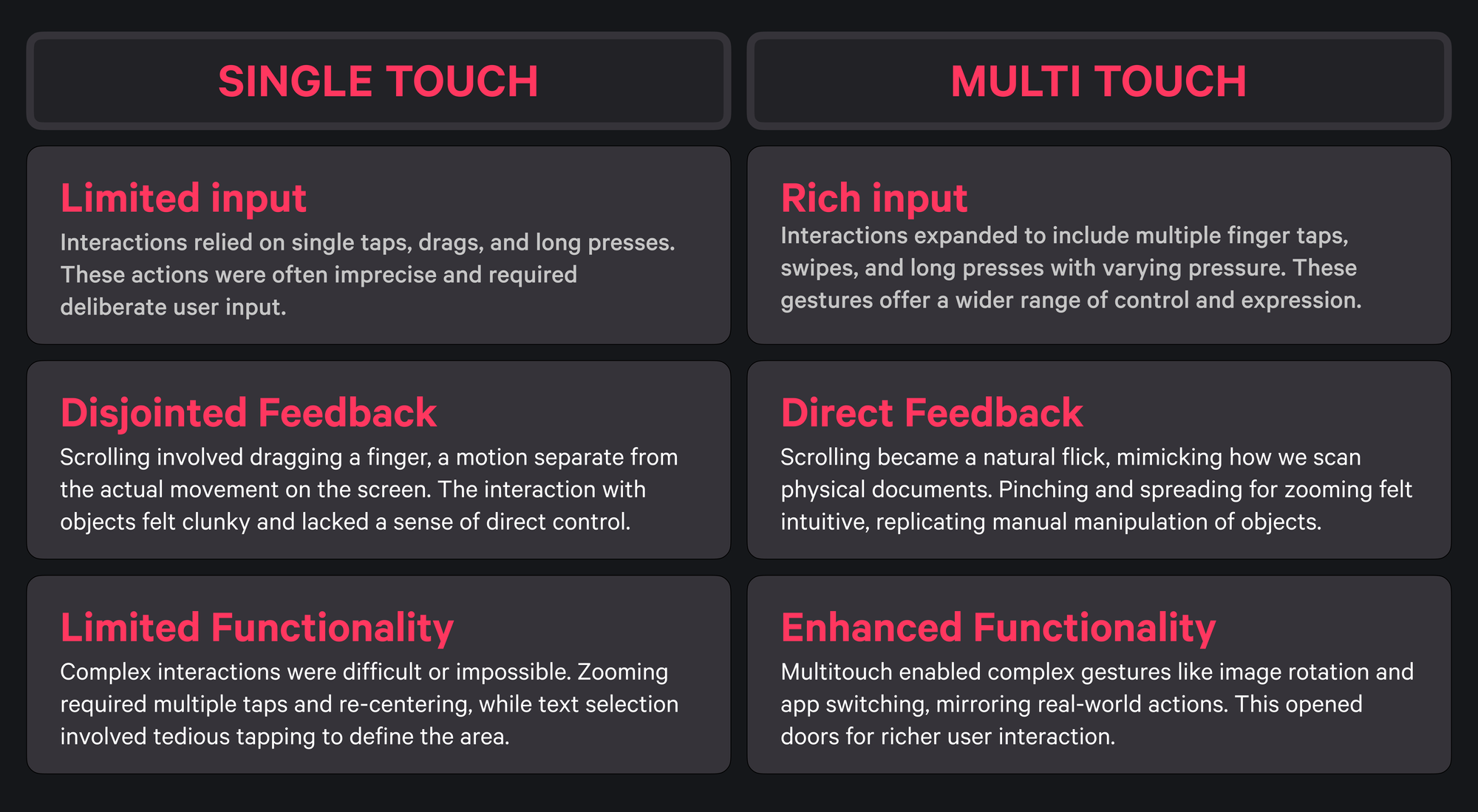

The transition from human gestures to technological applications began with the development of touch-sensitive devices. Many early consumer touchscreens used resistive technology, which registers the pressure of a finger or stylus on the screen. This approach was later eclipsed by capacitive technology, which relies on the electrical conductivity of the human body to detect touch.

This shift not only improved the responsiveness of touchscreens but also allowed for more subtle and intuitive interactions that are closer to natural human gestures. It also changed the way we interact with devices by making multi-touch practical on consumer devices: the screen can track several fingers at once. Multi-touch opened the door to complex gestures that enhanced the user experience, creating a sense of playfulness and delight in interacting with the device.

The main differences between single-touch and multi-touch interaction are expressed below:

These gestures imitate natural human motions, making digital interactions feel more intuitive and engaging. Apple's introduction of the iPhone was a landmark moment in the popularisation of multi-touch technology. It wasn't just a step forward in terms of technology; it showcased the potential of gestures to create interfaces that are not only intuitive but also deeply engaging, aligning closely with the natural ways humans move and communicate.
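To make the single-touch versus multi-touch distinction concrete: one touch yields only a position, while two simultaneous touches also yield a distance between fingers, which is what a pinch gesture measures. The following is a minimal, platform-neutral sketch in Python, not Apple's implementation. The function names `distance` and `pinch_scale` and the representation of a touch as an `(x, y)` tuple are assumptions made for illustration.

```python
import math
from typing import Tuple

Point = Tuple[float, float]  # (x, y) position of one finger, hypothetical representation


def distance(a: Point, b: Point) -> float:
    """Straight-line distance between two touch points."""
    return math.hypot(b[0] - a[0], b[1] - a[1])


def pinch_scale(start: Tuple[Point, Point], current: Tuple[Point, Point]) -> float:
    """Ratio of the current finger spread to the starting spread.

    A value above 1 means the fingers moved apart (zoom in),
    a value below 1 means they moved together (zoom out).
    """
    start_spread = distance(*start)
    if start_spread == 0:
        raise ValueError("starting touches must not coincide")
    return distance(*current) / start_spread


# Fingers start 100 units apart and end 200 units apart.
print(pinch_scale(((100, 100), (200, 100)), ((50, 100), (250, 100))))  # 2.0
```

A single-touch screen cannot produce this value at all, because it never reports a second point.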

Classification of Gestures

As we navigate through digital environments and engage with physical spaces, gestures serve as a channel for expression, manipulation, and exploration.

These interaction models, far from being just movements, are infused with purpose and meaning and vary widely in their function and impact.

Understanding the diversity of gestures and their specific applications not only enhances our interaction with technology but also enriches our communication within social contexts.

Researchers categorize human gestures into three types: ergotic, epistemic, and semiotic. Understanding this classification helps developers and designers build more intuitive and effective human-centered technologies that match natural human behaviors and preferences.

Ergotic Gestures: The Mechanics of Manipulation

Ergotic gestures are the workhorses of mobile interaction. They are the physical actions we use to directly manipulate digital objects on a screen. These gestures leverage the user's inherent motor skills to perform actions with minimal cognitive load.

Ergotic gestures include swiping left or right on a screen to navigate, tapping an icon to launch an application, pinching to zoom in/out on images for detailed inspection, and long pressing on an element to reveal contextual menus.

Ergotic Gestures

UX Considerations:

- Standardization is key: Maintaining consistency in ergotic gestures across different app sections and functionalities reduces the learning curve and creates a predictable user experience.
- Simplify the introduction of gestures: New or complex gestures might benefit from onboarding tutorials or subtle visual cues to guide users. This helps with gesture discoverability.
- Balance precision and speed: Recognition that is too sensitive triggers accidental actions, while recognition that is too strict misses intended gestures. Both lead to frustration.
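The trade-off between precision and speed usually comes down to a few thresholds. The sketch below shows one simple way a recognizer might tell a tap, a long press, and a swipe apart using only the first and last samples of a touch. It is an illustration in Python rather than any platform's actual recognizer: the `Touch` type, the `classify` function, and the default thresholds (10 points of allowed movement, 0.5 seconds for a long press) are assumptions chosen for the example.

```python
import math
from dataclasses import dataclass


@dataclass
class Touch:
    x: float  # horizontal position in points
    y: float  # vertical position in points (assumed to grow downward)
    t: float  # timestamp in seconds


def classify(start: Touch, end: Touch,
             move_slop: float = 10.0,
             long_press_s: float = 0.5) -> str:
    """Label a single-finger touch from its first and last samples."""
    dx, dy = end.x - start.x, end.y - start.y
    moved = math.hypot(dx, dy)   # how far the finger travelled
    held = end.t - start.t       # how long the finger stayed down
    if moved <= move_slop:
        # Finger stayed roughly in place: duration decides the gesture.
        return "long_press" if held >= long_press_s else "tap"
    # Finger moved: the dominant axis decides the swipe direction.
    if abs(dx) >= abs(dy):
        return "swipe_right" if dx > 0 else "swipe_left"
    return "swipe_down" if dy > 0 else "swipe_up"


print(classify(Touch(0, 0, 0.0), Touch(2, 1, 0.1)))       # tap
print(classify(Touch(100, 50, 0.0), Touch(20, 55, 0.2)))  # swipe_left
```

Raising `move_slop` makes taps more forgiving of small finger movement, at the cost of no longer recognizing very short swipes. Tuning values like this is where the balance is actually struck.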

Accessibility Evaluation:

- Motor limitations: Users with tremors, limited dexterity, or missing limbs might struggle with precise or multi-touch gestures. Consider offering alternative interaction methods like voice commands or stylus support.
- Target size: Ensure that tappable elements (buttons, icons) are large enough to be easily targeted by users with motor impairments or visual limitations.
- Gesture recognition accuracy: The system should accurately recognize intended gestures and minimize false positives (accidental triggers) or negatives (missed gestures).
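The target-size point can be handled without changing how an element looks, by giving a small element a larger touch area. The sketch below assumes the 44 by 44 point minimum that Apple's Human Interface Guidelines recommend for touch targets; other platforms use different values. The `Rect` type and the `hit_area` function are hypothetical names used only for this Python illustration.

```python
from dataclasses import dataclass

MIN_TARGET = 44.0  # assumed minimum side length in points


@dataclass
class Rect:
    x: float       # left edge
    y: float       # top edge
    width: float
    height: float


def hit_area(visual: Rect, minimum: float = MIN_TARGET) -> Rect:
    """Return the touchable area for an element.

    Sides shorter than `minimum` are grown symmetrically around the
    element's center; sides that are already large enough are unchanged.
    """
    width = max(visual.width, minimum)
    height = max(visual.height, minimum)
    return Rect(
        x=visual.x - (width - visual.width) / 2,
        y=visual.y - (height - visual.height) / 2,
        width=width,
        height=height,
    )


# A 24 x 24 icon gets a 44 x 44 touch area centered on the same spot.
print(hit_area(Rect(10, 10, 24, 24)))
```

Enlarged touch areas can overlap when elements sit close together, so spacing between targets still matters.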

Epistemic Gestures: Unveiling the Digital Landscape

Epistemic gestures go beyond basic manipulation. They are all about exploration and information gathering, aiding users in understanding the complexities of the digital environment. Just as you might use your fingers to examine a physical object, epistemic gestures serve a similar purpose in the digital world.

For example, multi-touch gestures such as two-finger rotation let users manipulate 3D objects on the screen, allowing for in-depth exploration from different angles. This is particularly valuable in domains like design, engineering, or educational apps showcasing 3D models.
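A two-finger rotation can be measured as the change in the angle of the line joining the two fingers. The sketch below is one plain-Python way to compute it, assuming each touch is an `(x, y)` tuple and that the y axis grows downward as on most screens; the name `rotation_degrees` is hypothetical and this is not a specific platform's API.

```python
import math
from typing import Tuple

Point = Tuple[float, float]  # (x, y) position of one finger, hypothetical representation


def rotation_degrees(start: Tuple[Point, Point],
                     current: Tuple[Point, Point]) -> float:
    """Signed rotation of the two-finger line, in degrees, within [-180, 180).

    With a downward-growing y axis, positive values are clockwise on screen.
    """
    (a0, b0), (a1, b1) = start, current
    angle0 = math.atan2(b0[1] - a0[1], b0[0] - a0[0])  # starting line angle
    angle1 = math.atan2(b1[1] - a1[1], b1[0] - a1[0])  # current line angle
    delta = math.degrees(angle1 - angle0)
    # Wrap so that crossing the +/-180 degree boundary does not cause a jump.
    return (delta + 180.0) % 360.0 - 180.0


# Second finger moves from the right of the first finger to directly below it.
print(rotation_degrees(((0, 0), (10, 0)), ((0, 0), (0, 10))))  # 90.0
```

Applying the returned angle to a 3D model's orientation is what lets the user turn the object and inspect it from another side.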

Epistemic Gesture

UX Considerations:

- Clarity of information: Ensure that information revealed through epistemic gestures is relevant, concise, and directly related to the user's exploration intent.
- User discovery: Consider using subtle visual indicators to suggest the availability of epistemic gestures on specific UI elements.
- Context-awareness: Tailor the information revealed through epistemic gestures to the specific context of the user's interaction.

Accessibility Evaluation:

- Cognitive limitations: Users with cognitive impairments might struggle to understand complex gestures or the information revealed through them. Consider offering alternative information access methods like text descriptions or audio cues.
- Visual limitations: Ensure that visual cues indicating the availability of epistemic gestures are accompanied by non-visual cues (like audio notifications) for users with visual impairments.
- Information clarity: The information revealed through epistemic gestures should be presented in clear, concise language and avoid complex terminology.

Semiotic Gestures: The Power of Symbolism

Semiotic gestures bridge the gap between verbal and non-verbal communication in the digital world. They carry symbolic meaning and can express emotions or social cues within an app interface. A familiar example is double-tapping a post to "like" it in many social apps.

Semiotic Gestures

UX Considerations:

- Cultural sensitivity: Be cautious when using semiotic gestures, and prioritize universally understood symbols where possible.
- Reflect the user's intent: Ensure that the semiotic gesture aligns with the intended user action or emotional expression.

Accessibility Evaluation:

- Cultural context: Users from different cultures might misinterpret the meaning of semiotic gestures. Consider offering explanations or alternative ways to express emotions or actions.

Why Use Gestures?

The Case for Natural Interaction

Incorporating gestures into user interfaces is a growing trend that offers a range of ergonomic benefits. By reducing the need for repetitive clicks and taps, gestures can lessen the physical strain on users' hands and fingers, making interactions more comfortable and less tiring. Moreover, gestures map naturally onto the way the human body already moves, which makes interactions more intuitive and engaging.

Gestures can also let users perform tasks faster and more efficiently, which is especially important in high-stress environments where speed and accuracy are essential. Overall, the adoption of gestures in user interfaces has become an important consideration for designers and developers seeking to create intuitive, accessible, and user-friendly experiences that meet the needs of a wide range of users.

User Experience and Accessibility

Gestures can improve the user experience by reducing the cognitive load required to interact with digital devices. By mimicking natural human motions and leveraging familiar actions, gestural interfaces can decrease learning time and enhance usability. In addition, gestural interfaces can enhance productivity and efficiency in different fields, from gaming and entertainment to medical and industrial applications.

Recent research in haptic technology and tactile feedback has focused on improving gestural interfaces so that they provide better guidance and confirmation of actions. This is particularly important for users who rely on sensory feedback, such as those with visual or hearing impairments. In terms of everyday interaction habits, gestures have changed the way many people with disabilities interact with technology, allowing them to use their devices more efficiently and independently.

Nowadays, gestures are integrated with other assistive technologies, such as VoiceOver and eye-tracking, creating multimodal systems that cater to a broader range of impairments.

For those who are interested in VoiceOver, we recommend reading the article below:

These integrations help make the user experience inclusive for users with different interaction needs. Beyond these assistive technologies, other innovative systems have been developed to recognize and adapt to the physical capabilities of individual users.

Conclusion

The future of gesture technology, enriched by AI and machine learning, promises interfaces that are even more intuitive and adaptive. Emerging technologies will likely include context-aware gestures and emotionally responsive systems, which will tailor interactions based on the user’s immediate environment and emotional state.

As we explore the full potential of gestures, their role in shaping future digital interactions is undeniable. Embracing thoughtful gesture integration and continual innovation will be key to enhancing user engagement and satisfaction.

As developers and designers, our challenge is to envision and implement gestural interfaces that are not only intuitive but also inclusively designed, ensuring accessibility for all users.