Using an Image Classification Machine Learning Model in Swift Playgrounds

By the end of this tutorial you will be able to use an image classification Core ML model in Swift Playgrounds.

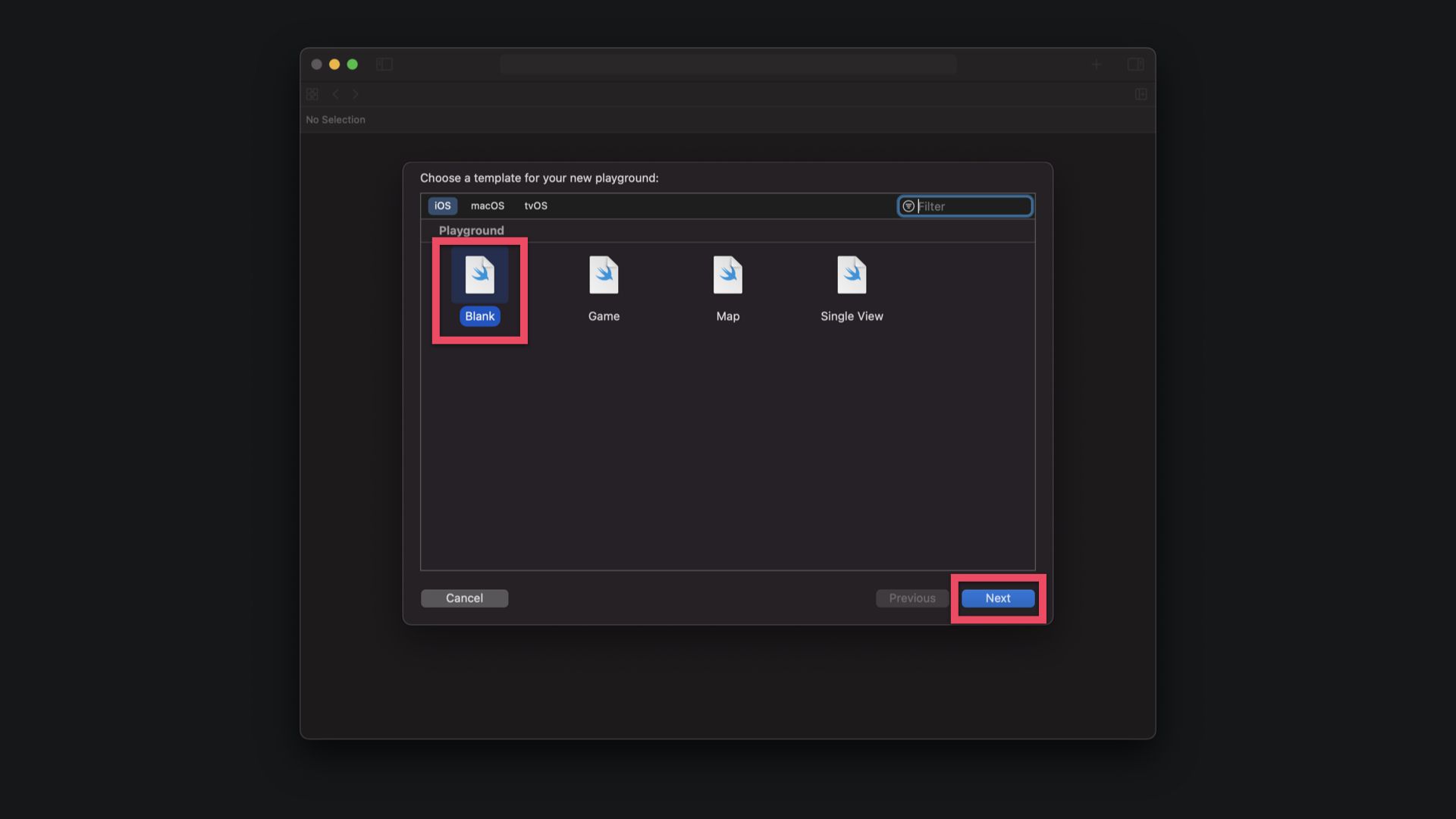

After launching Xcode, use the menu bar to select File > New > Playground... or use the Shift⇧ + Option⌥ + CMD⌘ + N keyboard shortcut to create a new Playground. A setup wizard will then guide you through configuring the basic settings of the Playground.

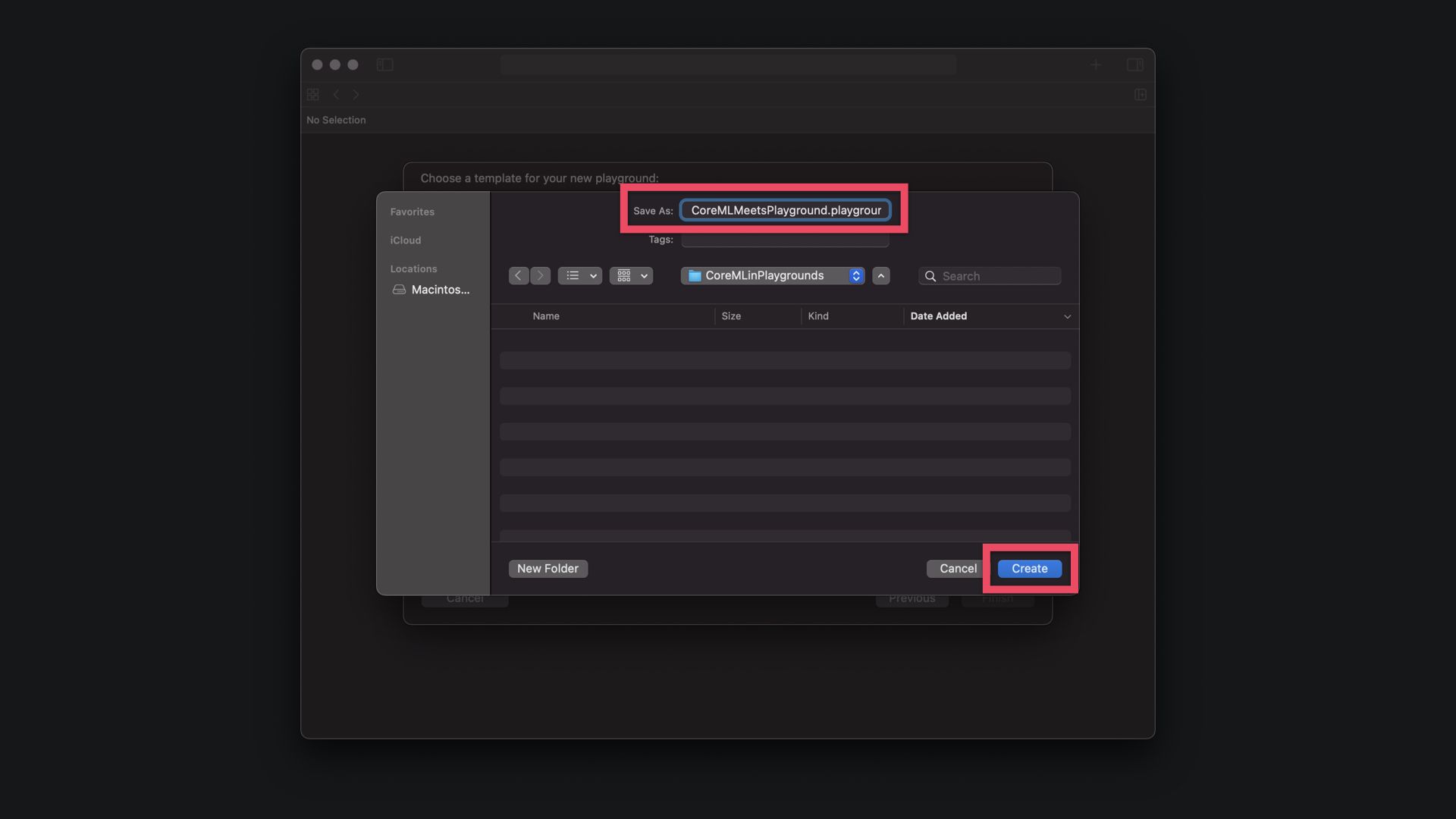

Choose "Blank" and then click "Next" at the bottom right. In the following step, choose a file name to save the Playground and click "Create". Xcode will create the Playground files and open the editor.

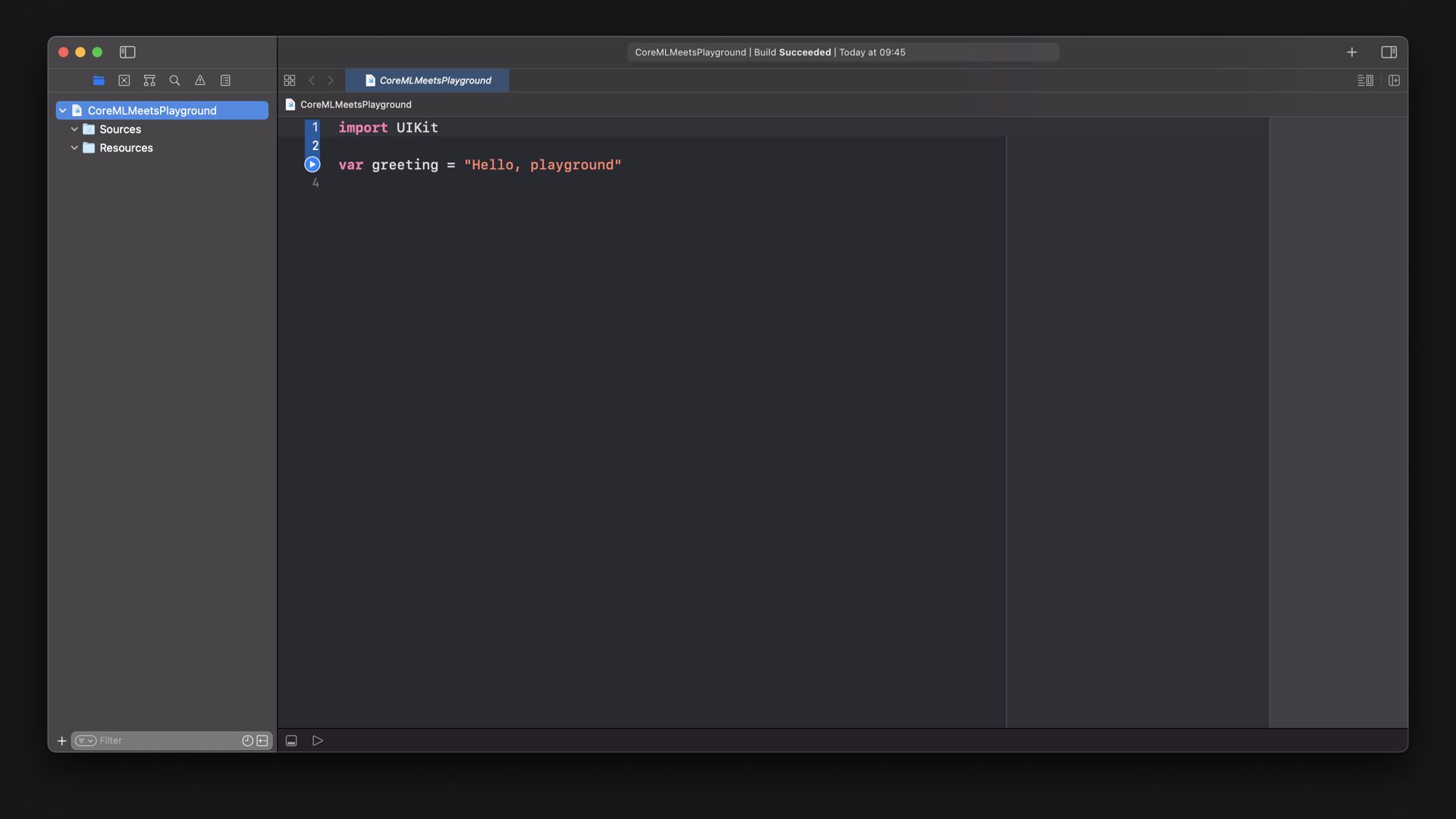

The Playground opens with a simple "Hello, playground" String in the main editor. If it is not open already, click on the Navigator icon to show the sidebar. The Playground has a Sources and a Resources folder in which additional files can be stored. The Sources folder may contain other source code files, while the Resources folder may contain any type of file, such as images, text files or, in our case, the machine learning model.

Now, let's see how to work with machine learning models inside a Playground.

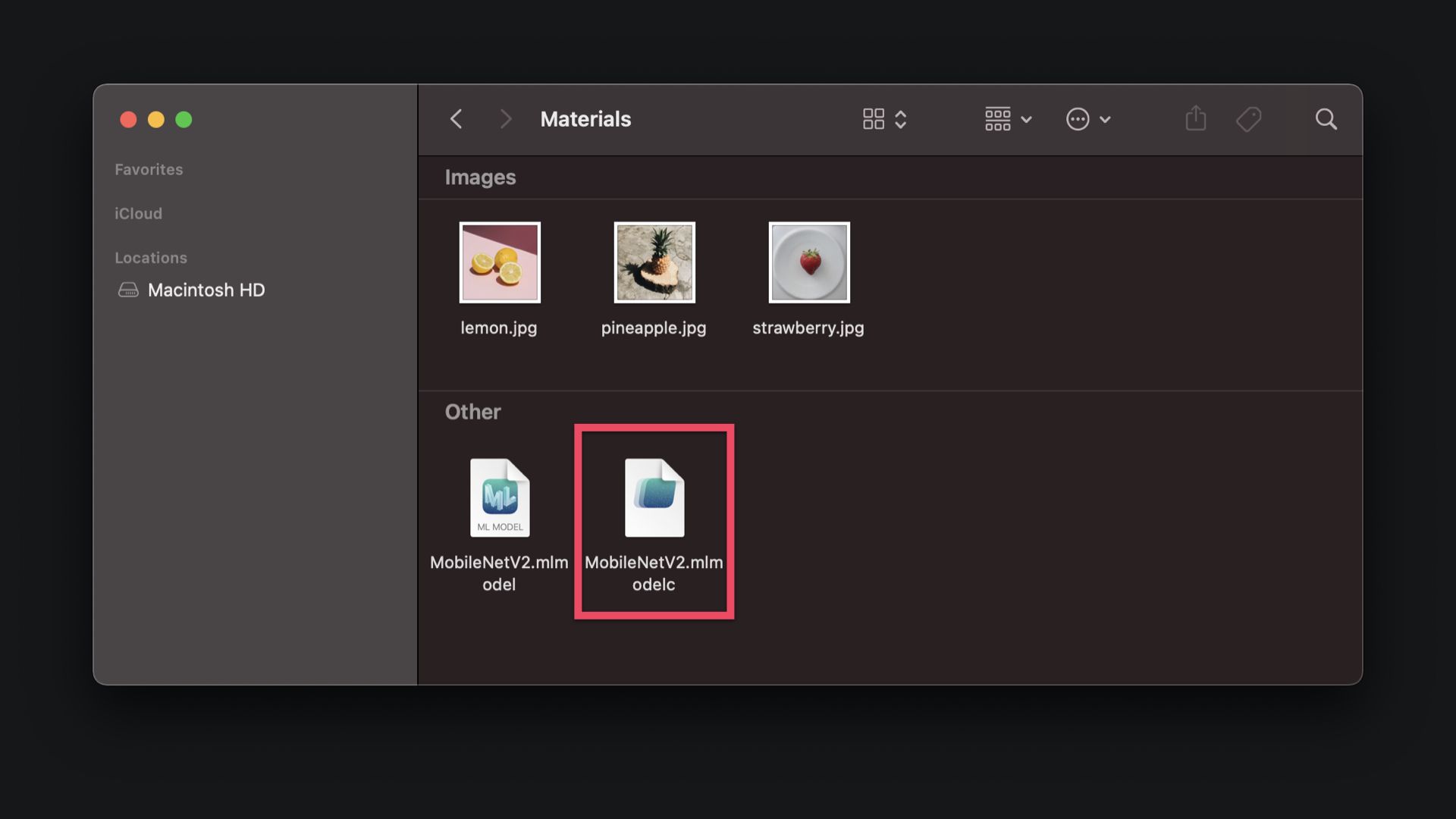

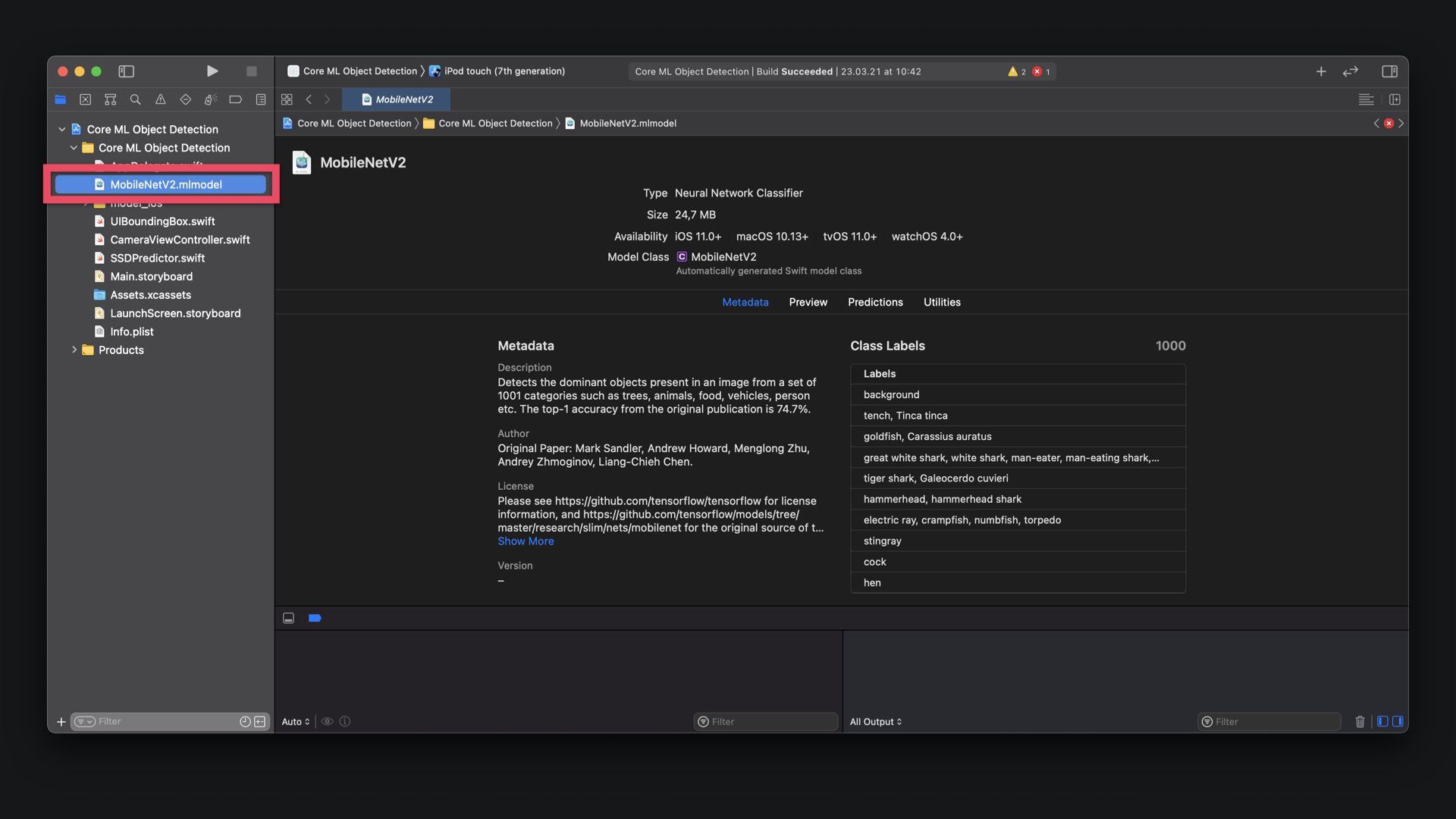

To work in a Playground, a machine learning model needs to be added as a compiled model with the .mlmodelc extension, which you can find in the Derived Data folder once an app using the model has been built in Xcode.

Xcode generates the .mlmodelc automatically when a model file with the .mlmodel extension is added to an Xcode project and the project is built.

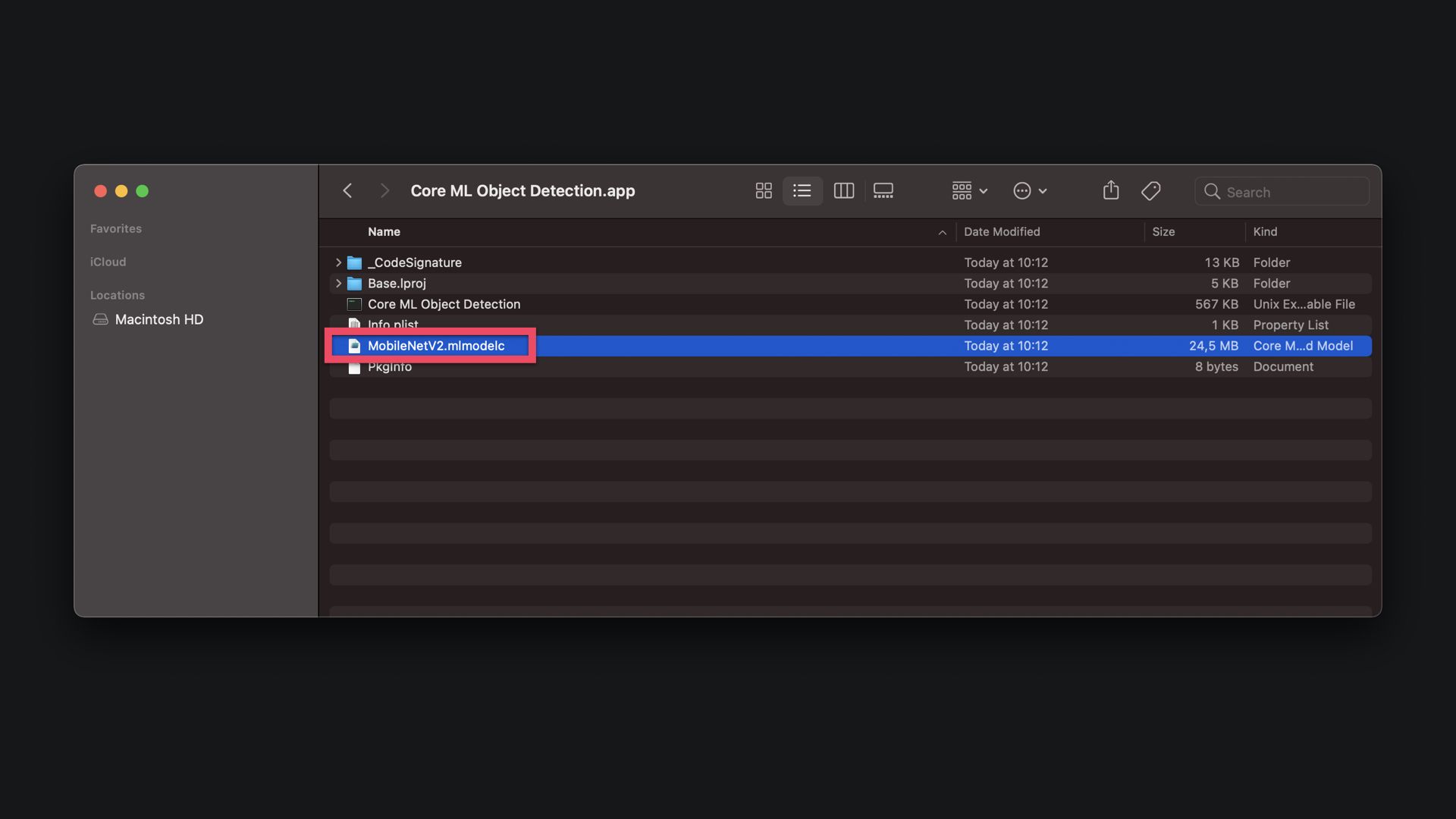

It can be found in ~/Library/Developer/Xcode/DerivedData, where all projects are built by default. Open the corresponding app’s folder and navigate to the "Build" and then the "Products" folder. Inside there will be a "Debug" folder that contains the .app bundle. Control-click on the app and select "Show Package Contents". Inside you will find a .mlmodelc file, which is the compiled binary of the model. Copy this into your Playground to use the model.

Inside the corresponding project's folder, it is located at /Build/Products/Debug-iphonesimulator/NameOfTheApp.app/NameOfTheMachineLearningModel.mlmodelc.
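If you would rather not browse Derived Data by hand, the search can be scripted. The following is a minimal sketch, assuming it runs on macOS (for example in a macOS Playground or as a command-line script) with Derived Data in its default location. The function name findCompiledModels is hypothetical and not part of any Apple API.

```swift
import Foundation

/// Collects every compiled model bundle (.mlmodelc) found below `root`.
/// `findCompiledModels` is a hypothetical helper name used for illustration.
func findCompiledModels(under root: URL) -> [URL] {
    guard let enumerator = FileManager.default.enumerator(
        at: root,
        includingPropertiesForKeys: nil
    ) else { return [] }

    var models: [URL] = []
    for case let url as URL in enumerator where url.pathExtension == "mlmodelc" {
        models.append(url)
        // A .mlmodelc is itself a folder, so there is no need to look inside it.
        enumerator.skipDescendants()
    }
    return models
}

// Assumption: Derived Data lives in its default location below the home folder.
let derivedData = URL(fileURLWithPath: NSHomeDirectory())
    .appendingPathComponent("Library/Developer/Xcode/DerivedData")

for model in findCompiledModels(under: derivedData) {
    print(model.path)
}
```

An iOS Playground runs inside the simulator's sandbox, so this search is best run outside of the Playground that will later host the model.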

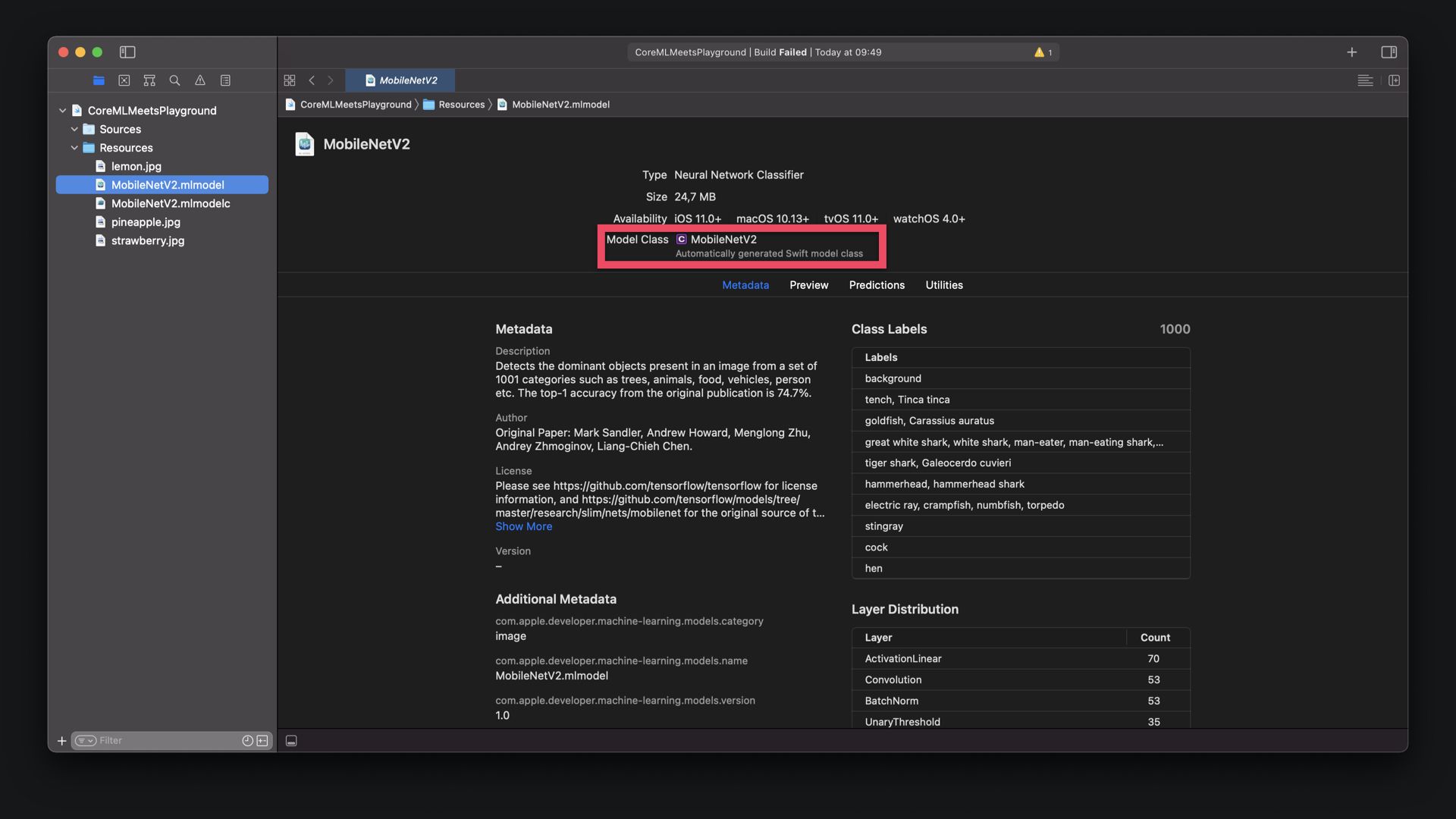

The .mlmodelc file can be added via drag and drop to the Playground's Resources folder. This tutorial uses the MobileNetV2 Core ML model, which can be downloaded from the Apple Core ML website.

For your convenience, the .mlmodelc file can also be downloaded here alongside some sample images that can later be used to test the model in the Playground. Add the images to the Playground's Resources folder by dragging and dropping them into it.
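A quick way to confirm that the files ended up in the Resources folder is to ask the main bundle for them from the Playground page. This is a minimal sketch, assuming the compiled model is named MobileNetV2.mlmodelc and one of the sample images is lemon.jpg. The helper missingResources is hypothetical.

```swift
import Foundation

/// Returns the file names from `fileNames` that cannot be found in `bundle`.
/// `missingResources` is a hypothetical helper name used for illustration.
func missingResources(_ fileNames: [String], in bundle: Bundle = .main) -> [String] {
    fileNames.filter { fileName in
        let fileURL = URL(fileURLWithPath: fileName)
        let name = fileURL.deletingPathExtension().lastPathComponent
        let ext = fileURL.pathExtension
        return bundle.url(forResource: name, withExtension: ext) == nil
    }
}

// Assumed file names: adjust them to match what you dragged into 'Resources'.
let expectedResources = ["MobileNetV2.mlmodelc", "lemon.jpg"]
let missing = missingResources(expectedResources)
print(missing.isEmpty ? "All resources found." : "Missing from Resources: \(missing)")
```

If a file is reported as missing, check that it sits directly in the Resources folder and that the name matches exactly, including the extension.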

The Core ML .mlmodel file can also be added to the Playground's Resources folder. Once added, the Model Class named MobileNetV2 can be opened by double-clicking on the symbol in the Metadata tab of the model. It will be opened in a new tab in the Playground.

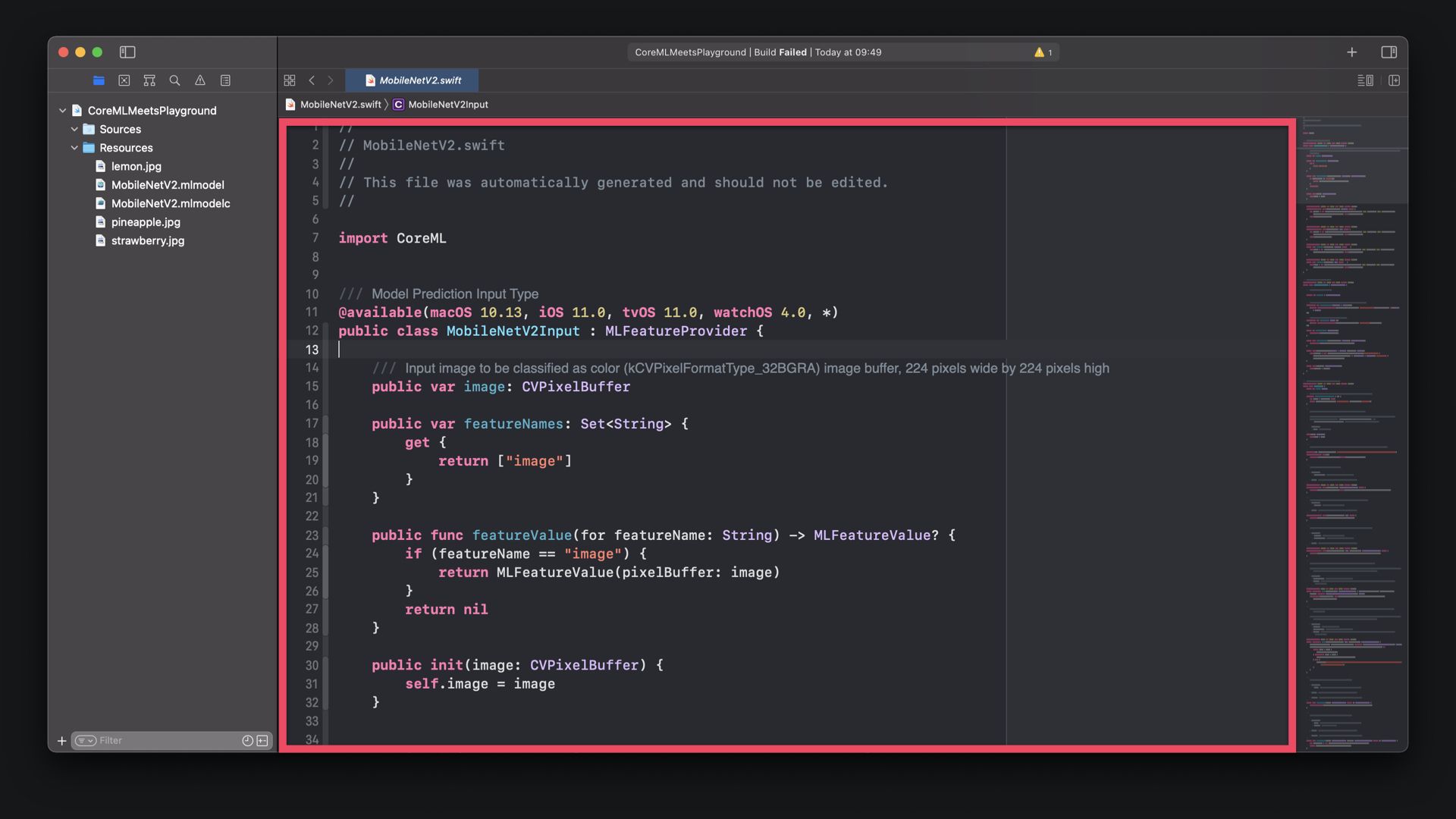

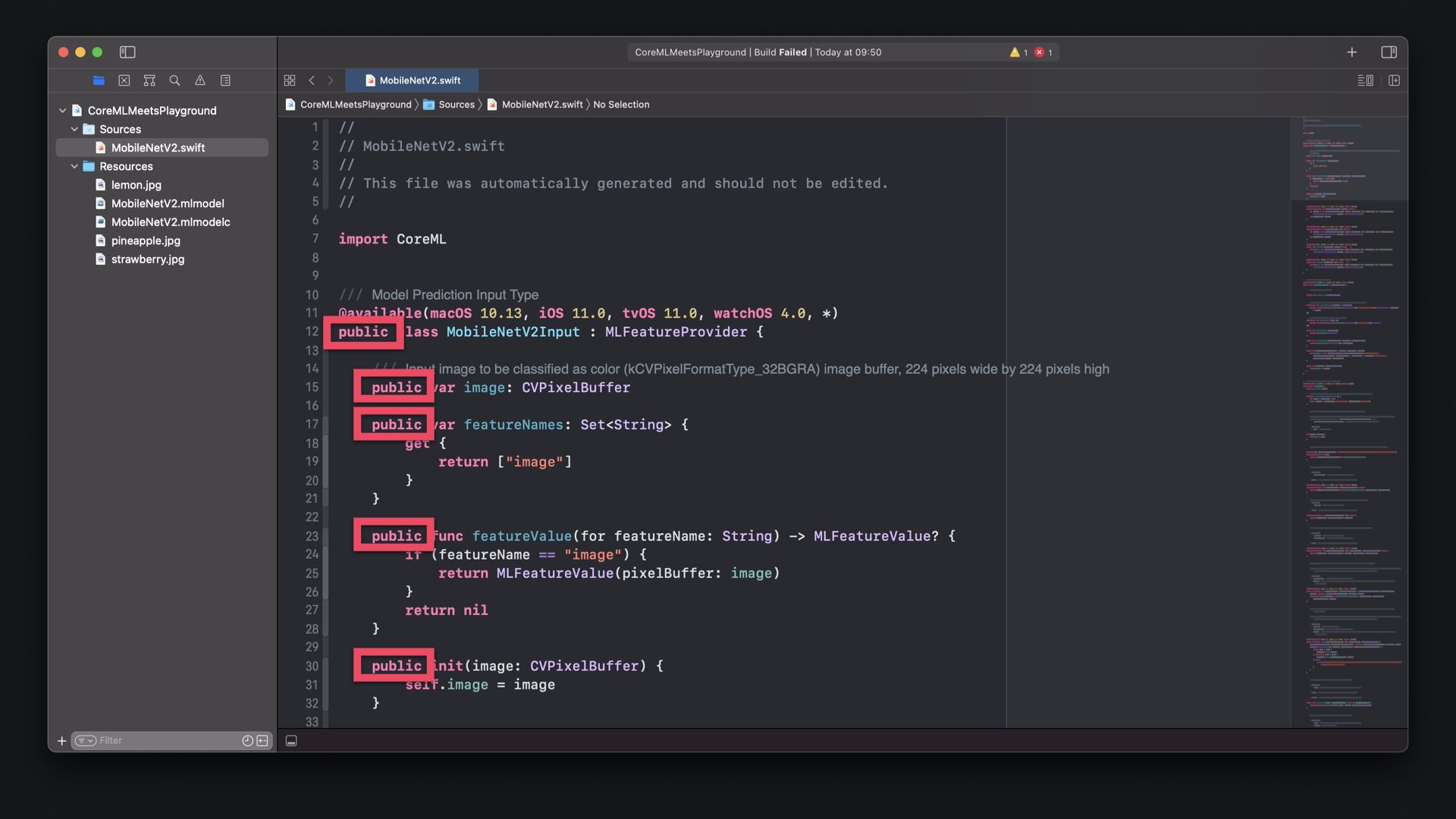

The MobileNetV2.swift file contains all the code needed to use the Core ML model. For the purpose of this tutorial, it is not critical to understand the entire code of the class. To use the Core ML model in the Playground, copy the entire code and then close the file.

Within the Playground go to the menu and select File > New > File or use the CMD⌘ + N keyboard shortcut to create a new source file. Use the name MobileNetV2.swift and paste in the entire code from the Model Class you just copied.

By default, the auto-generated Swift sources use the internal access level. All classes, their properties, functions and initialisers have to be made public, because any source that is not part of a Playground page needs public access to be usable from that page.
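To illustrate which declarations need the public modifier, here is a minimal sketch using a hypothetical Greeter class rather than the generated model code. The same pattern applies to the classes in MobileNetV2.swift: the type itself, its initialisers, and every property or function you call from the page must be marked public.

```swift
// Hypothetical example of a file placed in the Playground's 'Sources' folder.
public class Greeter {
    // Properties read from the Playground page must be public.
    public let name: String

    // Initialisers are internal by default, even on a public class,
    // so the initialiser needs its own public modifier.
    public init(name: String) {
        self.name = name
    }

    // Functions called from the Playground page must be public as well.
    public func greeting() -> String {
        "Hello, \(name)"
    }
}

// On the Playground page, the class is then usable like any other type.
let greeter = Greeter(name: "playground")
print(greeter.greeting())
```

Leaving out any one of these modifiers typically shows up as an "inaccessible due to 'internal' protection level" error on the Playground page.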

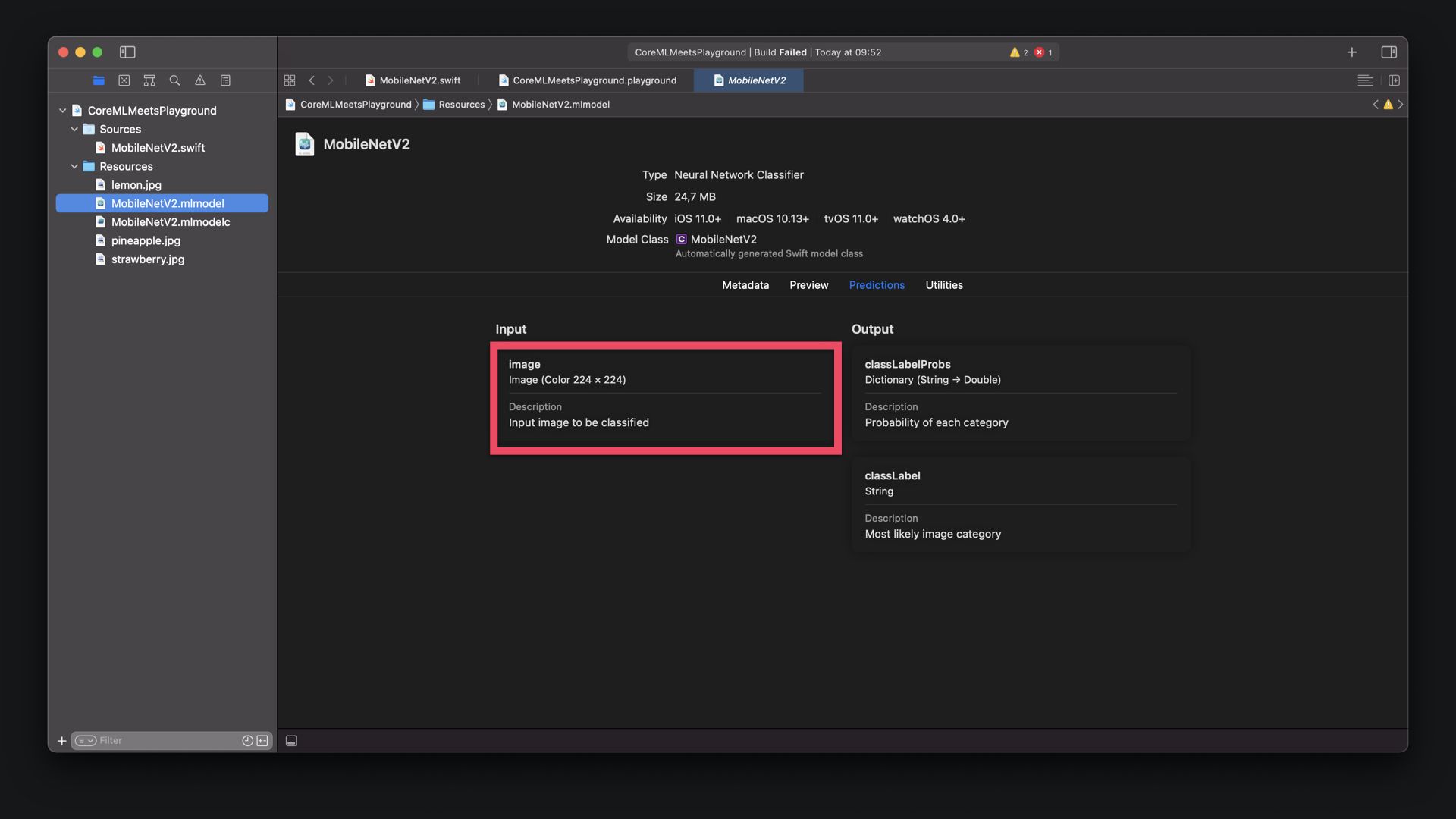

In the Predictions tab of the .mlmodel file, the input parameters for the Core ML model can be verified. In this case, the MobileNetV2 model expects square images with dimensions of 224 x 224 pixels to execute the classification.

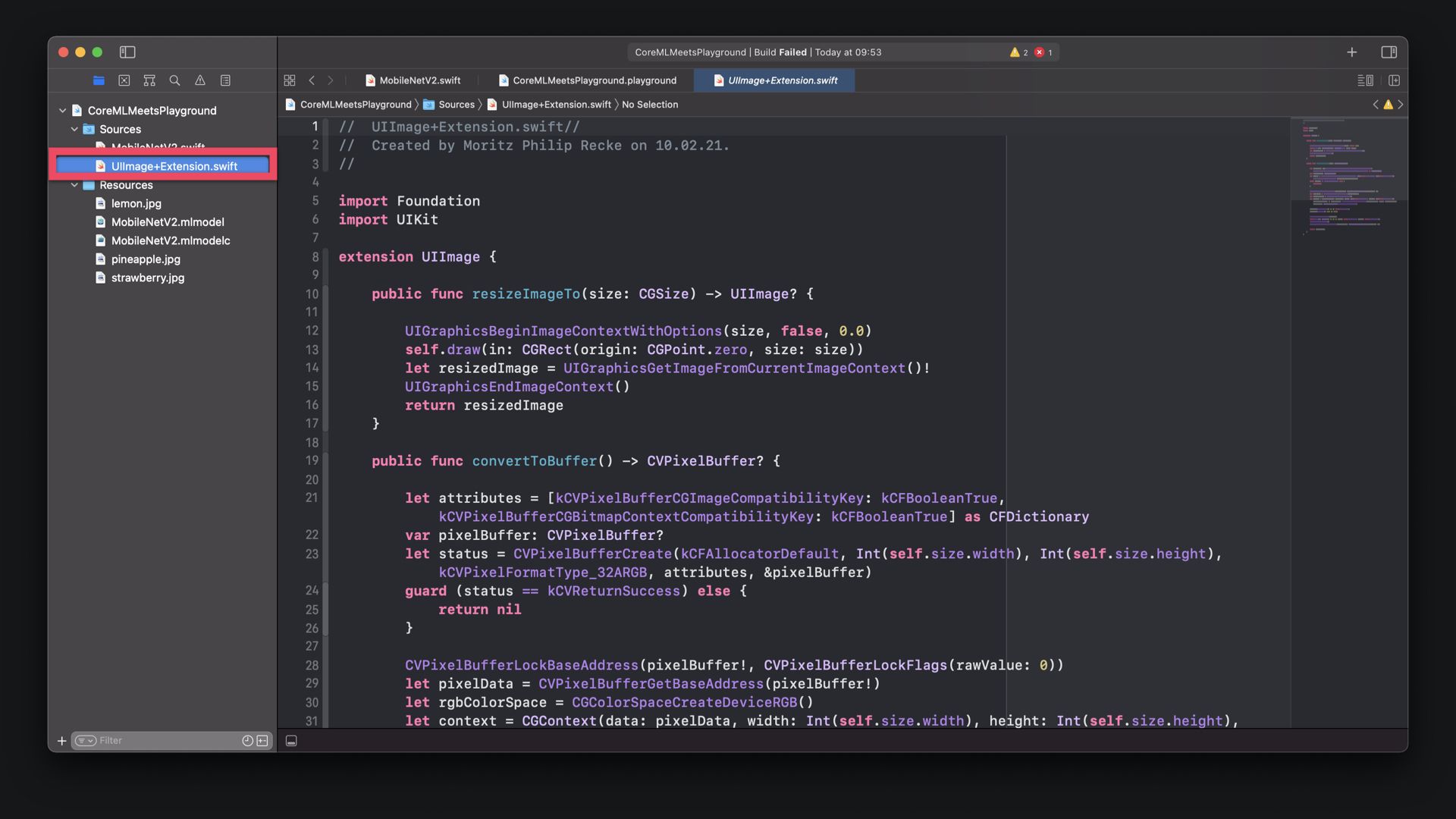

This means that we have to resize any image to those dimensions for it to be accepted. Also, the input type is not a UIImage but either a Core Image image (CIImage), a Core Graphics image (CGImage) or a Core Video pixel buffer (CVPixelBuffer). To create the CVPixelBuffer, the image has to be converted, which is not a trivial task. The easiest way to achieve both goals is to add some extensions to UIImage.

For the purpose of the tutorial, feel free to use this GitLab snippet to copy the source code for the UIImage+Extension.swift file used in this tutorial. However, for usage in a Playground, the functions have to be made public to be usable within the main Playground page. Inside UIImage+Extension.swift you can see two functions added to the class:

- resizeImageTo(): which will resize the image to a provided size and return a resized UIImage;

- convertToBuffer(): which will convert the UIImage to a CVPixelBuffer.
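To give an idea of what such an extension can look like, here is a minimal sketch. It is not necessarily identical to the code in the GitLab snippet: the function names and the size: label follow the calls used in this tutorial, while the renderer-based resizing, the scale of 1 and the 32ARGB pixel format are assumptions made for this example.

```swift
import UIKit
import CoreVideo

extension UIImage {

    /// Draws the image into a new image of the given size.
    public func resizeImageTo(size: CGSize) -> UIImage? {
        let format = UIGraphicsImageRendererFormat()
        // Assumption: one pixel per point, so 224 x 224 points is 224 x 224 pixels.
        format.scale = 1
        let renderer = UIGraphicsImageRenderer(size: size, format: format)
        return renderer.image { _ in
            draw(in: CGRect(origin: .zero, size: size))
        }
    }

    /// Renders the image into a newly created CVPixelBuffer.
    public func convertToBuffer() -> CVPixelBuffer? {
        let width = Int(size.width)
        let height = Int(size.height)
        let attributes = [
            kCVPixelBufferCGImageCompatibilityKey: kCFBooleanTrue,
            kCVPixelBufferCGBitmapContextCompatibilityKey: kCFBooleanTrue
        ] as CFDictionary

        var pixelBuffer: CVPixelBuffer?
        let status = CVPixelBufferCreate(kCFAllocatorDefault, width, height,
                                         kCVPixelFormatType_32ARGB, attributes, &pixelBuffer)
        guard status == kCVReturnSuccess, let buffer = pixelBuffer else { return nil }

        // Lock the buffer while Core Graphics writes into its memory.
        CVPixelBufferLockBaseAddress(buffer, CVPixelBufferLockFlags(rawValue: 0))
        defer { CVPixelBufferUnlockBaseAddress(buffer, CVPixelBufferLockFlags(rawValue: 0)) }

        guard let context = CGContext(
            data: CVPixelBufferGetBaseAddress(buffer),
            width: width,
            height: height,
            bitsPerComponent: 8,
            bytesPerRow: CVPixelBufferGetBytesPerRow(buffer),
            space: CGColorSpaceCreateDeviceRGB(),
            bitmapInfo: CGImageAlphaInfo.noneSkipFirst.rawValue
        ) else { return nil }

        // Core Graphics places the origin at the bottom left, UIKit at the top left,
        // so the context is flipped before drawing.
        context.translateBy(x: 0, y: CGFloat(height))
        context.scaleBy(x: 1, y: -1)

        UIGraphicsPushContext(context)
        draw(in: CGRect(x: 0, y: 0, width: width, height: height))
        UIGraphicsPopContext()

        return buffer
    }
}
```

Note that this resizing stretches the image to the target size without preserving its aspect ratio, which is usually acceptable for a quick classification test.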

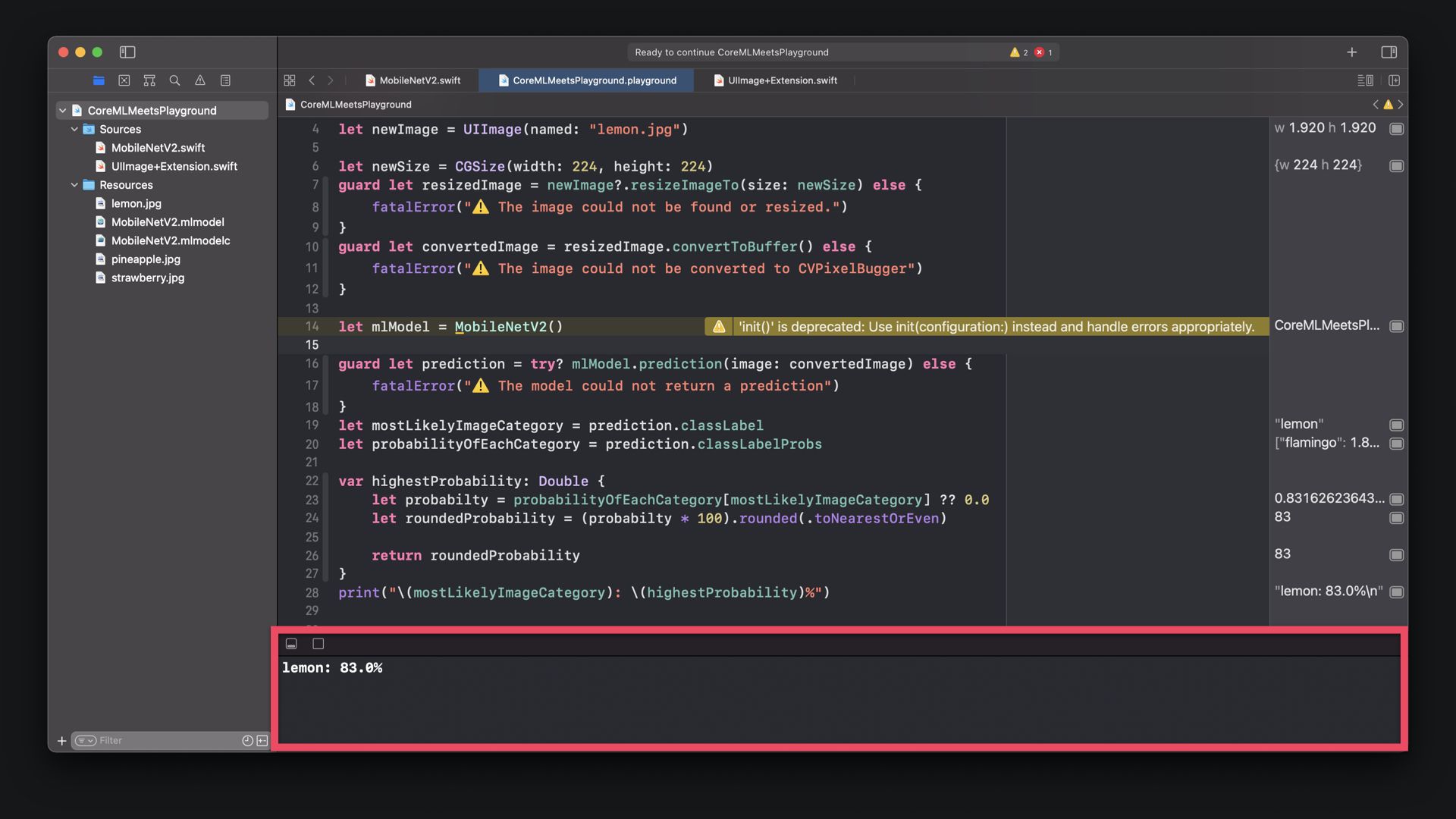

On the main Playground page, import Core ML (alongside UIKit, which provides UIImage) to use the framework within the page. Create a constant newImage as a UIImage using one of the sample images and prepare it for the model by resizing it and converting it to a CVPixelBuffer.

    import UIKit
    import CoreML

    // 1. Load the image from the 'Resources' folder.
    let newImage = UIImage(named: "lemon.jpg")

    // 2. Resize the image to the required input dimension of the Core ML model.
    //    Method from UIImage+Extension.swift
    let newSize = CGSize(width: 224, height: 224)
    guard let resizedImage = newImage?.resizeImageTo(size: newSize) else {
        fatalError("⚠️ The image could not be found or resized.")
    }

    // 3. Convert the resized image to CVPixelBuffer as it is the required input
    //    type of the Core ML model. Method from UIImage+Extension.swift
    guard let convertedImage = resizedImage.convertToBuffer() else {
        fatalError("⚠️ The image could not be converted to CVPixelBuffer.")
    }

Now you can create an instance of the Core ML model to classify the image by using a prediction.

In the .mlmodel file, you can also check the output parameters for the Core ML model. In this case, the MobileNetV2 model will deliver two outputs:

- A classLabel as String with the most likely classification;

- A classLabelProbs dictionary of Strings and Doubles with the probability for each classification category.

These outputs come in handy in the following implementation, which checks the result of the prediction and prints the most likely category to the console:

    // 1. Create the ML model instance from the model class in the 'Sources' folder
    let mlModel = MobileNetV2()

    // 2. Get the prediction output
    guard let prediction = try? mlModel.prediction(image: convertedImage) else {
        fatalError("⚠️ The model could not return a prediction")
    }

    // 3. Check the results of the prediction
    let mostLikelyImageCategory = prediction.classLabel
    let probabilityOfEachCategory = prediction.classLabelProbs

    // Probability of the most likely category as a percentage, rounded to a whole number
    var highestProbability: Double {
        let probability = probabilityOfEachCategory[mostLikelyImageCategory] ?? 0.0
        let roundedProbability = (probability * 100).rounded(.toNearestOrEven)
        return roundedProbability
    }

    print("\(mostLikelyImageCategory): \(highestProbability)%")
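Since classLabelProbs holds a probability for every category, it can also be used to list the runners-up and not just the winner. The following is a minimal sketch of that idea. The helper topCategories and the sample probabilities are hypothetical, and in the Playground you would pass prediction.classLabelProbs instead of the sample dictionary.

```swift
import Foundation

/// Returns the `count` most probable categories, highest probability first,
/// with each probability expressed as a rounded percentage.
/// `topCategories` is a hypothetical helper name used for illustration.
func topCategories(in probabilities: [String: Double],
                   count: Int = 3) -> [(label: String, percent: Double)] {
    probabilities
        .sorted { $0.value > $1.value }
        .prefix(max(count, 0))
        .map { (label: $0.key, percent: ($0.value * 100).rounded()) }
}

// Made-up probabilities standing in for prediction.classLabelProbs.
let sampleProbabilities = ["lemon": 0.91, "orange": 0.06, "banana": 0.02, "fig": 0.01]

for category in topCategories(in: sampleProbabilities) {
    print("\(category.label): \(category.percent)%")
}
```

Looking at several categories at once can make it easier to judge how confident the model really is about an image.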

The entire code for this tutorial can be found in this GitLab snippet. It allows you to use both object detection and image classification models in Swift Playgrounds. The same approach also works for other Core ML models.

This tutorial is part of a series of articles derived from the presentation Creating Machine Learning Models with Create ML, presented as a one-time event at the Swift Heroes 2021 Digital Conference on April 16th, 2021.

Where to go next?

If you are interested in knowing more about using Object Detection models or Core ML in general, you can check other tutorials on:

- UIImage+CVPixelBuffer: Converting an UIImage to a Core Video Pixel Buffer

- Core ML Explained: Apple's Machine Learning Framework

- Use an Object Detection Machine Learning Model in Swift Playgrounds

- Use an Object Detection Machine Learning Model in an iOS App

- Use an Image Classification Machine Learning in an iOS App with SwiftUI

Recommended Content provided by Apple

For a deeper dive on the topic of creating object detection machine learning models, you can watch the videos released by Apple at WWDC:

- WWDC 2018 - Getting the Most out of Playgrounds in Xcode

- WWDC 2019 - Swift Playgrounds 3

- WWDC 2020 - Explore Packages and Projects with Xcode Playgrounds

Other Resources

If you are interested in knowing more about using machine learning models or Core ML, you can go through these resources:

- To understand how to use a custom Vision & Core ML model in Swift Playgrounds, explore Emotion recognition for cats — Custom Vision & Core ML on a Swift Playground.

- To understand how to integrate a Core ML model in your app, check out this sample code provided by Apple.

- To learn how to use Core Data in Swift Playgrounds, follow this tutorial.