Weekly Newsletter Issue 34

Weekly newsletter summing up our publications and showcasing app developers and their amazing creations.

Welcome to this week's edition of our newsletter.

During its multi-day event this week, Apple introduced a refreshed Mac lineup, including new iMacs, a new Mac mini, and new MacBook Pro models, all powered by the M4 family of chips. Apple also moved the Magic Keyboard, Magic Trackpad, and Magic Mouse to USB-C.

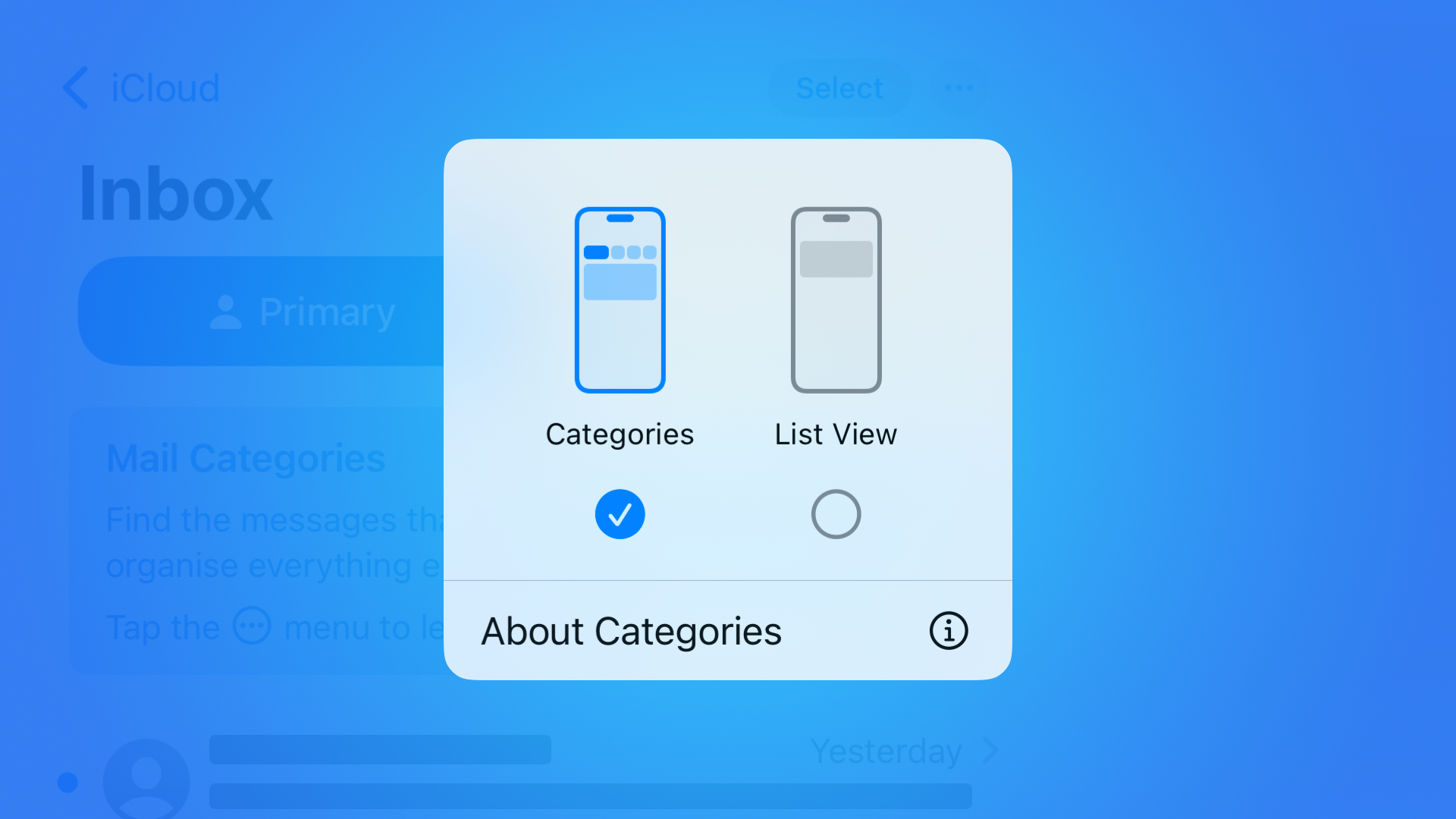

Apple Intelligence launched in the U.S. last week with the iOS 18.2 beta and is now rolling out to everyone with iOS 18.1. While EU users can access it in U.S. English on the Mac after joining a waitlist, full access to Apple Intelligence on iPhone and iPad will begin in April, as noted in recent European Apple Newsroom articles.

Published this week

This week we covered Vision, SwiftUI, and Apple Intelligence.

Identifying attention areas in images with Vision Framework

Tiago shows how to use the Vision framework to perform saliency analysis and identify the areas of an image that attract the most attention.

Implement blurring when multitasking in SwiftUI

Giovanni provides a short step-by-step guide to automatic screen blurring in SwiftUI. The approach reads scenePhase to detect when the app enters multitasking or the background, then applies a blur effect so that sensitive content is obscured while the app is inactive.
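As a rough illustration of the idea (not the code from Giovanni's article), a minimal sketch could look like the following. The view name, the placeholder text, and the blur radius of 20 are assumptions made for this example.

```swift
import SwiftUI

// Hypothetical view used only for illustration; not taken from the article.
struct SensitiveContentView: View {
    // scenePhase reports whether the scene is active, inactive, or in the background.
    @Environment(\.scenePhase) private var scenePhase

    var body: some View {
        Text("Account balance: 1,234.56")
            .padding()
            // Blur the content whenever the scene is not active,
            // for example while the app switcher is showing.
            .blur(radius: scenePhase == .active ? 0 : 20)
            // Animate the transition between the sharp and blurred states.
            .animation(.default, value: scenePhase)
    }
}
```

Applying the blur to a root container rather than a single view would cover the whole screen; which views count as sensitive is a per-app decision.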

Exploring Apple Intelligence: Writing Tools

Matteo and Antonella start a new series of articles exploring the features powered by Apple Intelligence. The first installment covers Writing Tools: how the feature works and how to ensure your application supports it.

Make it Intelligent

We’re releasing a new section of Create with Swift, Make It Intelligent!

This page is a comprehensive collection of articles and tutorials designed to help you navigate our content, making it easier to integrate Core ML, Vision, Natural Language, and other intelligent features seamlessly into your apps.

Dive into the resources and insights for developing adaptive and intelligent applications using machine learning and AI on Apple platforms.

From the community

Here are our highlights of articles and resources created by the app developer community.

Introducing Swift Testing. Basics.

Majid starts a series on Swift Testing. This first article covers the basics and shows how to use the #expect and #require macros.

Custom Views in UIMenu

Seb discusses how to use private APIs in UIMenu on iOS, providing code examples for creating interactive custom menu elements and headers. Although using these undocumented APIs can lead to App Store rejection, the article remains an interesting read.

Understanding actors in Swift

Natascha introduces actors in Swift, which prevent data races by isolating mutable state in concurrent code. She also covers nonisolated declarations for cases where synchronous access to an actor is required.
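To make the two ideas concrete, here is a small sketch that is independent of Natascha's article. The VisitCounter type, its members, and the runDemo function are hypothetical names chosen for this example.

```swift
// Hypothetical actor used only for illustration.
actor VisitCounter {
    // Immutable state is safe to read from any context.
    let name: String
    // Mutable state is isolated: only code running on the actor can touch it.
    private var count = 0

    init(name: String) {
        self.name = name
    }

    // Actor-isolated method: callers outside the actor must use `await`.
    func increment() -> Int {
        count += 1
        return count
    }

    // Actor-isolated read of the current total.
    var total: Int { count }

    // `nonisolated` opts out of isolation, so this can be called synchronously.
    // It may only use state that is safe to share, such as the immutable `name`.
    nonisolated func label() -> String {
        "Counter: \(name)"
    }
}

// Increments the counter from 100 concurrent child tasks.
// The actor serializes access, so no increments are lost.
func runDemo() async -> Int {
    let counter = VisitCounter(name: "home")
    await withTaskGroup(of: Void.self) { group in
        for _ in 0..<100 {
            group.addTask { _ = await counter.increment() }
        }
    }
    return await counter.total
}
```

Calling label() needs no await because it never touches the isolated count, while every access to count from outside the actor goes through an await.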

Improve your app's UX with SwiftUI's task view modifier

Peter discusses the task view modifier and the Task.sleep(for:) method, then combines them into a reusable delayed task modifier that extends SwiftUI's existing one.

Indie App of the Week

Word??

Inspired by personal experience with a family member, Lalo developed Word?? to support individuals with aphasia and other language disorders, enabling effective communication through message pinning, machine learning-powered word suggestions, and drawing recognition.

This project highlights how advanced device features can significantly enhance accessibility for people with communication challenges. The project is currently a work in progress, and a public repository is available to show how some of the app features are implemented.

This week, GitHub Copilot came to Xcode!

The popular AI coding assistant is now available in beta for developers. The new Xcode extension seamlessly integrates into your development workflow, providing direct inline coding suggestions as you type.

We can’t wait to see what you will Create with Swift.

See you next week!